AMD is poking fun at the almost homeopathic amounts of memory on NVIDIA's Ada mid-range cards, NVIDIA feels secure thanks to its smart memory management, and in the end the customer no longer knows what to buy. I do not question that the memory on these cards is too tightly measured. It is what it is, and it cannot be glossed over. However, you can also test the side effects and the behavior of both manufacturers' cards in the borderline area where memory demand and memory capacity meet exactly, because that is where the two approaches could not be more different. It is also interesting to see what happens with only 6 GB in Ultra HD. Doesn't work? Yes, it does! But somehow differently than expected.

Preface and system requirements

To be able to test all this at all, you need one graphics card each from AMD and NVIDIA with at least 32 GB of memory. For this I have the Radeon Pro W7800 with 32 GB and the RTX 6000 Ada with 48 GB. As the test game I use "The Last of Us Part 1" (TLOU1), in which both cards face a memory requirement of 10 GB at identical settings and still deliver playable frame rates when enough free memory is available. It is also not an NVIDIA title like Cyberpunk 2077, but a console port with slight advantages for AMD.

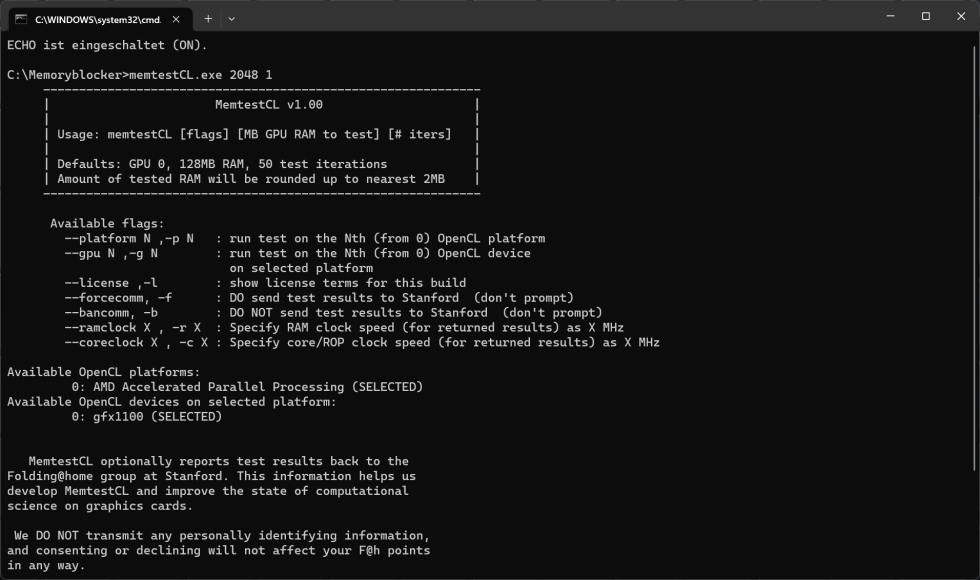

We tested with the latest Steam version of the game and the latest drivers from AMD and NVIDIA on an MSI X670E Ace with a water-cooled Ryzen 9 7950X and DDR5-6000, two fast 2 TB PCIe 4.0 NVMe SSDs (one for the system, one for the game), and the two workstation graphics cards mentioned above with their enormous amounts of memory. I can artificially reduce this memory between sessions by reserving (i.e. allocating) appropriately sized blocks with memtestcl. This is quite easy: run a single pass of the command-line program via a batch file and, when it stops at the pause command, simply minimize the window to the taskbar. The memory is not released until you press a key in that window. You can repeat this with as many windows as you like. It is only important to close the game each time and to restart it only after the new “memory configuration” has been set up.
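
The arithmetic behind this reservation can be sketched in a few lines of Python: how many fixed-size blocks have to be held so that roughly the target amount of VRAM stays free. The function name and the 512 MB block size are hypothetical; the article does not state memtestcl's allocation granularity, and driver and OS overhead mean the memory actually available to the game will differ slightly.

```python
import math


def blocks_to_reserve(physical_gb: float, target_gb: float, block_mb: int = 512) -> int:
    """Number of fixed-size VRAM blocks to hold so that about target_gb stays free.

    block_mb is a hypothetical allocation size, not a documented memtestcl
    setting; driver and OS overhead are ignored in this sketch.
    """
    if not 0 < target_gb <= physical_gb:
        raise ValueError("target must be positive and not exceed physical VRAM")
    reserve_mb = (physical_gb - target_gb) * 1024
    # Round up so that no more than target_gb remains available
    return math.ceil(reserve_mb / block_mb)
```

For the Radeon Pro W7800 (32 GB) and a 10 GB target this yields 44 blocks of 512 MB, for the RTX 6000 Ada (48 GB) 76 blocks; these can be spread over several command-line windows.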

By the way, the Ryzen 9 7950X poses a rather unexpected hurdle here, because an activated iGP is always detected as graphics device 0 (i.e. the first one), which of course can go badly wrong in some cases. You either have to select the second device manually in the program or, more sensibly, simply deactivate the iGP in the BIOS (see picture above). This also avoids runtime problems with certain programs that always detect the wrong GPU at startup. That's just in passing, but it seemed important to me to mention it (e.g. for older SolidWorks versions, which then refuse to start components due to the lack of certified graphics hardware).

The right benchmark was not easy to find. A normal savegame is not really 100% reproducible if you want to discuss variances. In the end I decided on a cutscene, which is also long enough for statistical evaluation. The “Adios” sequence from “Tommy’s Dam” offers a whopping 163 seconds of absolutely consistent content with many camera cuts, changing perspectives and lots of forest, landscape and lighting effects. By comparison, savegames or sequences with city panoramas were less demanding and less varied. Both cards were fully warmed up in the menu, and only the second run of the sequence was recorded as the benchmark. And by the way: shader pre-caching was of course taken into account.

First measurement and reason for this article

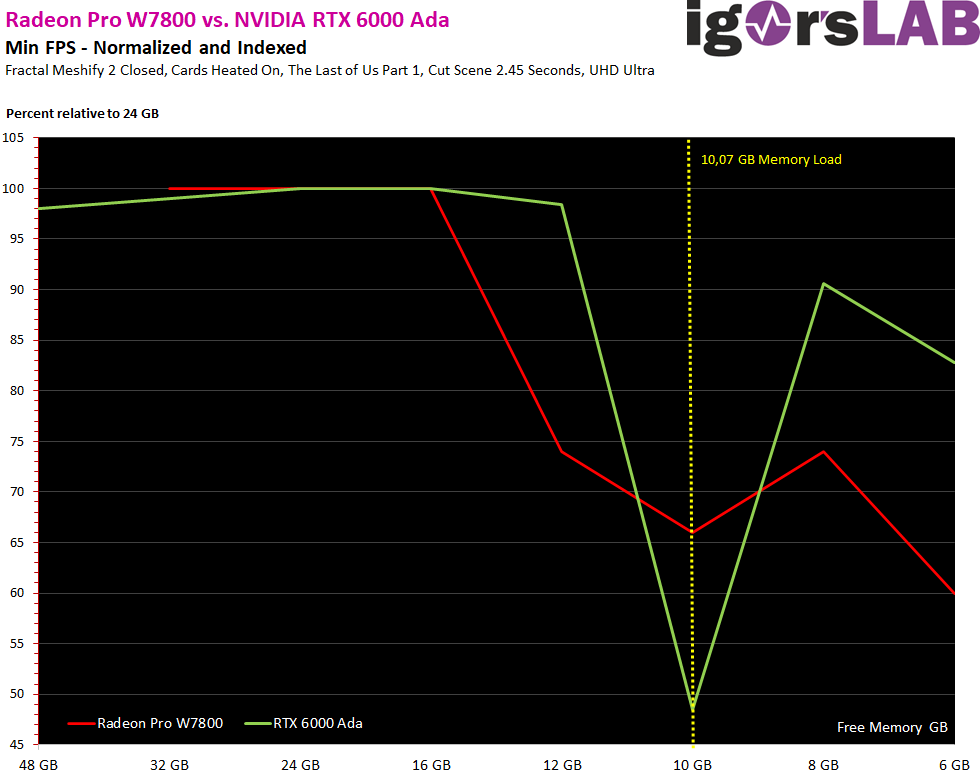

I'll start with a cumulative result for the P1 Low (Min FPS) and work my way toward reality with the detailed measurements later, which are highly interesting and even more exciting than this first, rather superficial summary. Since the frame rates of the two cards differ, I used a percentage evaluation for this first direct comparison to make them comparable. An interesting side note: the RTX 6000 Ada even gets a tad slower above 24 GB. I therefore set the 100% reference point to each card's measured value at 24 GB of memory. I could also have used the slightly faster Radeon Pro W7900, but that would not have changed anything about the actual result and findings. By the way, I will still test ALL workstation cards in a special article with what they were actually made for. The preparation effort is quite high, though, and because my tests are still coming back positive, I currently cannot borrow the full-program licenses for the benchmarks from any company over the weekend.
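
The percentage evaluation amounts to dividing every value by the card's own value at 24 GB. A minimal sketch, assuming the P1 Low results are stored per memory configuration in GB; the function name and any input numbers are hypothetical placeholders, not measured results.

```python
def normalize_to_reference(p1_low_by_gb: dict[float, float],
                           reference_gb: float = 24.0) -> dict[float, float]:
    """Express each P1 Low value as a percentage of the value at reference_gb."""
    reference = p1_low_by_gb[reference_gb]
    if reference <= 0:
        raise ValueError("reference value must be positive")
    # Sorted by memory size so the curve reads from small to large capacity
    return {gb: 100.0 * fps / reference for gb, fps in sorted(p1_low_by_gb.items())}
```

With placeholder values of 50 FPS at 24 GB and 20 FPS at 10 GB, the 10 GB point becomes 40%. Because each card is normalized to its own 24 GB value, the different absolute performance of the two cards drops out of the comparison.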

The P1 Low used here (i.e. the new Min FPS) shows very impressively that the AMD card already drops sharply 2 GB BEFORE its memory capacity is reached and hits its low at 10.07 GB, while the NVIDIA card only collapses at 10 GB, but then all the harder. You can also see that NVIDIA's memory management always fails exactly when memory demand and memory capacity are practically identical. Then it's completely over. The game only runs properly again at 8 GB, and even with a measly 6 GB everything is still smoother and more playable than at 10 GB. However, you will then also see streaming errors: textures remain muddy, and every now and then something is missing entirely or pops in late. Flickering included. The drop is smaller with the AMD card, but its FPS curve never really recovers afterwards. Of course you can debate which is more pleasant, but with the NVIDIA card you could at least still play. A bit uglier, but at least it worked.
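
The article does not spell out how P1 Low is computed. One common definition, sketched here in Python as an assumption, takes the 99th-percentile frame time (nearest-rank method) and converts it to FPS; other tools instead average the slowest 1% of frames, which gives somewhat different numbers.

```python
def p1_low_fps(frametimes_ms: list[float]) -> float:
    """Return P1 Low in FPS from per-frame times in milliseconds.

    Assumed definition: the 99th-percentile frame time (nearest-rank method),
    converted to frames per second, so at least 99 % of frames are this fast.
    """
    if not frametimes_ms:
        raise ValueError("no frames recorded")
    ordered = sorted(frametimes_ms)
    # Nearest-rank position ceil(0.99 * n), computed with integer arithmetic
    rank = (99 * len(ordered) + 99) // 100
    return 1000.0 / ordered[rank - 1]
```

With 99 frames at 10 ms and a single 50 ms spike, this returns 100 FPS: an isolated hitch does not show up in the P1 Low at all, which is one reason the frame times themselves are worth a closer look.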

Of course, a single game is not 100% representative, but you can observe these effects in other games as well. In Far Cry 6 the whole thing is even more blatant (the RTX 6000 Ada manages a measly 12 FPS at 10 GB), but unfortunately the benchmarks from all my savegames are never really consistent (nor is the integrated benchmark). Similar results can be seen in other games such as Horizon Zero Dawn, where you already have to upscale the rendering resolution to fill the memory. The bottom line is always the same. We'll see how the individual frame-time curves look; I've created lots of individual metrics for this, which you should really take a close look at. After all, the percentiles are far too coarse, so you really need to look at the variances and frame times to understand where AMD and NVIDIA really differ.
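
A few such variance metrics can be sketched as follows, assuming a list of per-frame times in milliseconds (e.g. exported from a frame-time capture tool). The function and metric names are hypothetical and not necessarily the ones used in the detailed measurements.

```python
import statistics


def frametime_metrics(frametimes_ms: list[float]) -> dict[str, float]:
    """Summarize frame-time consistency for one benchmark run (times in ms)."""
    if not frametimes_ms:
        raise ValueError("no frames recorded")
    mean_ms = statistics.fmean(frametimes_ms)
    # Population standard deviation: spread of frame times around the mean
    stdev_ms = statistics.pstdev(frametimes_ms)
    # Frame-to-frame changes: large jumps typically show up as visible stutter
    deltas = [abs(b - a) for a, b in zip(frametimes_ms, frametimes_ms[1:])]
    return {
        "avg_fps": 1000.0 / mean_ms,  # frames divided by total run time
        "mean_ms": mean_ms,
        "stdev_ms": stdev_ms,
        "mean_delta_ms": statistics.fmean(deltas) if deltas else 0.0,
        "max_delta_ms": max(deltas, default=0.0),
    }
```

Two runs with the same average FPS can differ sharply in `stdev_ms` and `max_delta_ms`, which is exactly the kind of difference a single percentile hides.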
