Following the new AMD cards already tested extensively in the recently published launch article, the Sapphire Radeon RX 6950 XT Nitro+ Pure has now found its way into my lab as another board partner card. The continued unavailability of these particular graphics cards is a shame, and all the more annoying because they are really good, competitive products that deserve to reach the many willing buyers. So today I’m testing a product that stands out pleasantly from the crowd and is unfortunately still stuck in delivery to Germany.

The card carries the name (Attention! Officially prescribed according to AMD’s nomenclature) “NITRO+ AMD Radeon™ RX 6950 XT PURE Gaming Graphics Card with 16GB GDDR6, AMD RDNA™ 2”. For the sake of simplicity I will again call it the Sapphire RX 6950 XT Nitro+ Pure in this article, as otherwise the charts and legends would explode. It is a thoroughly interesting RDNA2 specimen that you have to listen for twice (or three times) to hear at all. Sapphire has also not set the factory clock too high, which leaves plenty of room for your own OC experiments. I will show what is possible in a water cooling article.

Like all RX 6000 models, this graphics card can handle the new AV1 video codec. The series also supports DirectX 12 Ultimate for the first time and thus DirectX Raytracing (DXR). AMD FidelityFX is a further feature that should give developers more leeway when selecting effects. Variable Rate Shading (VRS) is also included, which can save an immense amount of computing power by smartly reducing the rendering quality of image areas that are not in the player’s focus anyway. So much for the feature set of all new Radeon cards.

With an MSRP of 1399 Euros, you pay a certain premium that you can accept or not. Everyone has to work that out for themselves. But the Snow White look and the unicorn feeling are already included. After all, that’s something.

Optics and haptics

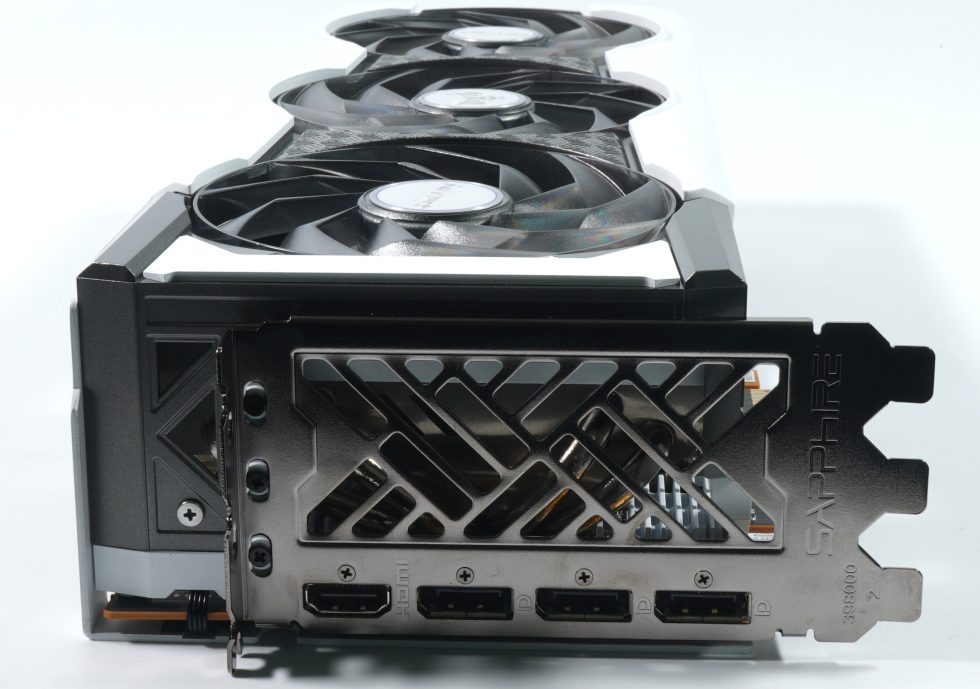

The Sapphire RX 6950 XT Nitro+ Pure weighs 1571 grams, which is of course heavier than the RX 6900 XT reference card, but slightly lighter than the MSI card from the launch article. It is also longer at a full 32.5 cm, a stately 13 cm high (installation height from the PEG slot) and a brutish 6.4 cm thick (3.5-slot design), with the backplate and PCB adding a total of four more millimeters.

The white body is made of light metal. The Sapphire lettering and the light band on the top are LED illuminated, and the backplate is also adorned with an illuminated logo. Everything else is likewise made of light metal instead of plastic, which makes a big difference haptically.

The graphics brick, including its illumination, is powered via two standard 8-pin sockets and one 6-pin socket, so everything is as familiar. We can also see the vertical orientation of the cooling fins and the board reinforcement in the form of a backplate and a massive frame. More about that in a moment in the teardown.

The slot bracket is closed and carries one HDMI 2.1 port and three current DisplayPort connectors. The USB Type-C port, however, is missing. More about the construction, the cooler and the assembly can be found on the next page in the teardown.

Technology

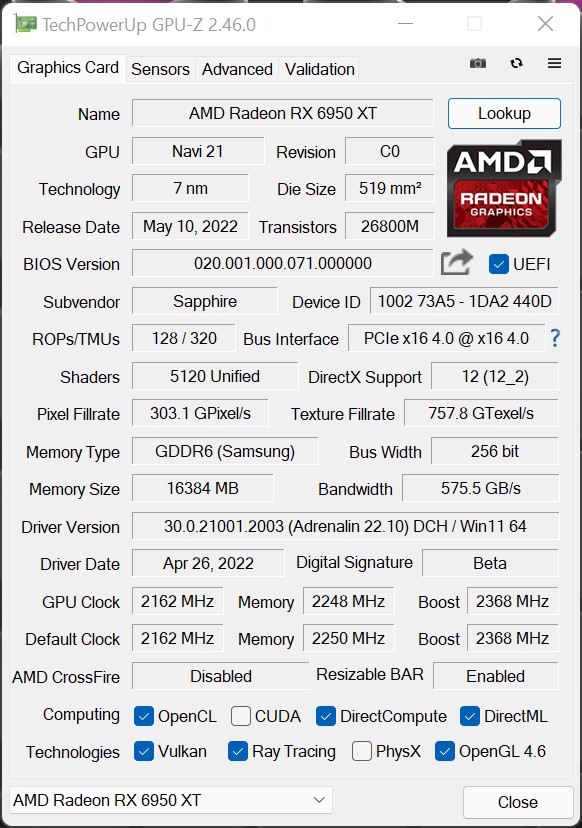

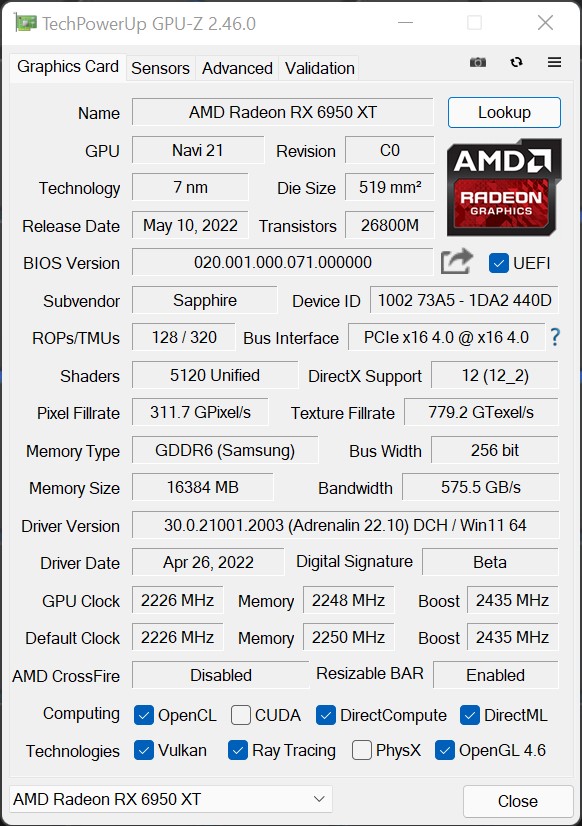

With its 80 compute units (CU), the card has a total of 5120 shaders. The base clock is specified at 2162 MHz, while the boost clock is 2368 MHz in the Silent BIOS and 2435 MHz in the Performance BIOS, which is also reached in practice. The card uses 16 GB of GDDR6 at 18 Gbps, made up of 8 modules of 2 GB each, on a 256-bit memory interface, supported by the 128 MB Infinity Cache that is supposed to solve the bandwidth problem. The card also has a switchable dual BIOS, which is nice. With the BIOS DIP switch in the middle position, you can switch between the two on the fly via Sapphire’s TriXX software.
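The key figures above can be cross-checked with a little arithmetic. The following is a minimal sketch in Python, not a vendor tool: the function names are made up for illustration, and the 64 shaders per CU is simply what follows from 5120 shaders on 80 CUs.

```python
# Figures taken from the text above.
COMPUTE_UNITS = 80
SHADERS_PER_CU = 64        # follows from 5120 shaders / 80 CUs
BUS_WIDTH_BITS = 256
DATA_RATE_GBPS = 18        # per pin
MODULES = 8
MODULE_SIZE_GB = 2


def shader_count(compute_units, shaders_per_cu=SHADERS_PER_CU):
    """Total number of shaders for a given CU count."""
    return compute_units * shaders_per_cu


def memory_bandwidth_gbs(bus_width_bits, data_rate_gbps):
    """Peak theoretical bandwidth in GB/s: pins * Gbit/s per pin / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


print(shader_count(COMPUTE_UNITS))                            # 5120
print(MODULES * MODULE_SIZE_GB)                               # 16
print(memory_bandwidth_gbs(BUS_WIDTH_BITS, DATA_RATE_GBPS))   # 576.0
```

The 576 GB/s is a purely theoretical peak of the GDDR6 interface and does not include any contribution from the Infinity Cache.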

Other data also differs between the two BIOS versions, for example the TGP, i.e. the maximum power for GPU, SoC and memory. The Performance version offers up to 325 watts TGP instead of 303.
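To put the two BIOS settings into proportion, the nominal values can be compared directly. This is only a small sketch based on the specified limits quoted in the text, not on measured values; the dictionary layout and the helper name are assumptions for illustration.

```python
# Nominal values from the text (specified limits, not measurements).
BIOS_MODES = {
    "Silent": {"boost_mhz": 2368, "tgp_w": 303},
    "Performance": {"boost_mhz": 2435, "tgp_w": 325},
}


def percent_increase(old, new):
    """Relative increase from old to new in percent."""
    return (new - old) / old * 100.0


silent = BIOS_MODES["Silent"]
performance = BIOS_MODES["Performance"]

clock_gain = percent_increase(silent["boost_mhz"], performance["boost_mhz"])
power_gain = percent_increase(silent["tgp_w"], performance["tgp_w"])

print(f"Boost clock: +{clock_gain:.1f} %")   # Boost clock: +2.8 %
print(f"TGP limit:   +{power_gain:.1f} %")   # TGP limit:   +7.3 %
```

On paper, the Performance BIOS therefore allows roughly 7 percent more power for not quite 3 percent more boost clock. What actually arrives in games is shown by the benchmarks and power measurements.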

Raytracing / DXR

Since the presentation of the Radeon RX 6000 cards at the latest, it has been clear that AMD also supports ray tracing. Here, AMD takes a different path than NVIDIA and implements a so-called “ray accelerator” per compute unit (CU). Since the Radeon RX 6800 XT has a total of 72 CUs, this also results in 72 such accelerators, while the smaller Radeon RX 6800 still has 60. A GeForce RTX 3080 has 68 RT cores, which is nominally fewer for now. When comparing the smaller cards, the score is 60 for the RX 6800 and 46 for the GeForce RTX 3070. However, RT cores are organized differently, and we will have to wait and see what quantity can do against specialization here. In the end, it is an apples and oranges comparison.

But what has AMD come up with here? Each of these accelerators can calculate up to 4 ray/box intersections or a single ray/triangle intersection per cycle. In this way, the intersection points of the rays with the scene geometry are calculated (using the bounding volume hierarchy, BVH) and pre-sorted, and then this information is returned to the shaders for further processing within the scene, or the final shading result is output. However, NVIDIA’s RT cores seem to be much more complex, as I already explained in detail during the Turing launch. What counts is the result alone, and that’s exactly what we have suitable benchmarks for.
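To illustrate what a ray/box intersection test means in principle, here is a minimal software sketch in Python. It uses the generic, well-known slab method for axis-aligned boxes and says nothing about how AMD implements the test in hardware; the function names and the example boxes are assumptions for illustration only.

```python
import math


def ray_box_intersect(origin, direction, box_min, box_max):
    """Slab test: True if the ray origin + t * direction (t >= 0) hits the box."""
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray runs parallel to this pair of planes: it must start between them.
            if o < lo or o > hi:
                return False
            continue
        t0 = (lo - o) / d
        t1 = (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near = max(t_near, t0)
        t_far = min(t_far, t1)
        if t_near > t_far:
            return False
    return True


def intersect_four_boxes(origin, direction, boxes):
    """Test one ray against up to four child boxes of a BVH node."""
    return [ray_box_intersect(origin, direction, lo, hi) for lo, hi in boxes[:4]]


# Hypothetical example: a ray along the x axis and four child boxes.
boxes = [
    ((1.0, -1.0, -1.0), (2.0, 1.0, 1.0)),    # directly in the ray's path
    ((1.0, 2.0, -1.0), (2.0, 3.0, 1.0)),     # offset in y, missed
    ((-3.0, -1.0, -1.0), (-2.0, 1.0, 1.0)),  # behind the ray origin
    ((0.5, -0.5, -0.5), (5.0, 0.5, 0.5)),    # in the ray's path
]
hits = intersect_four_boxes((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), boxes)
print(hits)  # [True, False, False, True]
```

In a BVH traversal, only the boxes that were hit are followed further down the tree until the actual triangles are reached, which is where the ray/triangle test takes over.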

Smart Access Memory (SAM)

AMD already showed SAM, i.e. Smart Access Memory, at the presentation of the new Radeon cards. I have enabled this feature today in addition to the normal benchmarks, which also makes a direct comparison possible. But SAM is actually nothing new, just more nicely packaged verbally. It is nothing more than the clever handling of the Base Address Register (BAR), and exactly this support must be activated on the platform side. Resizable PCI BARs (see also PCI SIG from 4/24/2008) have played an important role in modern AMD graphics hardware for quite some time, since the actual PCI BARs are normally limited to 256 MB, while the new Radeon graphics cards now offer up to 16 GB VRAM.

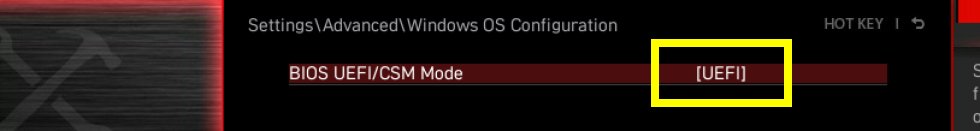

The consequence is that only a fraction of the VRAM is directly accessible to the CPU, which, without SAM, requires a whole series of workarounds in the driver stack. Of course, this always costs performance and should therefore be avoided. This is exactly where AMD starts with SAM. The approach is not new, but it has to be implemented cleanly in the UEFI and also activated there afterwards. This is only possible when the system is running in UEFI mode and CSM/Legacy is disabled.
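How small this window is can be shown with a short sketch. On Linux, each PCI device exposes its address regions in a sysfs file named `resource`, one line per region with start address, end address and flags in hexadecimal. The Python code below parses text in that format; the sample lines are a made-up example and not a dump from the tested card, and the function names are assumptions for illustration.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3


def bar_sizes(resource_text):
    """Return (region index, size in bytes) for every used region."""
    sizes = []
    for index, line in enumerate(resource_text.strip().splitlines()):
        start, end, _flags = (int(field, 16) for field in line.split())
        if start == 0 and end == 0:
            continue  # unused region
        sizes.append((index, end - start + 1))
    return sizes


def visible_fraction(bar_bytes, vram_bytes):
    """Share of the VRAM that the CPU can address directly through the BAR."""
    return min(bar_bytes, vram_bytes) / vram_bytes


# Hypothetical sample in the sysfs "resource" format (start, end, flags).
SAMPLE = """\
0x00000000e0000000 0x00000000efffffff 0x000000000014220c
0x0000000000000000 0x0000000000000000 0x0000000000000000
0x00000000f0000000 0x00000000f01fffff 0x000000000014220c
"""

for index, size in bar_sizes(SAMPLE):
    print(f"Region {index}: {size // MIB} MiB")
# Region 0: 256 MiB
# Region 2: 2 MiB

print(f"{visible_fraction(256 * MIB, 16 * GIB):.2%}")  # 1.56%
```

With a classic 256 MB BAR, the CPU sees only 1/64 of a 16 GB card at any one time. With a resized BAR that covers the whole VRAM, the fraction becomes 100 percent.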

CSM stands for Compatibility Support Module. It exists exclusively under UEFI and ensures that older hardware and software also work with UEFI. The CSM is always helpful when not all hardware components are compatible with UEFI. Some older operating systems, as well as the 32-bit versions of Windows, also cannot be installed on UEFI hardware. However, exactly this compatibility setting often prevents the clean UEFI-mode Windows installation that the new AMD components need.
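Whether an operating system was actually booted in UEFI mode can be checked quickly. The following Python sketch covers Linux only, where the kernel creates the directory `/sys/firmware/efi` after a UEFI boot. It does not show whether the CSM is switched off in the firmware setup, and the function name is an assumption for illustration.

```python
import os


def booted_in_uefi(efi_dir="/sys/firmware/efi"):
    """True if the running Linux system was started via UEFI.

    The directory only exists after a UEFI boot. A legacy/CSM boot
    leaves it absent.
    """
    return os.path.isdir(efi_dir)


if __name__ == "__main__":
    mode = "UEFI" if booted_in_uefi() else "Legacy/CSM (or not Linux)"
    print(f"Boot mode: {mode}")
```

Under Windows, the same information is shown as "BIOS Mode" in the System Information tool (msinfo32).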

Test system and evaluation software

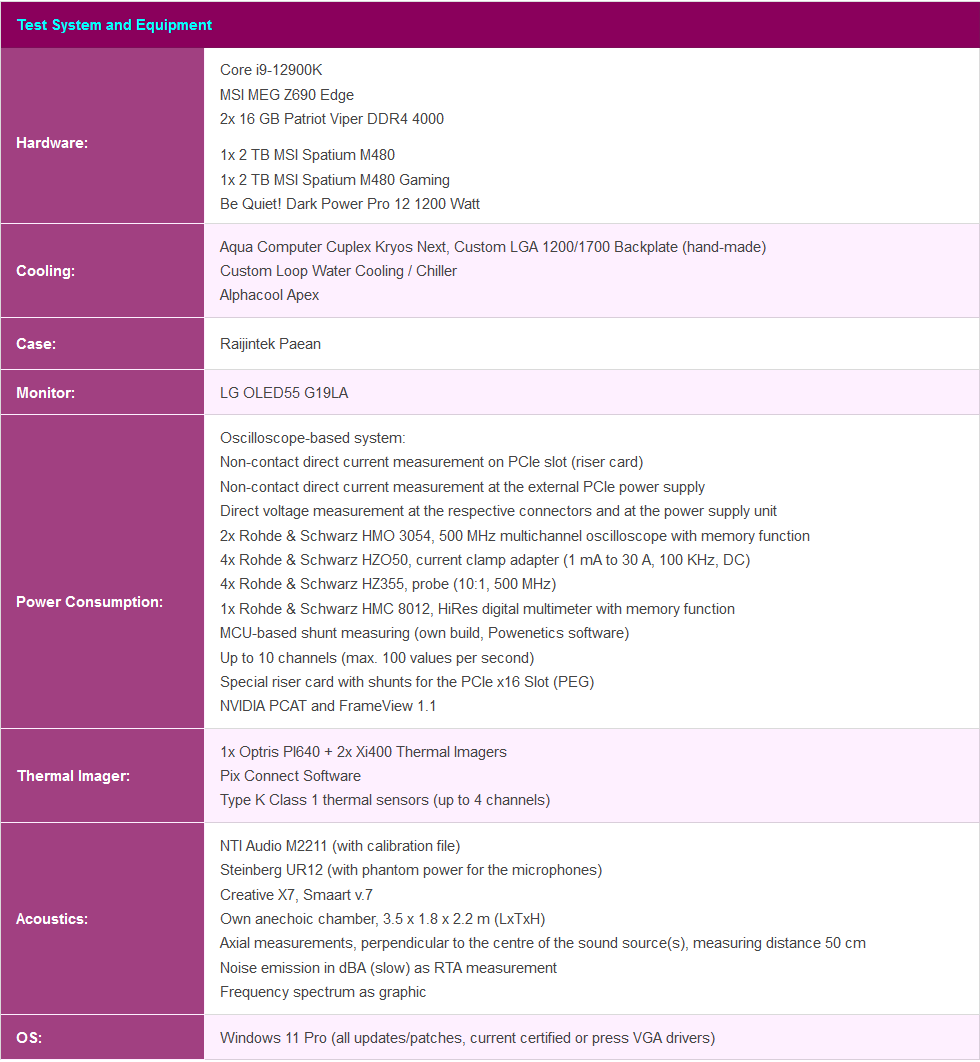

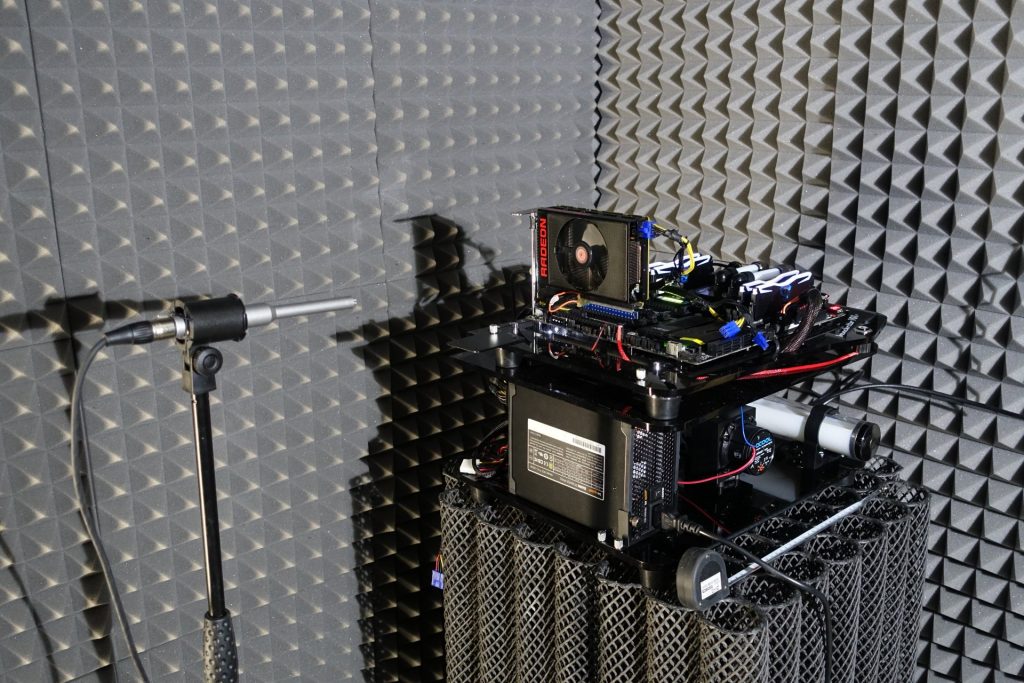

The benchmark system is new and is no longer in the lab, but back in the editorial room. For direct logging during all games and applications, I use NVIDIA’s PCAT and my own development with Powenetics’ software, which increases the comfort immensely. The measurement of power consumption and other things continues to be carried out in the air-conditioned laboratory on a redundant test system that is identical in every detail, but then using high-resolution oscilloscope technology…

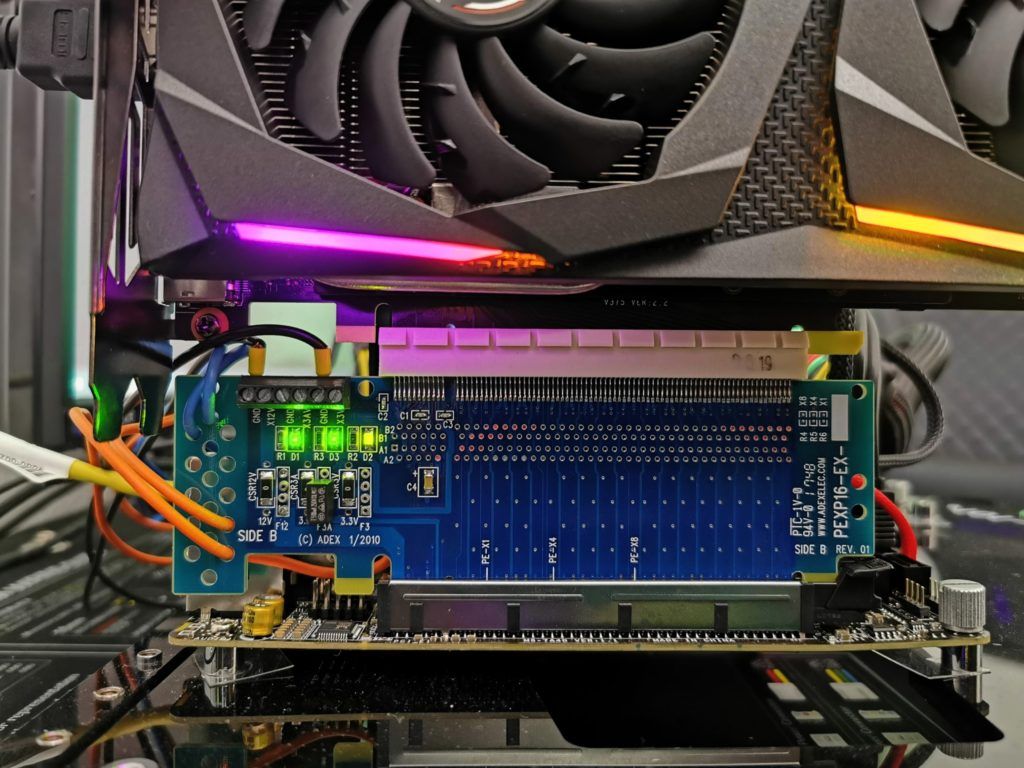

…and the self-built MCU-based measurement setup for motherboards and graphics cards (pictures below). At the end, the thermographic infrared images are also created in the air-conditioned room with a high-resolution industrial camera. The audio measurements are done outside in my chamber (room within a room).

I have also summarized the individual components of the test system in a table:

