With the Radeon RX 6700 XT presented today, AMD rounds off the Big Navi portfolio at the lower end for the time being. Board partner cards may be shown from tomorrow, and products for that are already in the pipeline, but more on that at the appropriate time. The Navi 22 chip in the RX 6700 XT is new, and we’ll take a closer look at it in a moment. In general, however, graphics cards are currently a sensitive topic, so technical and emotional considerations have to be kept strictly apart. So let’s please leave miners, scalpers and the at times megalomaniac retail sector aside for now and focus purely on the technology. I’ll write about the rest at the end, since there is no way around it.

The Radeon RX 6700 XT as a reference design

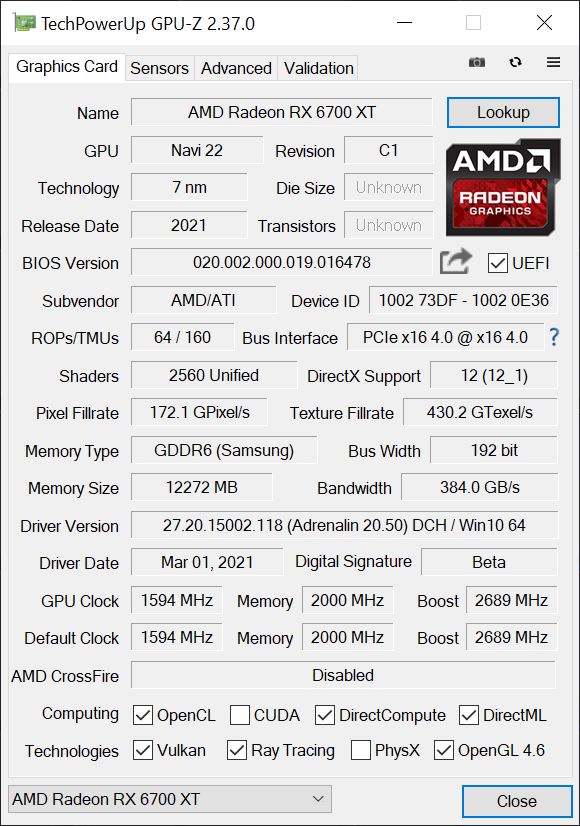

With its 40 compute units (CUs), the RX 6700 XT has 2560 shaders, making it effectively half an RX 6900 XT. While the base clock of the RX 6800 is specified at 1815 MHz and the boost at 2105 MHz, the RX 6700 XT manages 1594 to 2689 MHz. The card relies on 12 GB of GDDR6 at 16 Gbps, made up of six 2 GB modules. Also on board are the 192-bit memory interface and the 96 MB Infinity Cache, which is supposed to solve the bandwidth problem.

The RX 6700 XT weighs just over 900 grams and is 26.7 cm long, 12 cm high (11.5 cm installation height from the PEG slot) and 3.8 cm thick (dual-slot design), with the backplate and the PCB adding a total of four more millimeters. The slot bracket is closed and carries one HDMI 2.1 port and three current DisplayPort connectors; the USB Type-C port has been omitted. The body is made of light metal, the Radeon lettering is illuminated, and power is supplied via one 8-pin and one 6-pin socket. More about this in the teardown on the next page.

The screenshot from GPU-Z then provides information about the remaining data of the card:

Again, I have a table for all the statisticians among you before things really get going on the next page.

| Name | RX 6900 XT | RX 6800 XT | RX 6800 | RX 6700 XT |
|---|---|---|---|---|
| Chip | Navi 21 XTX | Navi 21 XT | Navi 21 XL | Navi 22 XT |
| Chip Size | 520 mm² | 520 mm² | 520 mm² | n/a |
| Shader Clusters (CUs) | 80 | 72 | 60 | 40 |
| Shaders/TMUs/ROPs | 5120/320/128 | 4608/288/128 | 3840/240/96 | 2560/160/64 |
| RT Units | 80 | 72 | 60 | 40 |
| Infinity Cache MB | 128 | 128 | 128 | 96 |
| GPU Game Clock MHz | 2015 | 2015 | 1815 | 2 |
| FP16 TFLOPS | 41.27 | 37.14 | 27.88 | 23.04 |
| FP32/FP64 TFLOPS | 20.63/1.29 | 18.57/1.16 | 13.94/0.87 | 11.52/0.72 |
| Fillrate Gtex/Gpix per sec. | 644.8/257.9 | 580.3/257.9 | 435.6/174.2 | 432/144 |
| L2 Cache MB | 4 | 4 | 4 | 3 |
| Memory Interface | 256-bit | 256-bit | 256-bit | 192-bit |
| Memory Speed Gbps | 16 | 16 | 16 | 16 |
| Memory | GDDR6 | GDDR6 | GDDR6 | GDDR6 |
| Bandwidth GB/s | 512 | 512 | 512 | 384 |
| Memory Capacity GB | 16 | 16 | 16 | 12 |
| PCIe Connectors | 2× 8-pin | 2× 8-pin | 2× 8-pin | 1× 8-pin + 1× 6-pin |
| TBP Watts | 300 | 300 | 250 | 230 |
| Launch Price (MSRP) | 999 Euro | 649 Euro | 579 Euro | n/a |
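
For those who like to check such figures, several rows of the table can be reproduced from the others with simple arithmetic. The following Python sketch assumes the usual rules of thumb: two FP32 operations per shader per clock (one fused multiply-add), one texel per TMU and one pixel per ROP per clock, and bandwidth as bus width times per-pin data rate divided by eight. The function names are made up for this illustration, and the results are theoretical peak values, not measurements.

```python
# Back-of-the-envelope spec arithmetic; input values are taken from the table.
# The helper names are hypothetical and exist only for this illustration.

def fp32_tflops(shaders, clock_ghz):
    """Peak FP32 throughput, assuming 2 FLOPs (one FMA) per shader per clock."""
    return shaders * 2 * clock_ghz / 1000.0


def fillrate_g_per_s(units, clock_ghz):
    """Peak texel (TMUs) or pixel (ROPs) rate in G/s, assuming one per unit per clock."""
    return units * clock_ghz


def bandwidth_gb_per_s(bus_width_bits, speed_gbps):
    """Peak memory bandwidth: bus width in bits times per-pin rate, in bytes."""
    return bus_width_bits * speed_gbps / 8


# RX 6900 XT at its 2015 MHz game clock
print(round(fp32_tflops(5120, 2.015), 2))       # 20.63
print(round(fillrate_g_per_s(320, 2.015), 1))   # 644.8
print(round(fillrate_g_per_s(128, 2.015), 1))   # 257.9

# Memory bandwidth: 256-bit cards versus the 192-bit RX 6700 XT
print(bandwidth_gb_per_s(256, 16))              # 512.0
print(bandwidth_gb_per_s(192, 16))              # 384.0
```

The 128 GB/s gap in raw bandwidth between the two bus widths is exactly what the Infinity Cache is meant to cushion.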

Raytracing / DXR

Since the presentation of the new Radeon cards at the latest, it has been clear that AMD also supports ray tracing. AMD takes a path that clearly differs from NVIDIA’s and implements one so-called “Ray Accelerator” per compute unit (CU). Since the Radeon RX 6700 XT has a total of 40 CUs, this also results in 40 such accelerators. A GeForce RTX 3070 comes with 46 RT cores. However, RT cores are organized differently, and we will have to wait and see how quantity fares against specialization here. So in the end, it’s an apples-and-oranges comparison for now.

But what has AMD come up with here? Each of these accelerators can compute up to four ray/box intersections or a single ray/triangle intersection per cycle. This way, the intersections of the rays with the scene geometry are calculated (along the lines of a bounding volume hierarchy, BVH) and pre-sorted, and this information is then returned to the shaders for further processing within the scene, or the final shading result is output. NVIDIA’s RT cores, however, seem to take a much more complex approach, as I explained in detail at the Turing launch. What counts is the result alone, and that’s exactly what we have suitable benchmarks for.
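
To make the ray/box intersection test a bit more tangible, here is a minimal software sketch of the classic "slab" method for a ray against an axis-aligned bounding box. To be clear, this is not AMD's hardware implementation, whose details are not public. Testing four boxes in one call merely mirrors the "up to four per cycle" figure, on the assumption that these are the child boxes of one BVH node. All function names are invented for this example.

```python
import math


def ray_box_hit(origin, direction, box_min, box_max):
    """Slab test: does the ray origin + t * direction (t >= 0) hit the box?"""
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray runs parallel to this pair of planes: it must start between them.
            if o < lo or o > hi:
                return False
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        # Shrink the interval in which the ray is inside all slabs seen so far.
        t_near = max(t_near, t0)
        t_far = min(t_far, t1)
        if t_near > t_far:
            return False
    return True


def intersect_children(origin, direction, boxes):
    """Test one ray against the (assumed) four child boxes of a BVH node."""
    return [ray_box_hit(origin, direction, lo, hi) for lo, hi in boxes]


# A ray along +x from the origin against four example boxes
boxes = [
    ((1, -1, -1), (2, 1, 1)),        # straight ahead
    ((1, 2, 2), (2, 3, 3)),          # off to the side
    ((-3, -1, -1), (-2, 1, 1)),      # behind the ray origin
    ((5, -0.5, -0.5), (6, 0.5, 0.5)),  # further ahead
]
print(intersect_children((0, 0, 0), (1, 0, 0), boxes))
# [True, False, False, True]
```

Only the boxes that report a hit need to be descended into, which is how a BVH avoids testing the ray against every triangle in the scene.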

Smart Access Memory (SAM)

AMD already showed SAM, i.e. Smart Access Memory, at the presentation of the new Radeon cards. I enabled the feature today in addition to the normal benchmarks, which also allows a direct comparison. Strictly speaking, though, SAM is nothing new, just more nicely packaged verbally. It is nothing other than clever handling of the Base Address Register (BAR), and exactly this support must be enabled in the underlying platform. On modern AMD graphics hardware, resizable PCI BARs (see also the PCI-SIG document from 4/24/2008) have played an important role for quite some time, since the actual PCI BARs are normally limited to 256 MB, while the new Radeon graphics cards now carry up to 16 GB of VRAM (here it’s 12 GB).

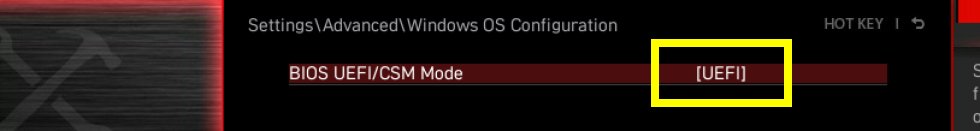

The result is that only a fraction of the VRAM is directly accessible to the CPU, which without SAM requires a whole series of workarounds in the driver stack. Of course, this always costs performance and should therefore be avoided. That’s where AMD comes in with SAM. The technique is not new, but it must be implemented cleanly in the UEFI and then also be enabled. This only works if the system is running in UEFI mode and CSM/Legacy is disabled.
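
How small that fraction is can be illustrated with a few lines of Python. The sketch below parses the text of a Linux sysfs `resource` file (found under `/sys/bus/pci/devices/<address>/resource`), in which each line holds a region's start address, end address and flags in hexadecimal. Note the assumptions: the test system in this review runs Windows, so this is a side illustration only, and the sample addresses are invented rather than read from the card.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3


def bar_sizes(resource_text):
    """Return the size in bytes of every populated region in a sysfs 'resource' file."""
    sizes = []
    for line in resource_text.splitlines():
        fields = line.split()
        if len(fields) != 3:
            continue
        start, end, _flags = (int(field, 16) for field in fields)
        if end > start:  # unused regions are listed as all zeros
            sizes.append(end - start + 1)
    return sizes


def visible_share(bar_bytes, vram_bytes):
    """Fraction of the VRAM that the CPU can address through one BAR window."""
    return min(bar_bytes, vram_bytes) / vram_bytes


# Invented sample: a 256 MiB aperture, a 2 MiB region and an unused slot
SAMPLE = """\
0x00000000e0000000 0x00000000efffffff 0x000000000014220c
0x00000000f0000000 0x00000000f01fffff 0x000000000014220c
0x0000000000000000 0x0000000000000000 0x0000000000000000
"""

sizes = bar_sizes(SAMPLE)
print([size // MIB for size in sizes])                   # [256, 2]
print(round(visible_share(sizes[0], 12 * GIB) * 100, 2))  # 2.08
```

With a classic 256 MB window, roughly 2 percent of a 12 GB card is directly visible to the CPU at any one time. With a resized BAR that covers the whole VRAM, the same calculation yields 100 percent.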

CSM stands for Compatibility Support Module. It exists only in UEFI and ensures that older hardware and software work with UEFI. The CSM is helpful whenever not all hardware components are UEFI-compatible. Some older operating systems and the 32-bit versions of Windows also cannot be installed on UEFI hardware. However, it is precisely this compatibility setting that often prevents the clean Windows installation required for the new AMD components.

Test system and evaluation software

The benchmark system is new and is no longer in the lab, but back in the editorial office. I now also rely on PCIe 4.0, a matching X570 motherboard in the form of an MSI MEG X570 Godlike, and a hand-picked Ryzen 9 5950X that is water-cooled and heavily overclocked. Added to this are fast RAM and several fast NVMe SSDs. For direct logging during all games and applications, I use NVIDIA’s PCAT, which adds immensely to the convenience.

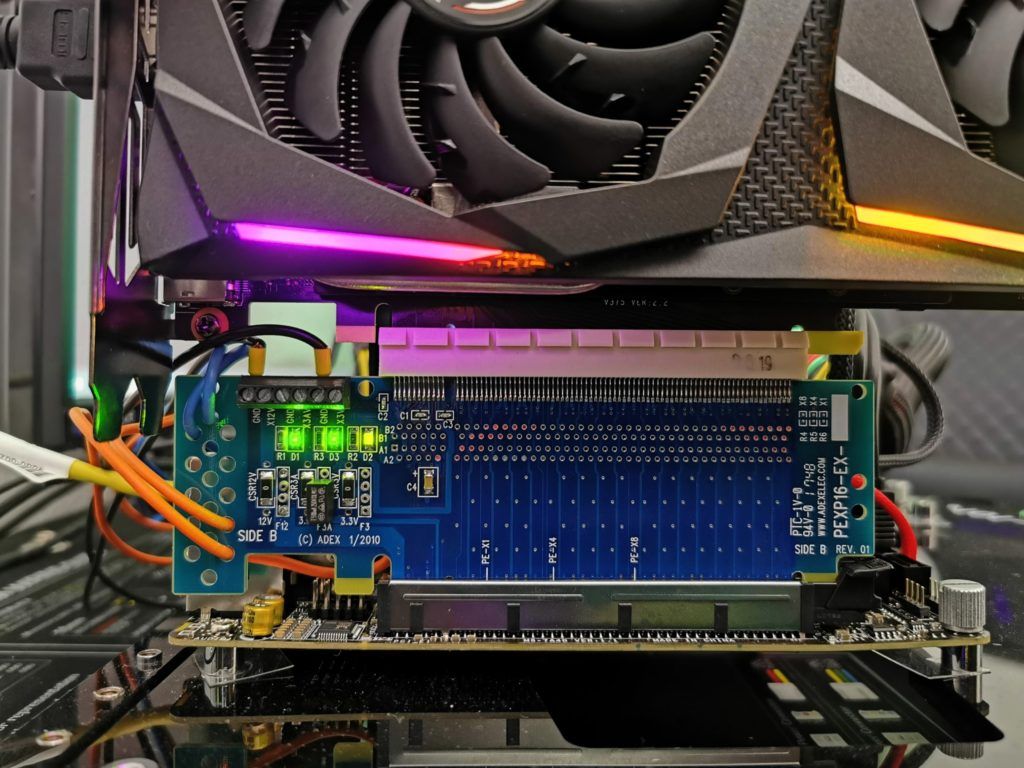

Power consumption and other values are measured here in the special laboratory on a redundant test system that is identical down to the last detail, using two parallel methods: high-resolution oscilloscope technology…

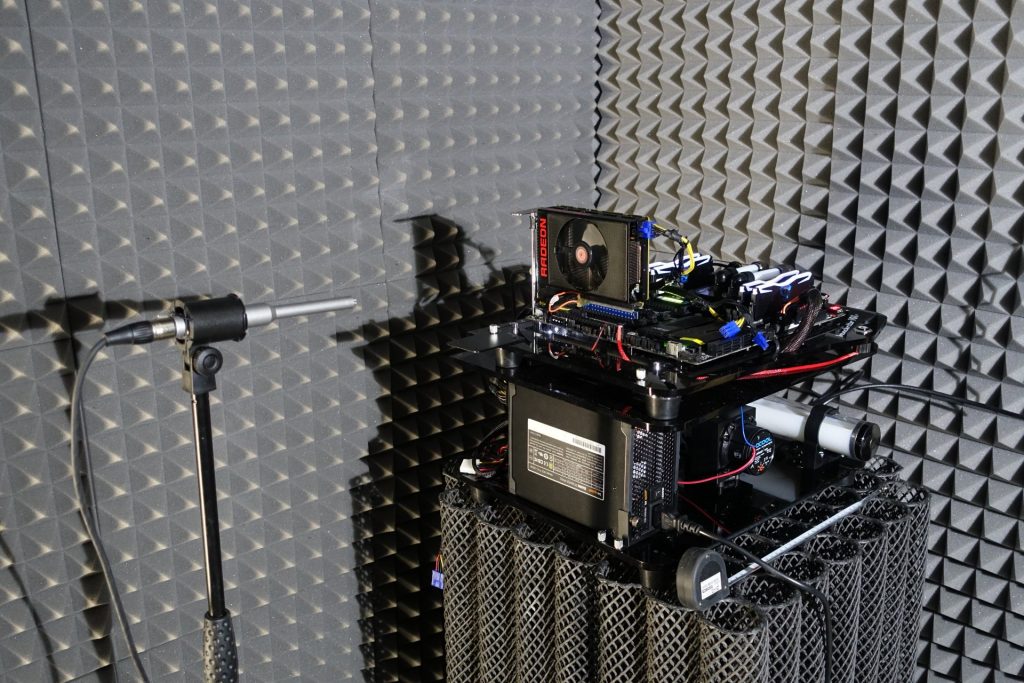

…and a self-built MCU-based measurement setup for motherboards and graphics cards (pictures below). In the same air-conditioned room, the thermographic infrared images are also taken with a high-resolution industrial camera. The audio measurements are done outside the lab in my chamber.

I have also summarized the individual components of the test system in a table:

| Test System and Equipment | |
|---|---|
| Hardware: | AMD Ryzen 9 5950X OC; MSI MEG X570 Godlike; 2x 16 GB Corsair DDR4 4000 Vengeance RGB Pro; 1x 2 TB Aorus (NVMe system SSD, PCIe Gen. 4); 1x 2 TB Corsair MP400 (data); 1x Seagate FastSSD Portable USB-C; Be Quiet! Dark Power Pro 12 1200 Watt |
| Cooling: | Alphacool Eisblock XPX Pro; Alphacool Eiswolf (modified); Thermal Grizzly Kryonaut |
| Case: | Raijintek Paean |
| Monitor: | BenQ PD3220U |
| Power Consumption: | Oscilloscope-based system: non-contact direct current measurement on the PCIe slot (riser card); non-contact direct current measurement at the external PCIe power supply; direct voltage measurement at the respective connectors and at the power supply unit; 2x Rohde & Schwarz HMO 3054, 500 MHz multichannel oscilloscope with memory function; 4x Rohde & Schwarz HZO50, current clamp adapter (1 mA to 30 A, 100 kHz, DC); 4x Rohde & Schwarz HZ355, probe (10:1, 500 MHz); 1x Rohde & Schwarz HMC 8012, HiRes digital multimeter with memory function. MCU-based shunt measuring (own build, Powenetics software). NVIDIA PCAT and FrameView 1.1 |
| Thermal Imager: | 1x Optris PI640 + 2x Xi400 thermal imagers; Pix Connect software; Type K Class 1 thermal sensors (up to 4 channels) |
| Acoustics: | NTI Audio M2211 (with calibration file); Steinberg UR12 (with phantom power for the microphones); Creative X7, Smaart v.7; own anechoic chamber, 3.5 x 1.8 x 2.2 m (LxDxH); axial measurements, perpendicular to the centre of the sound source(s), measuring distance 50 cm; noise emission in dBA (slow) as RTA measurement; frequency spectrum as graphic |
| OS: | Windows 10 Pro (all updates, current certified or press drivers) |

- 1 - Introduction and Test System
- 2 - Teardown, PCB Analysis and Cooler
- 3 - Gaming Performance Full-HD
- 4 - Gaming Performance WQHD
- 5 - Details: Frames per Second (Curve)
- 6 - Details: Percentiles (Curve)
- 7 - Details: Frame Times (Bar)
- 8 - Details: Frame Times (Curves)
- 9 - Details: Variances (Bar)
- 10 - Power Consumption and Efficiency in the Individual Games
- 11 - Power Consumption: Overview & PSU Recommendation
- 12 - Temperatures and Infrared Tests
- 13 - Noise Emission
- 14 - Summary, Features and Conclusion
