Intel, fast AND economical? Yes: since the 12th generation, i.e. Alder Lake-S, the two finally go together again. Today’s text therefore effectively tells the story of a kind of turnaround, for which Intel can only be congratulated once everything has been tested objectively and put into proper context. Since getting there involved a few technical hurdles, I can’t avoid a somewhat longer preface, which will help you classify correctly what is presented today. After all, it is often not the length of the FPS bars you look at first that decides the quality and acceptance of a new product, but the sum of the many details that makes up the actual added value.

Important preface and preview of the follow-ups

If we’re honest, gaming has currently reached a stage where the graphics card, not the CPU, is almost always the limiting factor. This applies to the fast clickers with their 240 Hz monitors just as much as to more relaxed Ultra HD gamers (like me), who are already happy if their expensive 120 Hz monitor pays off at least to some extent. And then Intel comes along with a new CPU that is supposed to make everything (even) better? Sure, there are e-sports titles that still manage to push even a CPU to its limits; it’s just that this exact target group won’t usually buy many-core high-end monsters. And I’d really like to meet the guy who can still tell the difference between 500 and 600 FPS. At most, you can hear it in the coil whine of the graphics card. Bar percentages like these are mostly playing to the gallery, so for today’s first part I deliberately did not choose these (mostly Denuvo-infested) titles. And the Reviewer’s Guide, well…

Of course, there will be one or two readers for whom the differences in bar length in today’s review aren’t big enough. There’s a pretty simple explanation for that too, at least for gaming today (see below). However, if you really want to see in which real-world applications the self-proclaimed blue giant, the Core i9-12900K, can shrink the Ryzen 9 5950X to a red dwarf even outside the propaganda slides, please read part two tomorrow, which covers productive use. There were genuine moments of shock on my end, too, along with the much-loved bars showing the X factor on an open-ended torture scale. By the way, some of this is already visible today, but it took all my metrics and several gigabytes of log files to illustrate it, so it comes later. I’m just saying: 1% lows and power consumption…

First, though, let’s get to the story with the bars. What Intel listed in its own benchmarks is both wrong and right: wrong in the magnitude, yet right in the trend. Most AMD CPUs (especially those with more than 8 cores) were initially severely handicapped under Windows 11. While I initially measured a difference of more than 15 percentage points at 720p across all games, and more than 10 percentage points at 1080p, between the fastest Intel and AMD CPUs, this lead shrank considerably after the relevant Windows update (the “L3 bug”), the subsequently released AMD chipset driver and, as a consequence, a new BIOS for the motherboard (which reached me only 7 days before this launch but once again made a difference). So, for the time being, there is no sensation in the average FPS, even though Intel’s new CPUs are (almost) always in front. It would be bad if they weren’t.

Beyond the supposedly all-important performance, however, there’s a second side that I find much more important, given the ever-rising power consumption of new hardware in ecologically fragile times like these. That Intel (as a US company) doesn’t put such aspects in the foreground is logical, since energy isn’t a major cost driver there, but it’s actually a grandiose missed opportunity for some clever marketing beyond the benchmark bars. You can certainly harp on about PL1 and its very high setting, but my measurements also show that even setting it to unlimited would not change today’s conclusion.

No, Intel didn’t shrink AMD in performance today, but it did (spoiler alert) at the power outlet, and that’s exactly what today’s article builds on. I’ll cheekily save the partial demolition of some Ryzens in productive applications for tomorrow, where the power outlet will also play a role, by the way. And to Intel I can only say: please show more courage right now, there is more than just gaming. “Open World” could also be interpreted differently.
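The efficiency formula isn’t spelled out at this point, so the following is only a minimal sketch, assuming efficiency is expressed as average FPS per watt of CPU power (and, inversely, watts per FPS). The `Run` class, the function names and all numbers are hypothetical placeholders, not measured results from this test.

```python
from dataclasses import dataclass


@dataclass
class Run:
    """One benchmark run (hypothetical structure): average frame rate and average CPU power."""
    cpu: str
    avg_fps: float
    avg_cpu_watts: float


def fps_per_watt(run: Run) -> float:
    """Frames per second delivered per watt of CPU power (higher is better)."""
    return run.avg_fps / run.avg_cpu_watts


def watts_per_fps(run: Run) -> float:
    """Inverse view: watts needed per frame per second (lower is better)."""
    return run.avg_cpu_watts / run.avg_fps


def efficiency_gain(a: Run, b: Run) -> float:
    """Percent by which run `a` is more efficient than run `b`, based on FPS per watt."""
    return (fps_per_watt(a) / fps_per_watt(b) - 1.0) * 100.0


if __name__ == "__main__":
    # Hypothetical placeholder numbers, NOT measured results from this review.
    cpu_a = Run("CPU A", avg_fps=200.0, avg_cpu_watts=80.0)
    cpu_b = Run("CPU B", avg_fps=190.0, avg_cpu_watts=100.0)
    print(f"{cpu_a.cpu}: {fps_per_watt(cpu_a):.2f} FPS/W")
    print(f"{cpu_b.cpu}: {fps_per_watt(cpu_b):.2f} FPS/W")
    print(f"A vs. B: {efficiency_gain(cpu_a, cpu_b):+.1f} %")
```

FPS per watt rewards a CPU that delivers nearly the same frame rate at lower power, which is exactly the situation in a GPU-bound game where the bars barely differ but the power draw does.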

NVIDIA or AMD – In search of the right graphics card

But I don’t want to digress too far, so back to the test system and the most suitable graphics card. It’s quite understandable that Intel prefers an NVIDIA card, the GeForce RTX 3090, in the fight against AMD’s Ryzen, even if that isn’t 100% accurate at 720p. While I did all my professional testing (including certified hardware) with a very fast RTX A6000, gaming at lower resolutions doesn’t look quite as rosy in places because of the overhead of NVIDIA’s drivers. How large the effect is, and in which games, I’ll show in a moment, because it doesn’t occur across the board.

As a fully enabled GA102, the RTX A6000 can be brought to RTX 3090 level and beyond with a little overclocking, but that only really pays off in Ultra HD, where the Radeon card shows its known weaknesses. However, even the GeForce is completely GPU bound there, which puts the supposed disadvantage into perspective. Yes, the two cards are sometimes up to 12% apart, but it simply doesn’t matter anymore: the largest difference between the slowest and the fastest CPU was less than 2%, so when compared per card, the results were again almost identical.

More important, on the other hand, is figuring out the real differences when CPU bound, which forces us into the low resolutions. And that’s exactly where the MSI RX 6900XT Gaming X I used outclasses the big RTX A6000, in some cases by a frighteningly clear margin. Just as some games are extreme outliers in Intel’s numbers, the same happens in my test: while the two cards are “only” a good 9 percentage points apart on average across all games, some games run up to 20 percentage points faster on the Radeon. I very deliberately ran these comparison benchmarks only with the second-fastest Intel CPU, and found that the overall difference between the two cards was about as large as the difference between the fastest Intel and AMD CPUs.
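How the per-game differences are averaged isn’t stated here, so the following is only a minimal sketch, assuming the average card-versus-card advantage is taken as the geometric mean of the per-game FPS ratios (a common choice for benchmark suites, since it works on per-game ratios instead of raw FPS, so high-frame-rate titles don’t dominate). It also reports the largest single-game gap. The function names, game names and frame rates are hypothetical placeholders, not results from this test, and the sketch reports relative differences in percent.

```python
import math


def per_game_advantage(fps_a: dict[str, float], fps_b: dict[str, float]) -> dict[str, float]:
    """Percent by which setup A is faster than setup B in each game.

    Both dicts must cover the same games, measured with the same CPU and settings.
    """
    return {game: (fps_a[game] / fps_b[game] - 1.0) * 100.0 for game in fps_a}


def average_advantage(fps_a: dict[str, float], fps_b: dict[str, float]) -> float:
    """Average advantage of A over B, as the geometric mean of the per-game FPS ratios."""
    ratios = [fps_a[game] / fps_b[game] for game in fps_a]
    geo_mean = math.exp(sum(math.log(r) for r in ratios) / len(ratios))
    return (geo_mean - 1.0) * 100.0


if __name__ == "__main__":
    # Hypothetical 720p frame rates for two graphics cards on the same CPU.
    card_a = {"Game 1": 220.0, "Game 2": 120.0, "Game 3": 150.0}
    card_b = {"Game 1": 200.0, "Game 2": 100.0, "Game 3": 150.0}
    deltas = per_game_advantage(card_a, card_b)
    for game, delta in deltas.items():
        print(f"{game}: {delta:+.1f} %")
    print(f"Average: {average_advantage(card_a, card_b):+.1f} %")
    print(f"Largest single-game gap: {max(deltas.values()):+.1f} %")
```

An arithmetic mean of the per-game percentages would also be defensible; the geometric mean is used here because swapping A and B simply inverts the result, which the arithmetic mean does not guarantee.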

By the way, things hardly look any different in Full HD, even if the gap is not quite as blatant. In any case, the RX 6900XT has no disadvantages, and that’s all that mattered to me. Finally, it was also important to me to test Resizable BAR, i.e. the use and addressing of the extended memory area, on X570 versus Z690. That is rather difficult with a GeForce card, because the possible gain there is rather marginal. So it’s neither manufacturer preference nor emotion that decides here, but common sense.

Sure, running the gaming and workstation benchmarks separately means extra effort, but it actually allows some of the work to run in parallel, provided you can afford redundant hardware. And to be clear: benchmarks with the GeForce RTX 3090 (or the RTX A6000) are of course not wrong, quite the contrary. But nuances are more visible with the Radeon at 720p, and that’s all I cared about, because after all the AMD patches and driver updates, the bars were suddenly far too similar.

Which brings us back to the introduction. So 720p is a fixed part of my tests, as are the higher resolutions up to WQHD. Today, the day before launch, I decided to leave Ultra HD out of the charts despite all the measurements, because it simply no longer matters which CPU is working in the background. I already mentioned the difference of less than 2% between a Ryzen 5 5600X and a Core i9-12900K with PL1 at 241 watts; being 100% GPU bound makes that possible. The power consumption values are also almost identical to those at WQHD, so I’ll spare you all those charts.

Benchmarks, test system and evaluation software

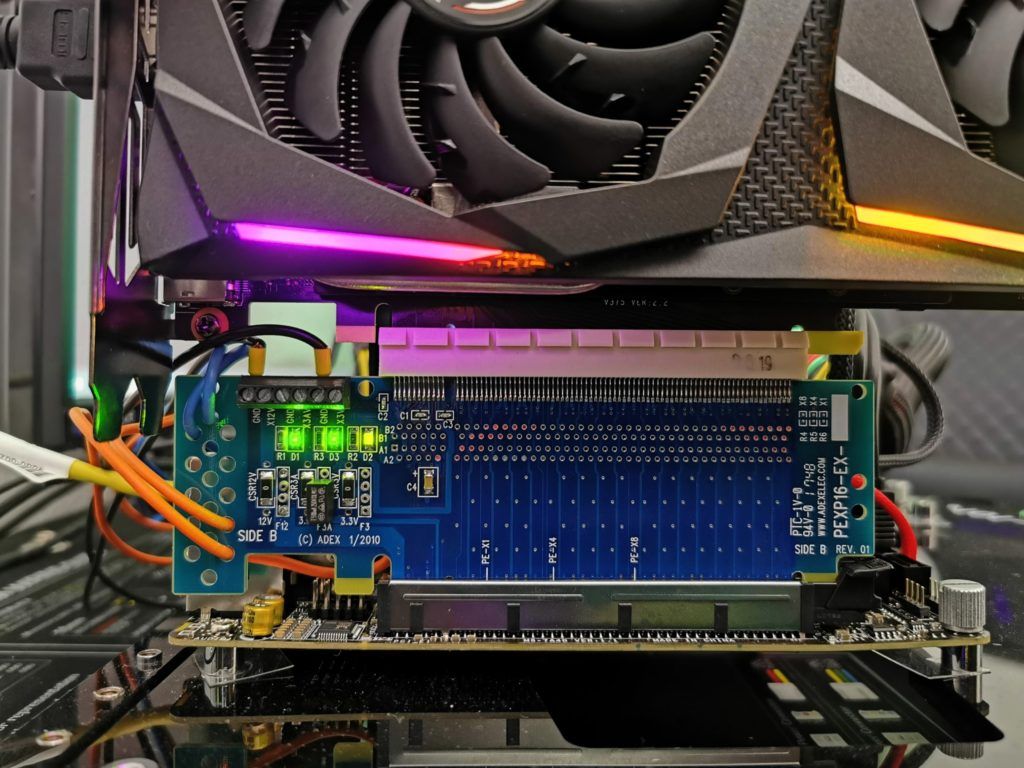

Detailed power consumption and other, more in-depth measurements are carried out here in the special laboratory (whose air-conditioned room is also where the thermographic infrared images are taken with a high-resolution industrial camera) on two tracks: high-resolution oscilloscope technology (follow-ups!) and the self-built, MCU-based measurement setup for motherboards and graphics cards (pictures below).

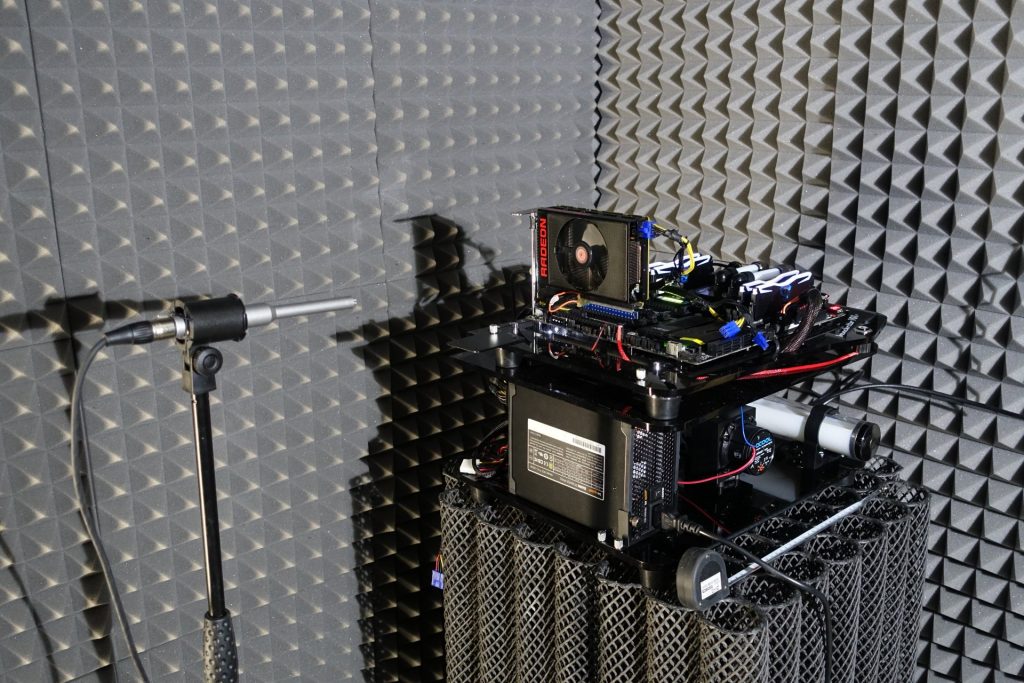

The audio measurements are done elsewhere, in my chamber (a room within a room). But all in good time, because today it’s all about gaming (for now).

I have also summarized the individual components of the test system in a table:

| Test System and Equipment | |
|---|---|
| Hardware: | Intel LGA 1700; Intel LGA 1200; AMD AM4; MSI Radeon RX 6900XT Gaming X OC; 1x 2 TB MSI Spatium M480 |
| Cooling: | Aqua Computer Cuplex Kryos Next; custom LGA 1200/1700 backplate (hand-made); custom-loop water cooling / chiller: Alphacool Subzero |
| Case: | Raijintek Paean |
| Monitor: | LG OLED55 G19LA |
| Power Consumption: | Oscilloscope-based system: non-contact direct current measurement on the PCIe slot (riser card); non-contact direct current measurement at the external PCIe power supply; direct voltage measurement at the respective connectors and at the power supply unit; 2x Rohde & Schwarz HMO 3054, 500 MHz multichannel oscilloscope with memory function; 4x Rohde & Schwarz HZO50, current clamp adapter (1 mA to 30 A, 100 kHz, DC); 4x Rohde & Schwarz HZ355, probe (10:1, 500 MHz); 1x Rohde & Schwarz HMC 8012, HiRes digital multimeter with memory function; MCU-based shunt measuring (own build, Powenetics software) |
| Thermal Imager: | 1x Optris PI640 + 2x Xi400 thermal imagers; Pix Connect software; Type K Class 1 thermal sensors (up to 4 channels) |
| Acoustics: | NTI Audio M2211 (with calibration file); Steinberg UR12 (with phantom power for the microphones); Creative X7; Smaart v.7; own anechoic chamber, 3.5 x 1.8 x 2.2 m (L x D x H); axial measurements, perpendicular to the centre of the sound source(s), measuring distance 50 cm; noise emission in dBA (slow) as RTA measurement; frequency spectrum as graphic |
| OS: | Windows 11 Pro (all updates/patches, current certified or press VGA drivers) |

- 1 - Introduction, preface and test systems

- 2 - 720p - Gaming performance

- 3 - 720p - Power consumption and efficiency

- 4 - 1080p - Gaming performance

- 5 - 1080p - Power consumption and efficiency

- 6 - 1440p - Gaming performance

- 7 - 1440p - Power consumption and efficiency

- 8 - Overall evaluation of gaming performance

- 9 - Overall evaluation of power consumption and efficiency

- 10 - Summary and conclusion for gaming
