Sure, the full-size GA102, especially overclocked and well cooled, is really fast. In gaming performance it is in no way inferior to a regular GeForce RTX 3090, yet it is much more efficient. But for CPU tests in the CPU-bound range (the “bottleneck”) at resolutions of 1080p and below, it is not just the chip and its theoretical performance that count, but every circumstance right down to the driver. If Intel’s Alder Lake CPUs, especially the flagship Core i9-12900K(F), really are as fast as rumored (I’m deliberately playing dumb here, even though I don’t have to), then only the absolute fastest graphics card, judged as the sum of hardware and software, would be just good enough.

The practical value of testing at a screen resolution of 1280 x 720 pixels (“720p”) is debatable, but there are plausible arguments for it, even if no one with high-end or enthusiast hardware will ever seriously use such postage-stamp resolutions. At higher resolutions and better settings, the CPU is almost always held back by the graphics card (GPU bound) and therefore no longer plays the leading role. Yet most users are still at 1440p and 1080p today, partly because of the all-important high FPS numbers in popular shooters. And besides, today’s 720p may well be the CPU benchmark for tomorrow’s 1080p.

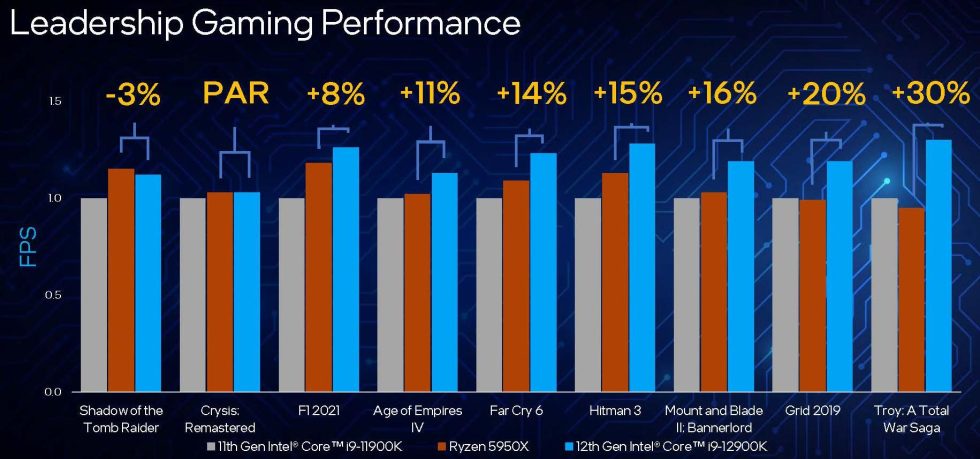

For exactly this reason, Intel has naturally chosen 1920 x 1080 pixels (“1080p”) as the lowest resolution in its own slides, which certainly fits the logic of probing the CPU bound. I, in contrast, will be testing 10 well-balanced games in great detail at a total of four resolutions, from Ultra HD (“2160p”) all the way down to 720p, because the ultimate goal is to satisfy both parts of the readership, including those who have no use for 720p to begin with. That there are not 11 games, as planned, is due to the annoying DRM mechanisms, which have their problems with the new CPU. Intel will have to fix this as quickly as possible (and is currently working on it frantically). Denuvo once again turns out to be a plague that can’t cope with the split into E- and P-cores.

Speaking of Intel’s slides, an important side note on the testing methodology is in order, because it pissed me off a bit. Yes, I too will be testing exclusively on Windows 11, and for good reasons: using ADL on Windows 10 is no fun, and you could not fully exploit the potential of the CPUs there. But if customers are expected to meet Intel halfway and install a new operating system along with the CPU, then the CPUs it is compared against should not have to fight with blunt weapons either.

From my point of view, it is not irresponsible to create such benchmarks at a very early stage and to use them internally for comparison and orientation. But it is well known that the Ryzen CPUs had to accept performance losses of up to 15% under Windows 11 compared to Windows 10, which makes the deliberate publication of false benchmark results all the more questionable. I’ve remeasured a few things on a completely updated system (Windows patches, AMD chipset drivers, motherboard BIOS) and can really only shake my head.

I won’t mention any numbers here, for reasons, but the picture has definitely changed visibly in some cases. Bringing such a silly slide reasonably up to date does not even take a full working day for benchmarking a single CPU and making the necessary corrections in PowerPoint. For a company with Intel’s resources, all of this is a piece of cake, but quite obviously not something the marketing department wanted.

NVIDIA or AMD – In search of the right card

I don’t want to digress too far, though, so let’s come back to the test system and the most suitable graphics card. That Intel prefers an NVIDIA card, the GeForce RTX 3090, in the fight against AMD’s Ryzen is quite understandable, even if it is not 100% expedient. I did all of my professional testing (including certified hardware) with a very fast RTX A6000, but gaming at lower resolutions doesn’t look quite as rosy in places because of the overhead of NVIDIA’s drivers. How large the effect is, and in which games, I will show in a moment, because it does not occur everywhere.

As the fully enabled GA102, the RTX A6000 can definitely be brought up to the level of the RTX 3090 with a little overclocking, but the whole thing is really only useful in Ultra HD, where the Radeon card shows its known weaknesses. In this case, however, an absolute GPU bound has to be expected on the GeForce as well, which puts the supposed disadvantage into perspective. Yes, the two cards are sometimes up to 12% apart in performance, but that no longer matters. Here, the largest gap between the slowest and the fastest CPU was less than 2%, so compared card by card, the results were again almost identical.
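The "less than 2% between the slowest and fastest CPU" check can be sketched in a few lines. This is only an illustration under stated assumptions: the function names, the CPU labels, and all FPS values are hypothetical placeholders, not measurements from this test, and the 2% threshold simply mirrors the figure mentioned above.

```python
def cpu_spread_percent(avg_fps_by_cpu):
    """Gap between the fastest and slowest CPU, in percent of the slowest."""
    slowest = min(avg_fps_by_cpu.values())
    fastest = max(avg_fps_by_cpu.values())
    return (fastest - slowest) / slowest * 100.0


def looks_gpu_bound(avg_fps_by_cpu, threshold_percent=2.0):
    """Treat a resolution as GPU bound if all CPUs land within the threshold.

    The 2% default is an assumption taken from the text, not a fixed rule.
    """
    return cpu_spread_percent(avg_fps_by_cpu) < threshold_percent


# Hypothetical placeholder values (average FPS per CPU on one card).
uhd_results = {"cpu_a": 100.0, "cpu_b": 101.0, "cpu_c": 101.5}
hd_results = {"cpu_a": 200.0, "cpu_b": 230.0, "cpu_c": 250.0}

print(looks_gpu_bound(uhd_results))  # CPUs within 1.5% of each other
print(looks_gpu_bound(hd_results))   # CPUs 25% apart
```

If the spread stays under the threshold, the bars for all CPUs will look nearly identical on that card, regardless of how far the two cards are apart from each other.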

More important, on the other hand, is figuring out the real differences in the CPU bound, which forces us into the low resolutions. But that’s exactly where the MSI RX 6900XT Gaming X that I used outclasses the big RTX A6000, sometimes shockingly clearly. And just as some games swing to extremes with Intel, the same happens in my test: while the difference is “only” a good 9 percentage points on average across all games, some games run up to 20 percentage points faster. I also very deliberately ran these benchmarks with only the second-fastest Intel CPU, and before launch I will of course use only the percentages and not the actual measured FPS values.
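For readers who want to see how such percentage-point figures come about, here is a minimal sketch. It assumes each game's result is normalized so that one card is the 100% baseline; the function names, game labels, and FPS values are hypothetical placeholders and not the measurements from this test.

```python
from statistics import mean


def relative_index(fps_by_game, baseline_by_game):
    """Per-game performance in percent, with the baseline card set to 100."""
    return {
        game: fps_by_game[game] / baseline_by_game[game] * 100.0
        for game in baseline_by_game
    }


def percentage_point_gaps(index_by_game):
    """Distance of each game's index from the 100% baseline, in points."""
    return {game: value - 100.0 for game, value in index_by_game.items()}


# Hypothetical placeholder values (average FPS at 720p, same CPU).
baseline_card = {"game_1": 200.0, "game_2": 150.0, "game_3": 100.0}
other_card = {"game_1": 210.0, "game_2": 165.0, "game_3": 102.0}

gaps = percentage_point_gaps(relative_index(other_card, baseline_card))
average_gap = mean(gaps.values())   # average across all games
largest_gap = max(gaps.values())    # the single most extreme game
print(round(average_gap, 1), round(largest_gap, 1))
```

Averaging the per-game indices, rather than the raw FPS values, keeps a single high-FPS title from dominating the overall figure.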

By the way, the whole thing hardly looks any different in Full HD, even if it’s not quite as blatant. But the RX 6900XT definitely has no disadvantages there, and that’s all that mattered to me. So neither manufacturer preferences nor emotions play a role here, just common sense. Sure, running gaming and workstation benchmarks separately is extra effort, but it also allows some of the work to be parallelized if you can afford redundant hardware. And finally, to be clear: benchmarks with the GeForce RTX 3090 (or the RTX A6000) are definitely not wrong, on the contrary, but the nuances are more visible and clearer in the test with the Radeon.

Which brings us back to where we started. So 720p is a fixed part of my tests, and so are the high resolutions. Even if the FPS bars for all CPUs unfortunately look very similar at those resolutions, I offer further details for each game, such as power consumption, frame times and variances, and therefore, in the end, a very finely broken down view of efficiency. That way, such benchmarks also have practical value, right up to the question of whether a new platform and CPU are needed for WQHD (“1440p”) or whether it is better to stay with Windows 10 for the time being. Alder Lake only comes as a forced marriage, a Wintel couple, and of course you have to take that into account in the end.
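How frame times, variance, and power draw can be condensed into one per-game summary is sketched below. This is an assumption-laden illustration, not the evaluation used for the charts: the function name, the nearest-rank 99th percentile, and FPS per watt as the efficiency figure are my choices for the example, and the input values are hypothetical placeholders.

```python
from statistics import mean, pvariance


def summarize_run(frame_times_ms, avg_power_watts):
    """Condense one benchmark run into FPS, smoothness and efficiency figures."""
    avg_fps = 1000.0 / mean(frame_times_ms)

    # Nearest-rank 99th percentile, computed with integer math: ceil(0.99 * n).
    ordered = sorted(frame_times_ms)
    rank = (99 * len(ordered) + 99) // 100
    p99_frame_time_ms = ordered[rank - 1]

    return {
        "avg_fps": avg_fps,
        "frame_time_variance_ms2": pvariance(frame_times_ms),
        "p99_frame_time_ms": p99_frame_time_ms,
        # Efficiency expressed as FPS per watt (an assumption for this sketch).
        "fps_per_watt": avg_fps / avg_power_watts,
    }


# Hypothetical placeholder run: perfectly even 10 ms frames at 50 W average.
summary = summarize_run([10.0, 10.0, 10.0, 10.0], avg_power_watts=50.0)
print(summary)
```

Two CPUs with nearly identical FPS bars can still differ clearly in variance and in FPS per watt, which is exactly why these extra figures are worth showing at the high resolutions.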
