The GeForce RTX 3090 Ti, which was rumored for 01/27/22, has been postponed by quite a bit. There may be several different reasons for this, but they certainly have to be considered in context with each other. That there is in fact a delay could already be read everywhere, but unfortunately not why exactly. NVIDIA’s board partners were surprisingly tight-lipped this time, and yet even a yes-no process of elimination leads to an interesting conclusion if you ask the right questions. I don’t want to speculate wildly today, but rather do a little basic research based on various pieces of information and puzzle pieces (which were, so to speak, thrown into my virtual mailbox).

The circumstances I’m writing about today describe a real scenario, but this article only claims to be a practical, fact-based “what if” experiment and not a leak where you calmly burn your sources and NVIDIA’s legal department starts spinning. Depending on who you ask, the current rumors speak of hardware or firmware problems. I would even go so far as to speak of hardware AND firmware problems, where the firmware could be the cause and the solution at the same time, and the hardware a hard concrete wall in the way of high speeds.

However, I also have to qualify this: in the meantime, three big board partners have given assurances that their cards run smoothly, that they have no real problems with them, and that the hold came from NVIDIA without any reasons being given. Whether NVIDIA is now struggling with its own Founders Edition and the rest of the vendors have to wait for the “MSRP model”, who knows… But it is certainly no secret that adapting the FE (right down to the cooling) is not easy. That’s why today’s article refers only to the FE and no other model.

Existing designs and the limits of balancing

To get everyone up to speed, I will greatly simplify the following content, at least as far as it remains accurate enough. Let’s start with the commercial and technical side. Since the GeForce RTX 3090 Ti is likely to lead a unicorn existence, it would not make much sense to develop a separate board for a physically almost identical card (apart from the GPU and the memory) or to send this new card through NVIDIA’s Greenlight program to validate all individual steps. This also explains why MSI, for example, sticks to the three 8-pin connectors instead of the new 16-pin Microfit adapter or the 12+4 ATX connector. You just keep using what you already have!

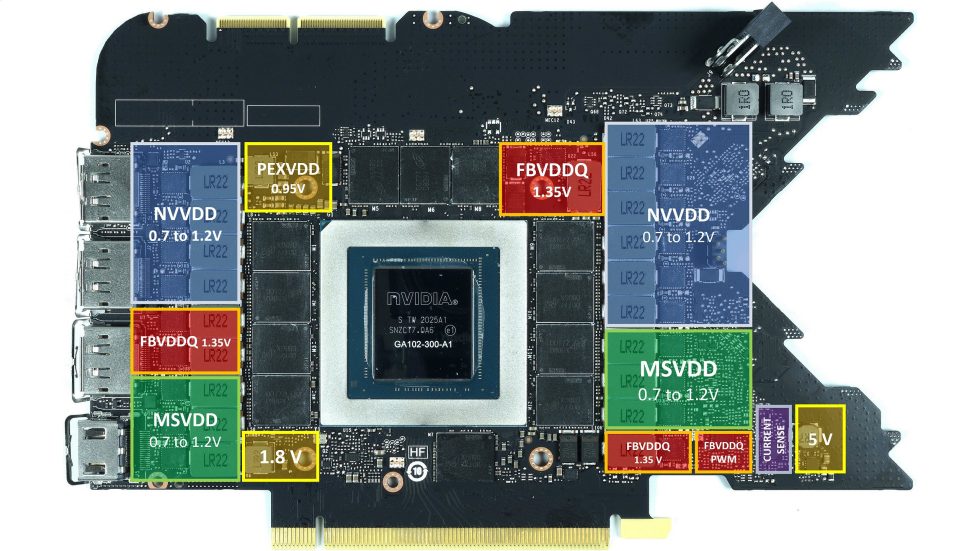

Therefore, board partners will usually do nothing more than keep using the existing designs of the GeForce RTX 3090 and simply adapt these boards, as well as the firmware, accordingly. But as always, the devil is in the details, because the complete power supply of such a card is a very fragile and carefully balanced construction. It is not enough to turn just one or two screws (as has probably often happened); it is essential to check what chain of mutual influences you may be dragging along behind you, especially since all these cards were already designed very close to the edge. For the sake of simplicity, let’s first look at the layout of the GeForce RTX 3090 Founders Edition purely as an example, leaving the rear side aside:

We see the GPU supply voltage split into NVVDD (blue) and MSVDD (green), as well as the memory supply in the form of FBVDDQ (red). The generation of the other partial voltages can be neglected here for the time being because of their small share in the TBP, although it is not completely uninteresting. If you know how the phases of the voltage converters work, then you also know that the majority are fed from the external PCIe power connectors (Aux). But not all of them. A certain portion also hangs on the PCIe motherboard slot (PEG), and this is where the problem starts, which is actually quite easy to understand! On many GeForce RTX 3090s, the PEG has already reached its limit of 5.5 amps (i.e. 66 watts) on the 12-volt rail and could only be loaded further by means of a (blatant) spec violation!
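The 66-watt figure follows directly from P = U × I. As a small sketch, using only the 5.5 A and 12 V values from the text (the function names and the 70 W sample reading are purely illustrative assumptions):

```python
# Sketch: power limit of the PCIe slot (PEG) 12 V rail, using the figures from the text.
PEG_CURRENT_LIMIT_A = 5.5   # 12 V current limit of the slot, as named in the text
RAIL_VOLTAGE_V = 12.0       # nominal rail voltage


def peg_power_limit_w(current_limit_a=PEG_CURRENT_LIMIT_A, voltage_v=RAIL_VOLTAGE_V):
    """Maximum power the slot's 12 V rail may deliver (P = U * I)."""
    return current_limit_a * voltage_v


def peg_headroom_w(measured_power_w):
    """Remaining headroom on the slot; a negative value means a spec excursion."""
    return peg_power_limit_w() - measured_power_w


if __name__ == "__main__":
    print(peg_power_limit_w())    # 66.0
    # 70 W is a hypothetical reading, not a measurement from this article.
    print(peg_headroom_w(70.0))   # -4.0
```

A card that already sits at the limit has a headroom of zero, so every additional watt has to come from the Aux connectors.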

By the way, if you want to know which components account for which power consumption, you can take a look at the reference layout (PG132) that NVIDIA gave to the board partners as a guide. The thermal load naturally corresponds to the electrical power dissipation of the respective components; the law of conservation of energy still applies! And so we see that the memory is budgeted at almost 60 watts (i.e. 2x 30 watts, one per side) and the GPU actually stays below 230 watts.

However, a considerably higher TBP (from about 480 watts) is stored in the firmware for the GeForce RTX 3090 Ti, i.e. the value for the entire graphics card including all 12-volt rails. And if we keep the components from the schematic above in mind, things will also get quite eventful thermally, especially around the GPU, the GPU voltage converters and the memory. However, the waste heat is ultimately only the result of the electrical energy previously supplied.

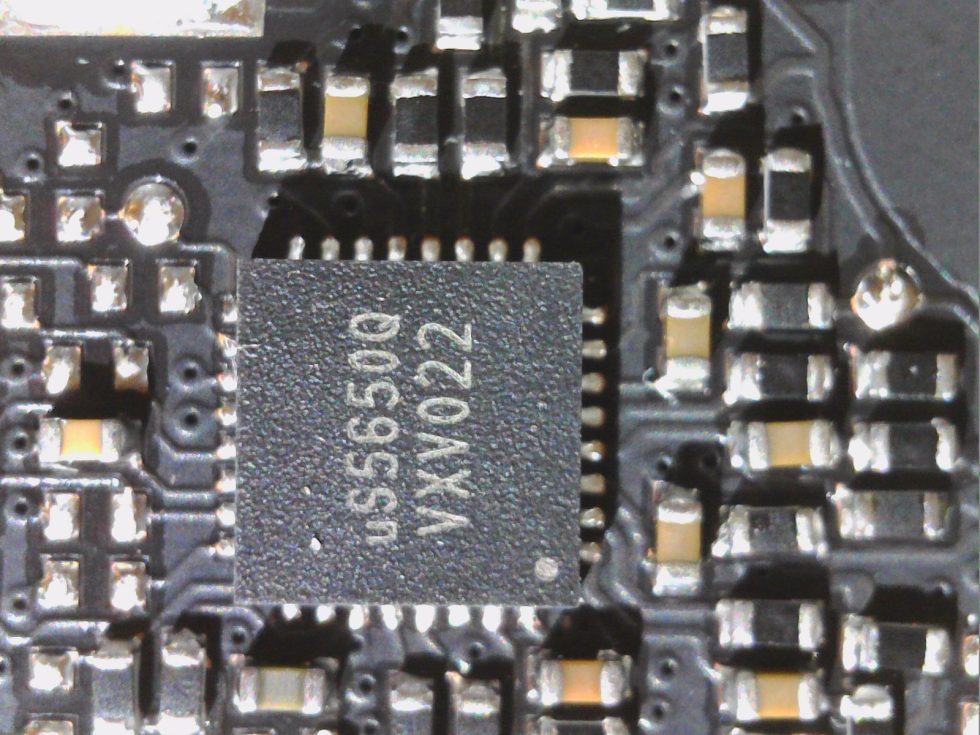

This is likely the primary starting point of all the problems, even though NVIDIA meticulously monitors all incoming rails with so-called monitoring chips, such as UPI’s uS5650Q shown below. These special chips record various voltages at quite short and thus very suitable intervals, allowing real-time control.

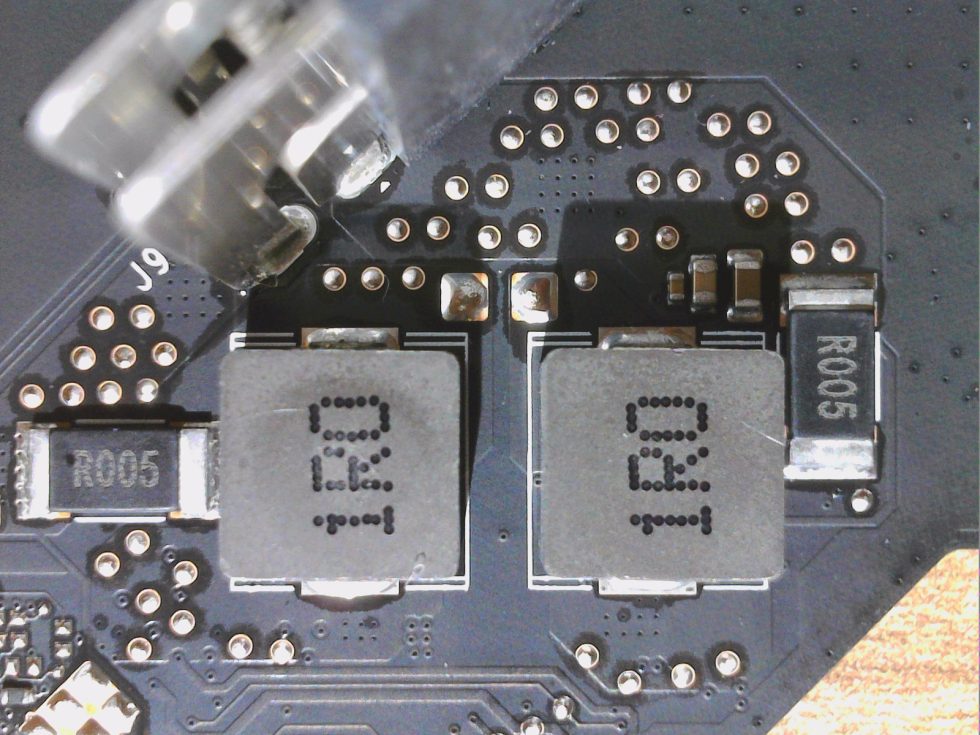

But in the end it is also about the currents flowing, so it is more convenient to measure the voltage drop that occurs at the respective inputs of the 12 V rails across so-called shunts, i.e. low-resistance resistors (see picture below, next to the two longitudinal coils in the middle). From this, the current flowing through each rail can be calculated quite simply using Ohm’s law, and together with the input voltage, which is also recorded, the respective power follows as the product of both values. Added up, you then have the current total power consumption, which programs like HWInfo can also read out. AMD unfortunately does not offer such a service (yet). Too bad, actually. And when I write “you” here in such a simplified way, it is of course the firmware of the card that takes care of that.
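The calculation described here can be sketched in a few lines. This is only an illustration of the principle and not NVIDIA’s firmware: the shunt value of 5 mOhm, the rail names and all sample readings are assumptions made for this example.

```python
from dataclasses import dataclass

# ASSUMPTION: 5 mOhm shunts, chosen purely for illustration.
SHUNT_OHMS = 0.005


@dataclass
class RailSample:
    name: str
    shunt_drop_v: float   # voltage drop measured across the shunt
    input_v: float        # rail input voltage recorded at the same time


def rail_current_a(sample, shunt_ohms=SHUNT_OHMS):
    """Ohm's law: I = U_drop / R_shunt."""
    return sample.shunt_drop_v / shunt_ohms


def rail_power_w(sample, shunt_ohms=SHUNT_OHMS):
    """Power on one rail: P = U_in * I."""
    return sample.input_v * rail_current_a(sample, shunt_ohms)


def board_power_w(samples, shunt_ohms=SHUNT_OHMS):
    """Total board power as the sum over all monitored 12 V rails."""
    return sum(rail_power_w(s, shunt_ohms) for s in samples)


if __name__ == "__main__":
    # Hypothetical readings: slot (PEG) plus two external connectors (Aux).
    samples = [
        RailSample("PEG", 0.0275, 12.0),
        RailSample("AUX1", 0.0600, 12.0),
        RailSample("AUX2", 0.0600, 12.0),
    ]
    for s in samples:
        print(f"{s.name}: {rail_current_a(s):.1f} A, {rail_power_w(s):.1f} W")
    print(f"Total: {board_power_w(samples):.1f} W")
```

With these made-up readings, the slot sits exactly at 5.5 A, which is the situation described above for many GeForce RTX 3090s.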

Unfortunately, it is not possible to increase the power consumption of individual components like the GPU or memory without a simultaneous increase across all components. And yes, the even faster memory will probably need additional power too and will certainly not get by with the current maximum of 3 watts per module. And all this with existing designs? With an MSI RTX 3090 Ti Suprim, I would certainly not have a thermal problem thanks to the fat cooler, but I would have an electrical one.
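To make the electrical problem tangible, here is a rough sketch of what happens when an existing rail split is simply scaled up to the 480 watts mentioned above. The baseline split is a hypothetical example and not a measurement, and the assumption that every rail scales by the same factor is a deliberate simplification.

```python
PEG_LIMIT_W = 5.5 * 12.0  # 66 W slot limit on the 12 V rail, from the text


def scale_rails(baseline_w, target_tbp_w):
    """Scale every rail by the same factor so that the sum hits the target TBP.

    SIMPLIFICATION: assumes the share of each rail stays unchanged,
    as it would on a reused board design without rebalancing.
    """
    total = sum(baseline_w.values())
    factor = target_tbp_w / total
    return {name: power * factor for name, power in baseline_w.items()}


if __name__ == "__main__":
    # HYPOTHETICAL baseline split for an existing card (not measured values).
    baseline = {"PEG": 60.0, "AUX1": 145.0, "AUX2": 145.0}
    scaled = scale_rails(baseline, 480.0)
    for name, power in scaled.items():
        print(f"{name}: {power:.1f} W")
    print("PEG over limit:", scaled["PEG"] > PEG_LIMIT_W)
```

In this example the slot would end up well above 66 watts, so the extra load has to be shifted to the external connectors, which is exactly the kind of rebalancing an existing design does not give you for free.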

And even though I actually dislike shunt mods for everyday use, you can learn something from them! Because if you only change the shunts on the external power connectors (two, in the case of the RTX 3090 FE) and leave the shunt of the PCIe slot (motherboard) in its original state, the card will sporadically become unstable and will no longer run cleanly under load. I tested this as well before taking the measurements on the following page. So turn the page! Now there are watts to marvel at.
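Why a partial shunt mod skews the picture can be shown with a little arithmetic. The sketch below assumes a typical mod where a second resistor is soldered in parallel to the stock shunt, and it assumes the firmware keeps calculating with the stock value. The 5 mOhm value and the currents are illustrative, not taken from a real card.

```python
def parallel_ohms(r1, r2):
    """Effective resistance of two resistors in parallel."""
    return r1 * r2 / (r1 + r2)


def reported_current_a(actual_current_a, stock_ohms, effective_ohms):
    """Current the firmware derives if it still assumes the stock shunt value."""
    drop_v = actual_current_a * effective_ohms   # real voltage drop across the shunt
    return drop_v / stock_ohms                   # firmware divides by the stock value


if __name__ == "__main__":
    STOCK_OHMS = 0.005                               # ASSUMPTION: 5 mOhm stock shunt
    modded = parallel_ohms(STOCK_OHMS, STOCK_OHMS)   # identical resistor stacked on top

    # Modified Aux shunt: the firmware sees only half of the real current.
    print(f"Aux reported: {reported_current_a(12.0, STOCK_OHMS, modded):.1f} A of 12.0 A")
    # Untouched PEG shunt: the firmware still sees the full current.
    print(f"PEG reported: {reported_current_a(5.5, STOCK_OHMS, STOCK_OHMS):.1f} A of 5.5 A")
```

The Aux rails then appear to have plenty of headroom, while the slot is still reported truthfully and remains at its limit.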
