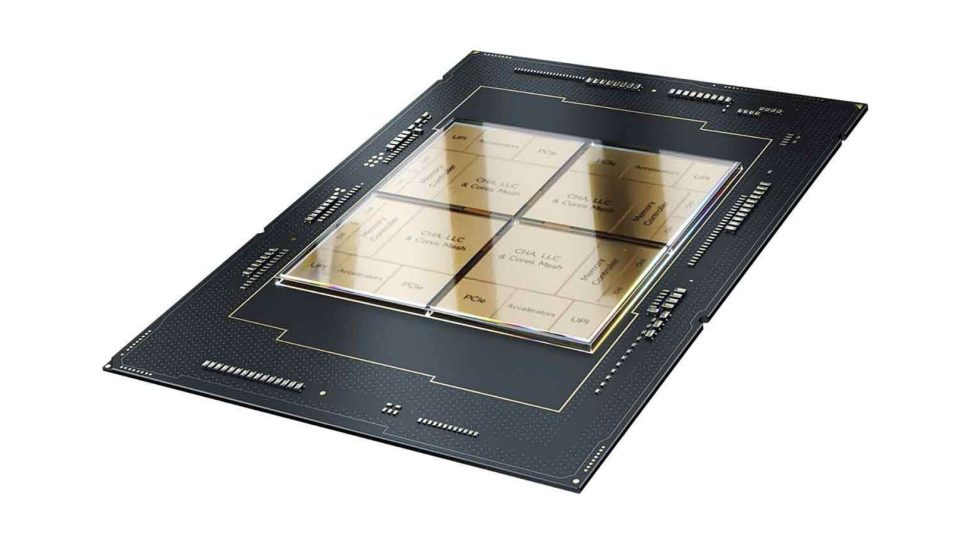

Today, MLCommons released the results of MLPerf Training 3.0, the widely followed AI performance benchmark. This round included results for both the Habana Gaudi2 deep learning accelerator and the fourth-generation Intel Xeon Scalable processor. The results confirm the cost advantage that Intel Xeon processors and Intel Gaudi deep learning accelerators offer to AI customers.

The Xeon processor, with its built-in accelerators, is an ideal solution for running large-scale AI workloads on general-purpose processors. Gaudi, on the other hand, offers competitive performance for large language models and generative AI. Intel’s scalable systems, with optimized, easy-to-use and open software, lower the barriers for customers and partners to implement a variety of AI-based solutions in the data center, from the cloud to the intelligent edge, according to Sandra Rivera, executive vice president and general manager of the Data Center and AI Group at Intel.

A current perception in the industry is that generative AI and large language models (LLMs) can only run on Nvidia GPUs. However, new data shows that Intel’s AI portfolio offers compelling and competitive options for customers looking to break free from closed ecosystems that limit efficiency and scalability. The latest MLPerf Training 3.0 results underscore the performance of Intel products on a wide range of deep learning models. The training software and systems based on Gaudi2 were successfully demonstrated at scale on the GPT-3 large language model. Gaudi2 is one of only two semiconductor solutions to submit performance results for the GPT-3 LLM training benchmark.

In addition, Gaudi2 offers customers significant cost benefits at both the server and the system level. The accelerator’s MLPerf-validated performance on GPT-3, computer vision, and natural language models, together with upcoming software advances, makes Gaudi2 a highly compelling price/performance alternative to the Nvidia H100.

On the CPU side, the 4th generation Xeon processors with Intel AI Engines have shown their capability in deep learning training. Customers can build a single general-purpose AI system on Xeon-based servers that combines data pre-processing, model training, and deployment, providing the right combination of AI performance, efficiency, accuracy, and scalability.

Training generative AI and large language models requires server clusters that can handle massive computational demands at scale. The MLPerf results confirm Habana Gaudi2’s outstanding performance and efficient scalability on the most demanding model tested, GPT-3 with 175 billion parameters.

Highlights of the results:

- Gaudi2 delivered impressive “time-to-train” on GPT-3: 311 minutes on 384 accelerators.

- Nearly linear 95% scaling from 256 to 384 accelerators on the GPT-3 model.

- Excellent training results on computer vision (ResNet-50 and Unet3D, each on 8 accelerators) and on natural language processing (BERT on 8 and 64 accelerators).

- Performance increases of 10% and 4% for the BERT and ResNet models, respectively, compared to the November submission, demonstrating the increasing maturity of Gaudi2 software.

- Gaudi2 results were submitted “out of the box,” meaning customers can achieve comparable performance results when implementing Gaudi2 on-premises or in the cloud.
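To make the scaling claim concrete, here is a minimal sketch of how a scaling-efficiency figure is usually computed. It assumes that "95% scaling" means achieved speedup divided by ideal (linear) speedup. The function names are illustrative, and the 256-accelerator time is not quoted in this article: the value below is back-calculated from the 311-minute and 95% figures, not a published measurement.

```python
def scaling_efficiency(n_base, t_base, n_scaled, t_scaled):
    """Achieved speedup as a fraction of the ideal (linear) speedup."""
    speedup = t_base / t_scaled   # how much faster the larger cluster finished
    ideal = n_scaled / n_base     # speedup expected under perfect linear scaling
    return speedup / ideal


def implied_base_time(n_base, n_scaled, t_scaled, efficiency):
    """Training time on the smaller cluster implied by a given efficiency."""
    return efficiency * t_scaled * n_scaled / n_base


# Figures from the highlights: 311 minutes on 384 accelerators,
# roughly 95% scaling from 256 to 384 accelerators.
t384 = 311.0
t256 = implied_base_time(256, 384, t384, 0.95)  # derived, not a reported result

print(f"Implied time on 256 accelerators: {t256:.1f} min")
print(f"Efficiency check: {scaling_efficiency(256, t256, 384, t384):.2%}")
```

Under these assumptions, perfect linear scaling would correspond to 466.5 minutes on 256 accelerators (311 × 1.5), so a 95% figure implies the smaller cluster took a little less than that.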

Software for the Gaudi platform continues to mature and to keep pace with the growing number of generative AI and LLM applications. Gaudi2’s GPT-3 submission was built on PyTorch and used the popular DeepSpeed optimization library (part of Microsoft AI at scale) rather than proprietary software. DeepSpeed enables efficient scaling of LLMs by supporting 3D parallelism, that is, data, tensor, and pipeline parallelism at the same time. The Gaudi2 results for the 3.0 benchmark were submitted in the BF16 data type. Gaudi2 is expected to see a significant performance boost in Q3 2023, when software support for FP8 and other new features is released.

Results with 4th Generation Xeon Processors:

The MLPerf results show that Intel Xeon processors are the only CPUs to provide out-of-the-box enterprise AI capabilities. This allows enterprises to deploy AI applications on general-purpose systems and avoid the cost and complexity of dedicated AI systems. Some customers who only occasionally train large models from scratch can do so on general-purpose CPUs, often on the Intel-based servers they already use for their business operations. Most customers, on the other hand, use pre-trained models and fine-tune them with their own smaller, carefully selected data sets. Intel has already demonstrated that this fine-tuning can be done in just a few minutes using Intel’s AI software and common open source software.

Highlights of the MLPerf results:

- In the closed division, 4th-generation Xeons were able to train BERT and ResNet-50 in less than 50 minutes (47.93 min.) and less than 90 minutes (88.17 min.), respectively.

- For BERT in the open division, the results show that the Xeon was able to train the model in about 30 minutes (31.06 min) when scaled to 16 nodes.

- For the larger RetinaNet model, Xeon was able to achieve a time of 232 minutes on 16 nodes, giving customers the flexibility to use Xeon cycles off-peak to train their models during the morning, over lunch, or overnight.

- The fourth-generation Xeon processor with Intel Advanced Matrix Extensions (Intel AMX) provides significant out-of-the-box performance improvements that span multiple frameworks, end-to-end data science tools and a broad ecosystem of intelligent solutions.

MLPerf is widely recognized as the leading benchmark for artificial intelligence performance. It enables a fair and repeatable comparison of the performance of different solutions. Intel recently reached a major milestone by submitting over 100 entries. Intel remains the only vendor to publicly present CPU results using industry-standard deep learning ecosystem software. These results also demonstrate the excellent scalability possible with the low-cost and readily available Intel Ethernet 800 Series network adapters. These adapters use the open-source Intel Ethernet Fabric Suite software, which is based on Intel oneAPI.

Source: TechPowerUP
