The title may sound a bit martial and exaggerated, but it is the bitter reality if you really want to compare PC cases objectively in thermal and acoustic terms. And that is all this article is about. Of course, subjective factors such as looks, build quality and usability also belong in a good review, so both approaches deserve to coexist peacefully. You just have to label each one for what it is: hands-on and usability on the one hand, actual cooling performance, noise emission and damping for an honest comparison on the other. In today’s guest post, I simply want to give the perspective of those who want robust, objective results, without belittling the work of those who present a case from a user’s perspective in normal reviews. This, however, is about legally sound assessments and certifications.

Initial situation: how much effort do you have to put into determining what?

Some people really believe that testing a case is a piece of cake, because all you have to do is install a system in it and mention in the text how easy or difficult that was, whether you like the internal and external design, and what comes in the box. And if you’re not getting paid to like it, you might as well say a few words about the build quality. You can (and should) do that, but then please don’t call it a comparison. Let’s call it a product presentation and user experience instead, because it has its justification and its target group. But everything beyond that gets tricky.

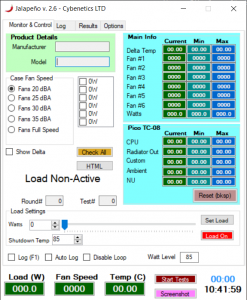

Some more savvy testers take this a step further and also evaluate thermal performance by using the same system in all of their case tests and recording the temperatures of critical components such as CPU, GPU, drives and VRMs in a database for comparison. Even more experienced testers then use at least the delta temperature (component temperature minus ambient temperature) to compensate for different ambient conditions. If you don’t have a climate chamber, you can’t guarantee a stable ambient temperature, so using the delta is the only way to have a somewhat useful reference point across all evaluations. However, even this is not physically exact and does not do justice to some products. You can’t claim objectivity like that.
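The delta approach can be sketched in a few lines. This is only an illustration: the function name and all temperature values are made up for the example and do not come from any real test run.

```python
def delta_over_ambient(component_temps_c, ambient_c):
    """Return each component's temperature rise over ambient (in kelvin)."""
    return {
        name: round(temp_c - ambient_c, 1)
        for name, temp_c in component_temps_c.items()
    }

# Hypothetical readings from two test days with different room temperatures.
run_a = delta_over_ambient({"cpu": 78.4, "gpu": 71.0}, ambient_c=24.5)
run_b = delta_over_ambient({"cpu": 76.1, "gpu": 69.2}, ambient_c=21.8)

print(run_a)
print(run_b)
```

In this made-up example, run B shows lower absolute temperatures, yet its rise over ambient is slightly higher than in run A. That is exactly the kind of distortion absolute readings introduce.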

Finally, very few testers measure noise emissions in addition to thermal performance and try to normalize the results by applying the same test conditions to all cases. That needs a little explaining, because you cannot directly compare a case that emits 40 dBA at full fan speed with a case that produces 35 dBA with its fans at maximum speed. The correct course of action would be to reduce the fan speed of the noisier case until it also outputs 35 dBA. Only then are you comparing apples with apples. To normalize fan noise levels like this, however, you need a semi-anechoic chamber (hereafter simply referred to as a chamber) and a high-precision noise analyzer, and you have to follow the appropriate ISO guidelines that specify exactly how to place the microphone and the test object in the chamber. Such equipment is expensive and the construction of a suitable chamber is also difficult. Well, what now?
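One way to picture noise normalization is a simple search for the fan duty cycle at which a case reaches the target level. The sketch below uses bisection and assumes that noise rises monotonically with fan duty. The `measure_dba` callback stands in for a real measurement in the chamber, and `fake_case` is an invented linear model used purely so the example runs.

```python
def find_duty_for_noise(measure_dba, target_dba, tolerance=0.1, max_steps=20):
    """Bisect the fan duty cycle (0-100 %) until the measured level hits the target.

    measure_dba is a hypothetical callback: it sets the fans to the given duty
    and returns the measured sound pressure level in dBA.
    """
    low, high = 0.0, 100.0
    if measure_dba(high) <= target_dba:
        return high  # the case never exceeds the target, even at full speed
    for _ in range(max_steps):
        mid = (low + high) / 2
        level = measure_dba(mid)
        if abs(level - target_dba) <= tolerance:
            return mid
        if level > target_dba:
            high = mid  # too loud: slow the fans down
        else:
            low = mid   # too quiet: speed the fans up
    return (low + high) / 2

def fake_case(duty):
    """Invented model of a case that reaches 40 dBA at 100 % fan duty."""
    return 22.0 + 0.18 * duty

duty = find_duty_for_noise(fake_case, target_dba=35.0)
print(f"{duty:.1f} % duty gives {fake_case(duty):.2f} dBA")
```

In practice each call to `measure_dba` would have to wait for the fans to settle before reading the analyzer, which is one more reason to automate the procedure.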

Another critical factor in honest case evaluations is noise attenuation (colloquially known as damping). How do you measure it? It’s simple: install a constant noise source inside the enclosure and take measurements. Then remove the noise source from the enclosure and measure again (or vice versa). In the end, the noise attenuation is simply the difference between the two. But which noise pattern should you use? Pink or white noise? And if you really want to be thorough, you could also use a long chirp pattern. Let’s assume that the tester wants to provide more details to interested users. In this case, one could use many different frequencies from ultra-low to super-high and determine the noise attenuation for each frequency (or for different frequency bands). This is what Cybenetics does in its case assessments, for example. It is a demanding procedure that requires top-notch equipment and an advanced chamber. But it also goes considerably beyond the scope of a “normal” review.
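The subtraction itself is trivial once both measurement series exist. Here is a minimal sketch for a per-band evaluation. The band centers and all levels are invented for illustration and are not Cybenetics data.

```python
def attenuation_per_band(free_db, enclosed_db):
    """Attenuation per frequency band: level without the case minus level inside it."""
    return {
        band_hz: round(free_db[band_hz] - enclosed_db[band_hz], 1)
        for band_hz in free_db
    }

# Hypothetical levels (dB) of the same noise source, keyed by band center in Hz.
source_free = {125: 60.0, 1000: 62.0, 8000: 58.0}
source_in_case = {125: 57.5, 1000: 54.0, 8000: 45.5}

damping = attenuation_per_band(source_free, source_in_case)
for band_hz, db in damping.items():
    print(f"{band_hz:>5} Hz: {db:4.1f} dB attenuation")
```

A per-band table like this shows where a case actually damps noise, which a single overall figure would hide.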

Why a real system can lead to errors/problems

However, the biggest flaw with most reviews is that they are based on a real system, which has the following drawbacks:

- It needs to be replaced frequently to keep up with technology trends and provide the necessary “wow factor”. No one is pleased to see a 3-5 year old test system in use, even though thermal performance is what matters most in these tests, not computing power. But a superficial observer is not interested in that.

- You can’t apply an even, accurate and, most importantly, adjustable load with a real system. You rely on how well the benchmark software keeps the load stable. However, even small load deviations can shift the recorded temperatures by several degrees, which is enough to separate one case from another (unjustifiably). Besides, all options that influence the CPU’s power draw have to be set manually in the BIOS first, and the same applies to all corresponding GPU options. Measurement tolerances and reproducibility errors add up pretty quickly here, unfortunately.

- It is not easy to test different scenarios, i.e. different hardware, because you have to use a fixed system to keep all measured values compatible between evaluations.

With a simulated system, however, it is possible to adjust several parameters quickly and effortlessly, so that different scenarios can be tested conveniently (and reproducibly). For example, if you have a 250W CPU and a 350W GPU installed in a real system, you cannot see how the case would perform with a 100W CPU and a 200W GPU. If you can set the load accurately, on the other hand, this is simple, and you can easily simulate all CPUs and GPUs from low-end to high-end. Sounds good? It is!

Sensors and fans – further imponderables

By the way, many testers rely on the motherboard, CPU and GPU temperature sensors, and you can never know whether these sensors are reasonably accurate and report correct temperatures over the entire range. You could argue that this is not much of a problem as long as you use the same system in all tests, but every measurement system needs to be calibrated from time to time, and that is something you can’t do with integrated sensors (aging, firmware updates, etc.). Sure, a few testers use proper, calibrated temperature loggers, but then you have to ensure that the temperature sensors are placed in exactly the same location for every test. Otherwise, the results will not match those of previous tests. The best way to ensure this is to drill small holes in the appropriate heat sinks or metal parts and install the sensors right there every time.

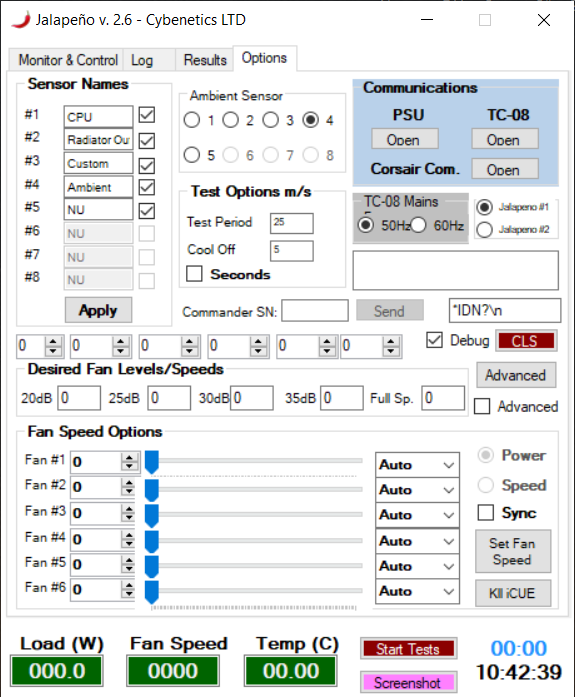

Controlling the fan speed is another challenge. You would need to have total control over all system fans to normalize fan noise and also get a detailed log. However, if you want to run automated tests, a programmable fan controller is also mandatory. And we have already arrived at the actual core issue, which must be clarified for the efficient processing of larger numbers of test objects.

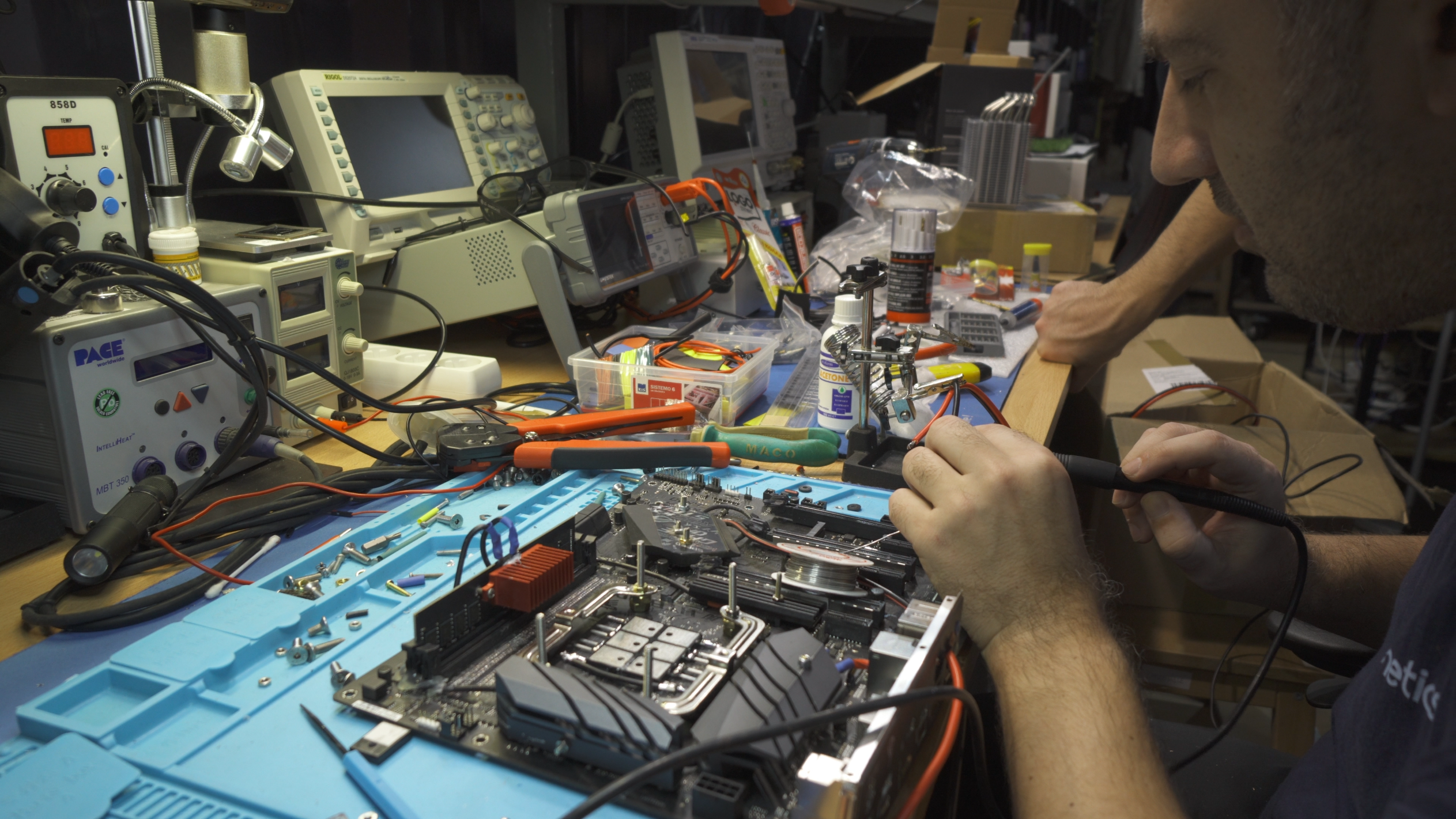

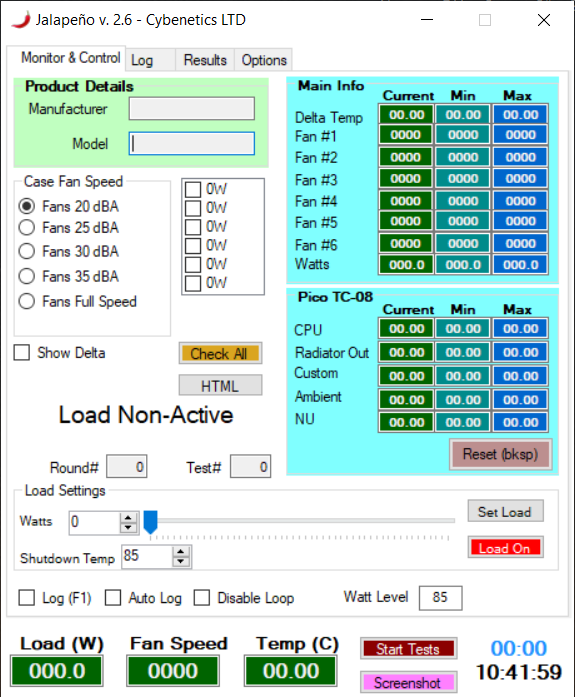

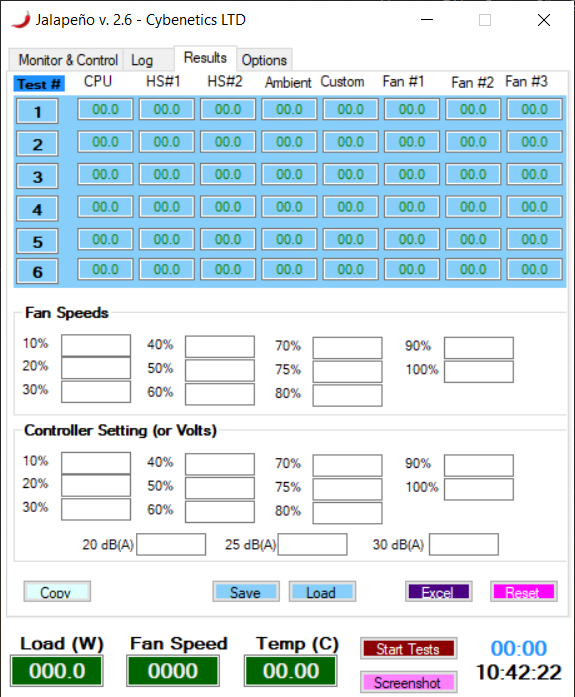

The automation of the processes minimizes errors – the test system is taking shape!

Thus, proper implementation is the most important thing of all. We are all human and prone to mistakes, whether during test execution or when transferring the results to an Excel spreadsheet. To avoid these mostly unconscious mistakes and to enable long test sessions in which all data is logged and reported, you need automation: a control and monitoring program that drives all instruments and runs different test scenarios with varying load and fan speed.

Finally, when everything is ready, the same application should be able to deliver the data in the required format for further analysis, from CSV to Excel files. This part of the code can get very complicated and involved and requires long beta tests. Still, once it is working well, it will give the most accurate results and the whole testing procedure can take many hours without anyone having to supervise the whole process. In addition, such a program can also be used to calculate averages at specific points in time, which are much more accurate than just running a test and recording random spikes.
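To illustrate why averaging beats reading off single values, here is a minimal sketch that averages logged samples over a chosen time window. The sample data, including the artificial spike, is invented, and the function is not taken from the actual test software.

```python
from statistics import mean

def window_average(samples, start_s, end_s):
    """Average all logged values whose timestamp lies within [start_s, end_s]."""
    values = [value for t_s, value in samples if start_s <= t_s <= end_s]
    if not values:
        raise ValueError("no samples in the requested window")
    return mean(values)

# Hypothetical log: (seconds since test start, temperature in degrees C).
log = [(0, 40.0), (300, 55.0), (600, 60.0), (700, 60.5), (800, 66.0), (900, 60.5)]

steady_state = window_average(log, start_s=600, end_s=900)
peak = max(value for _, value in log)
print(f"average over last 5 minutes: {steady_state:.2f}, single peak: {peak:.1f}")
```

Here the single spike of 66.0 would overstate the result by more than four degrees compared with the 61.75 average over the window.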

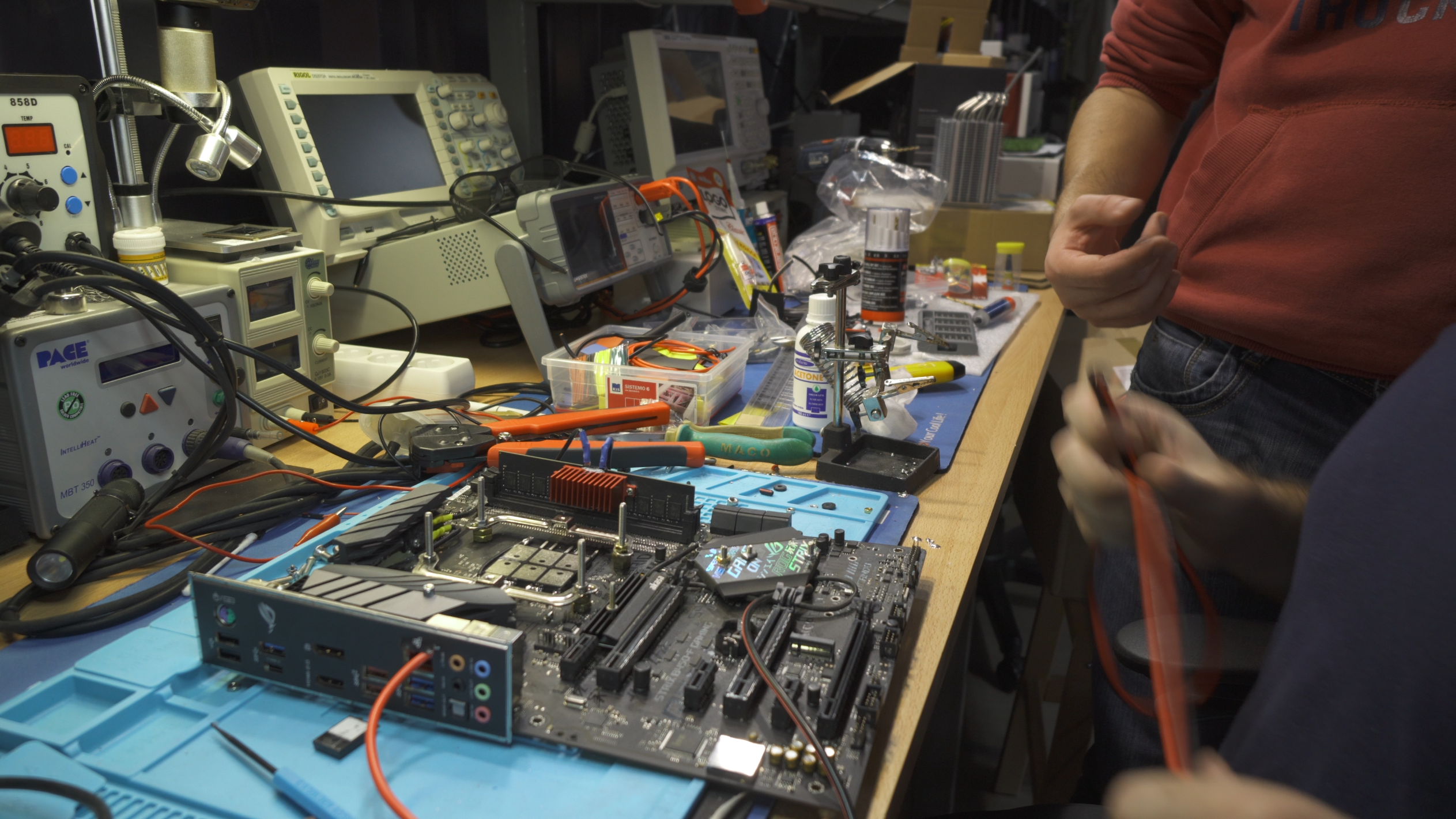

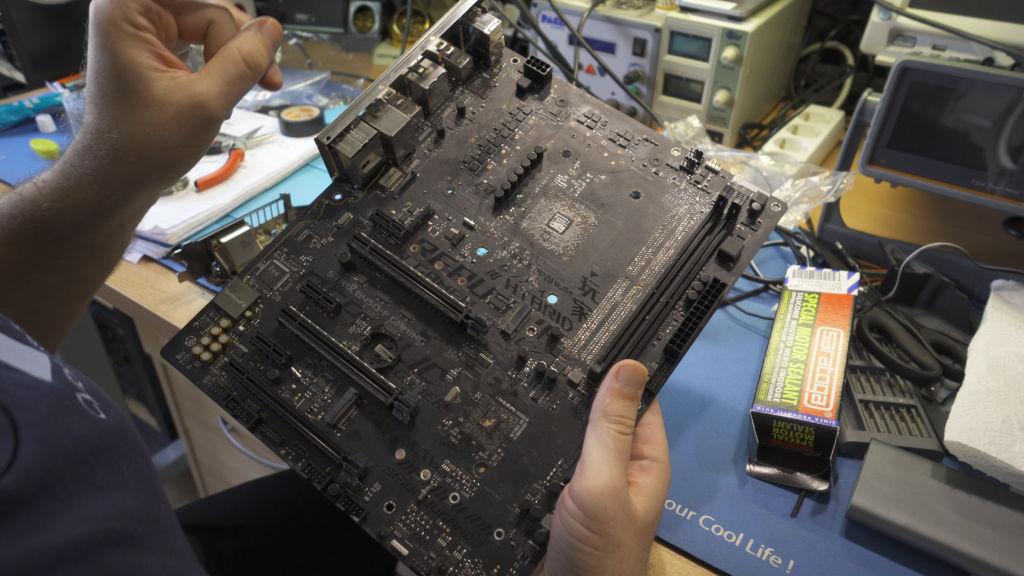

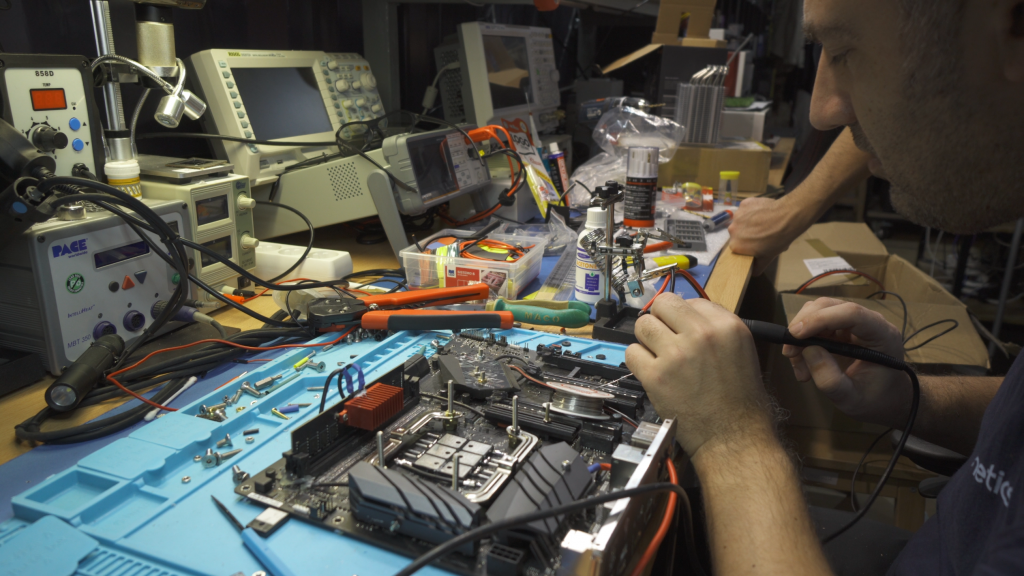

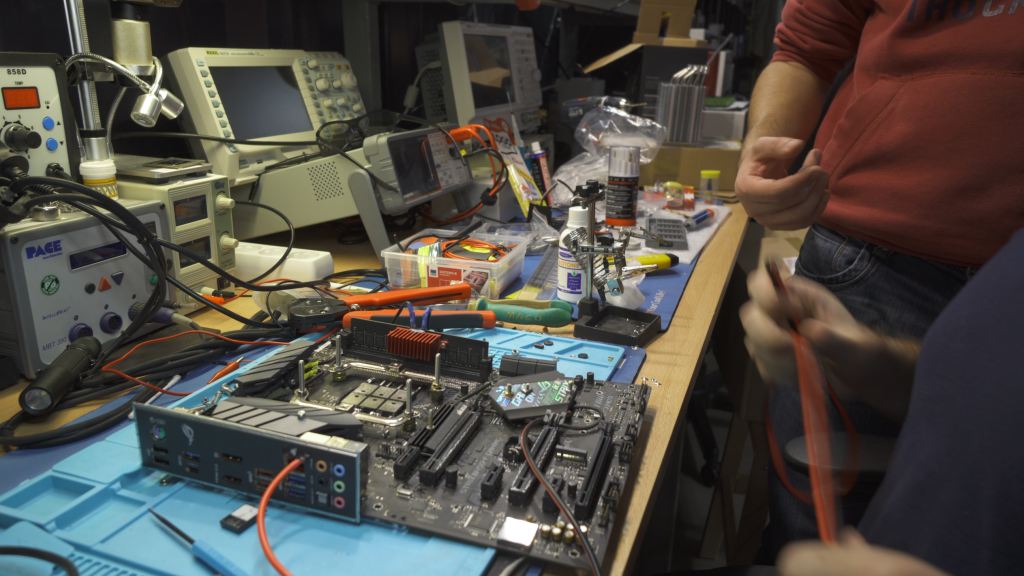

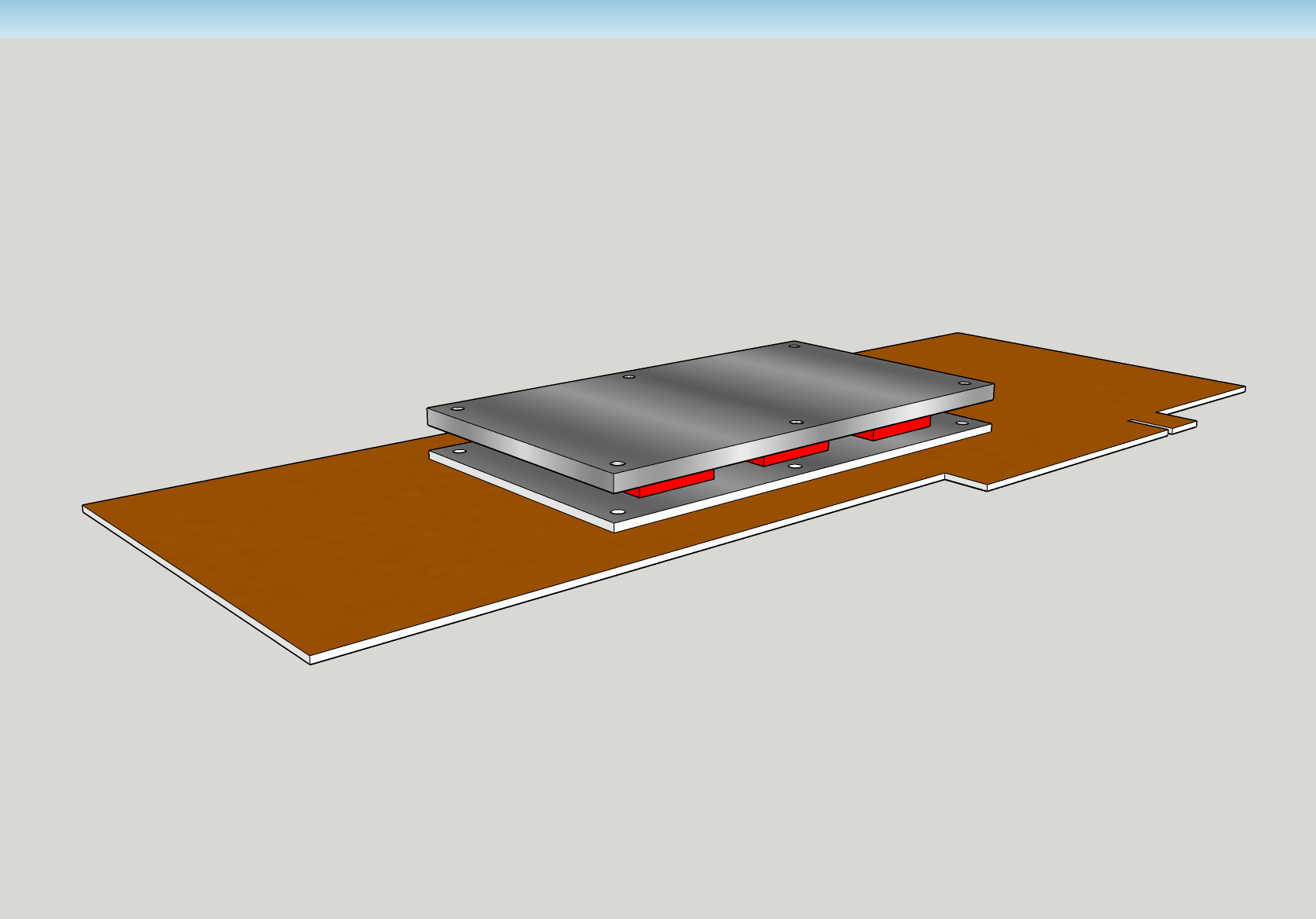

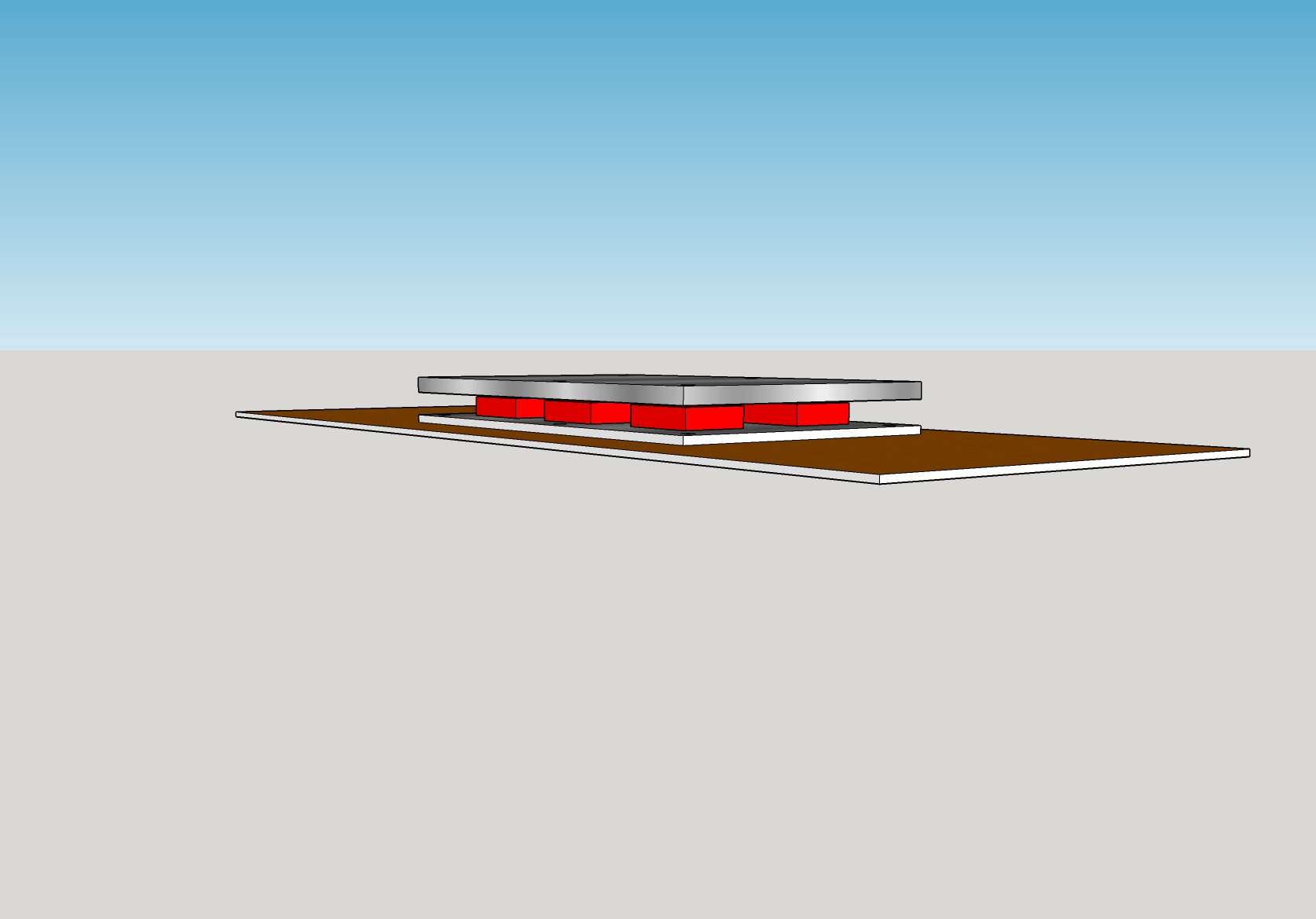

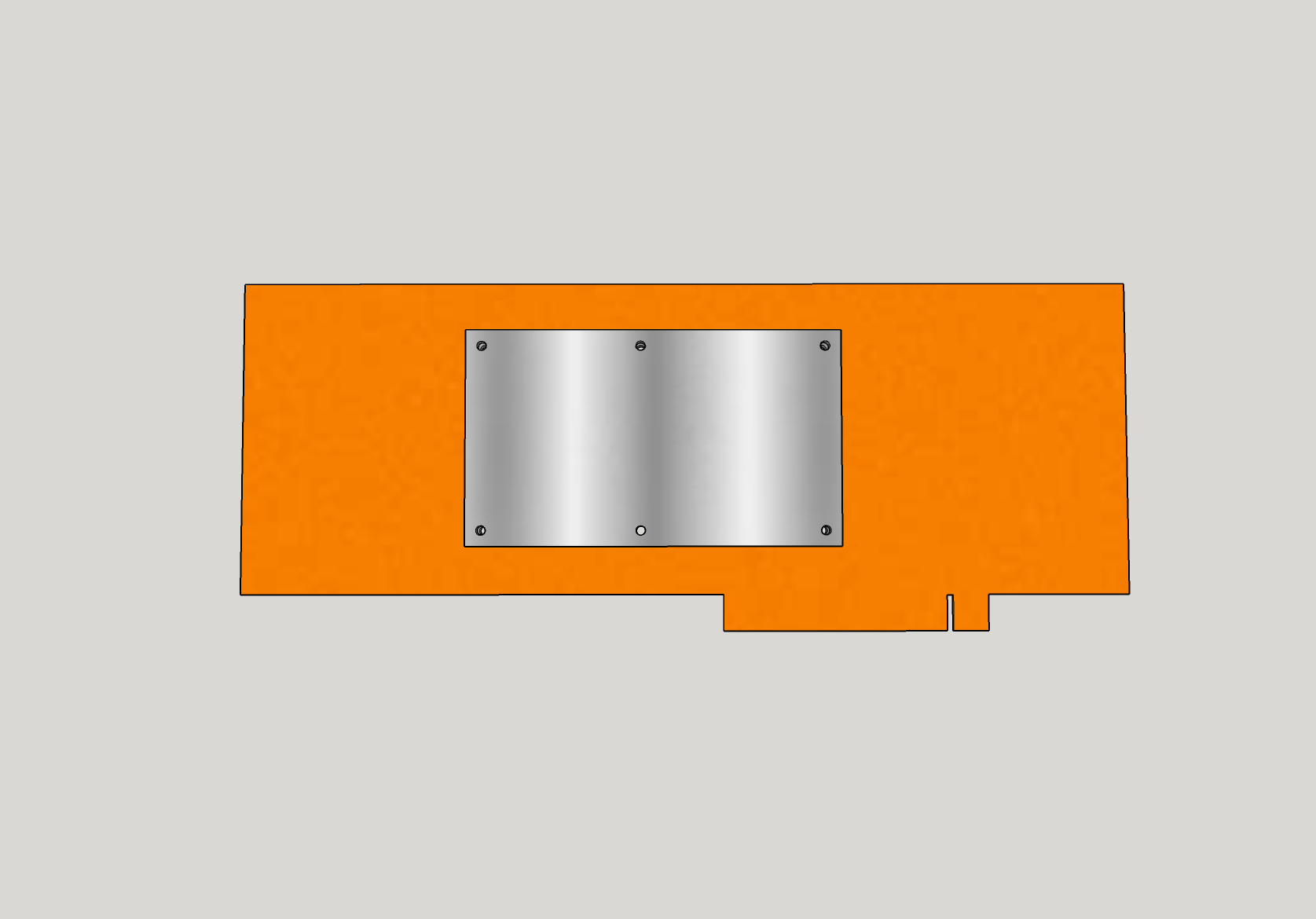

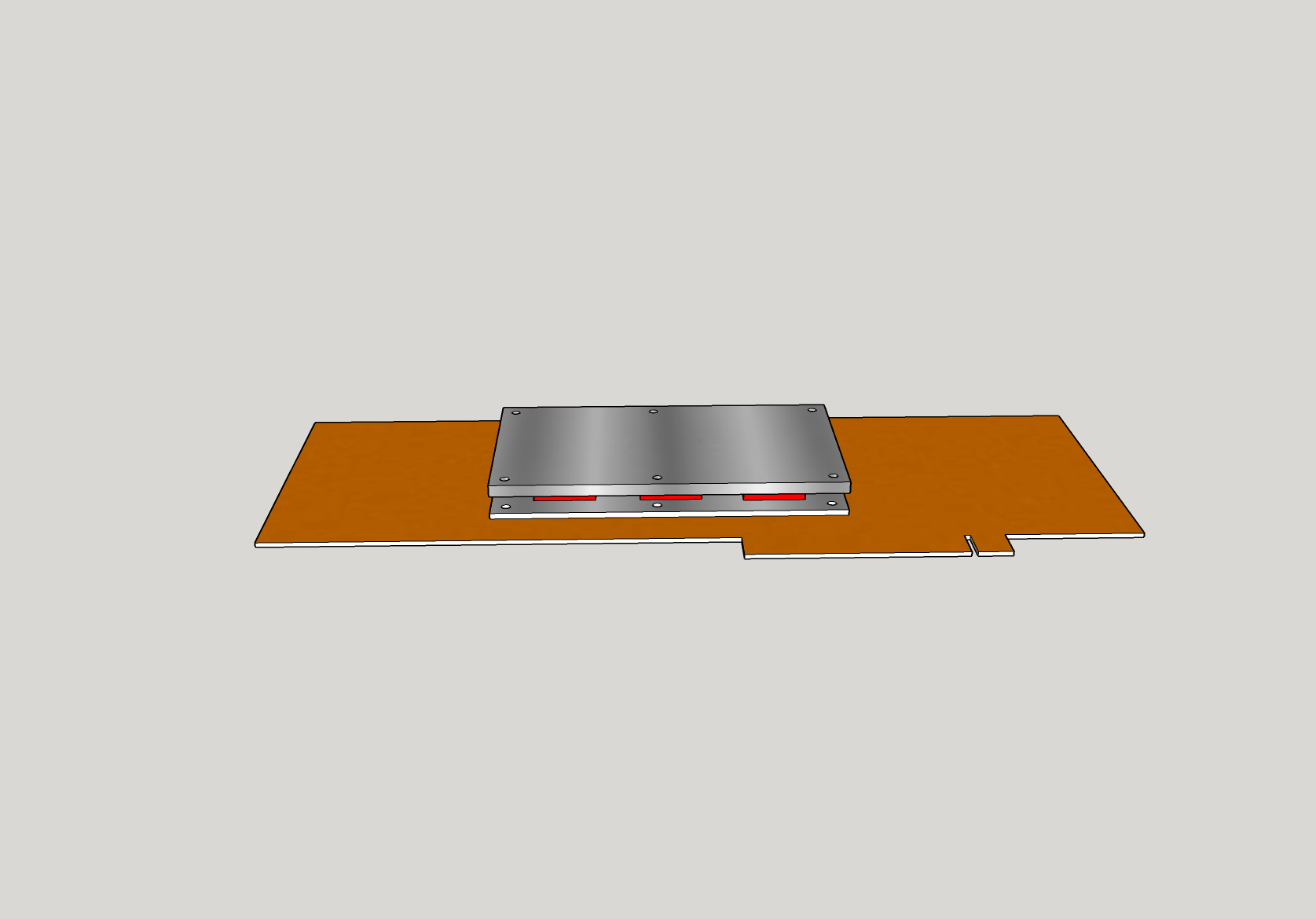

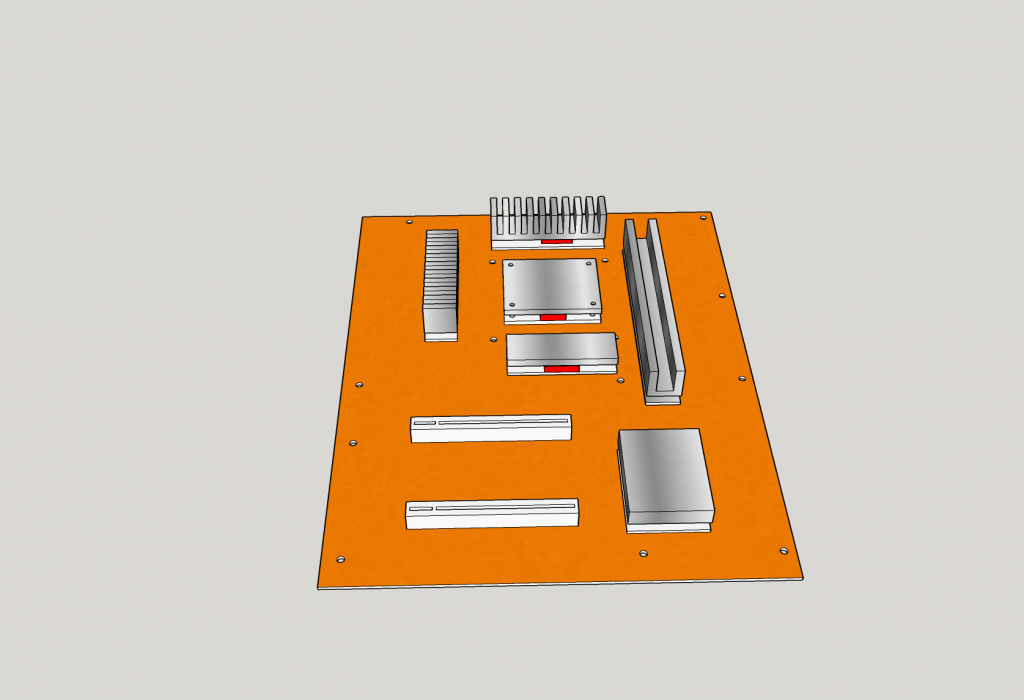

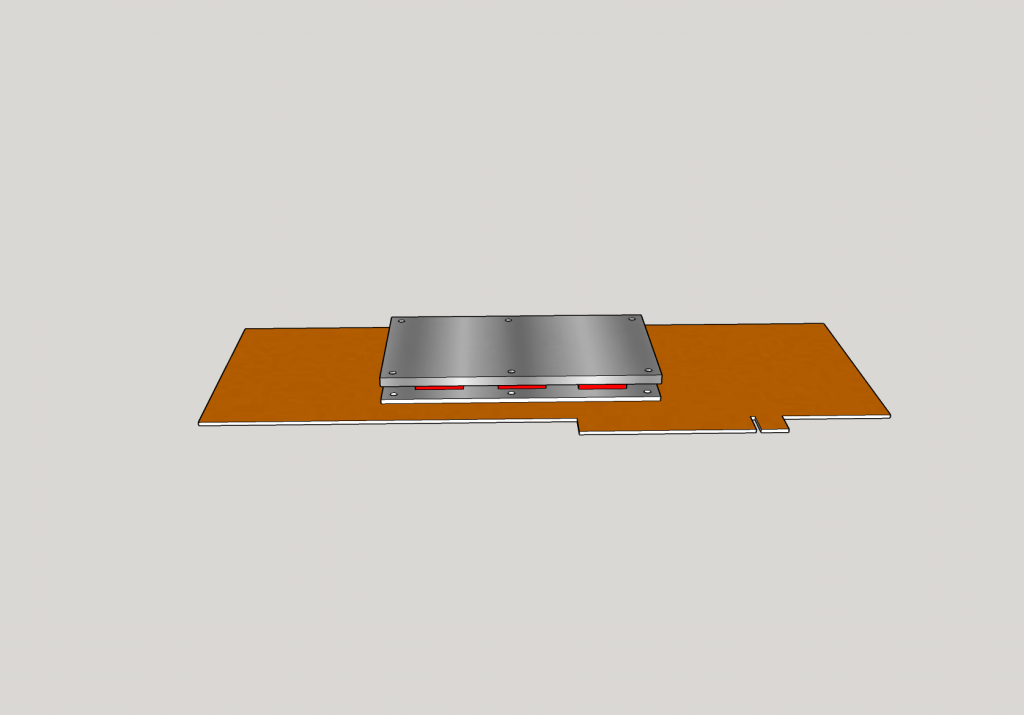

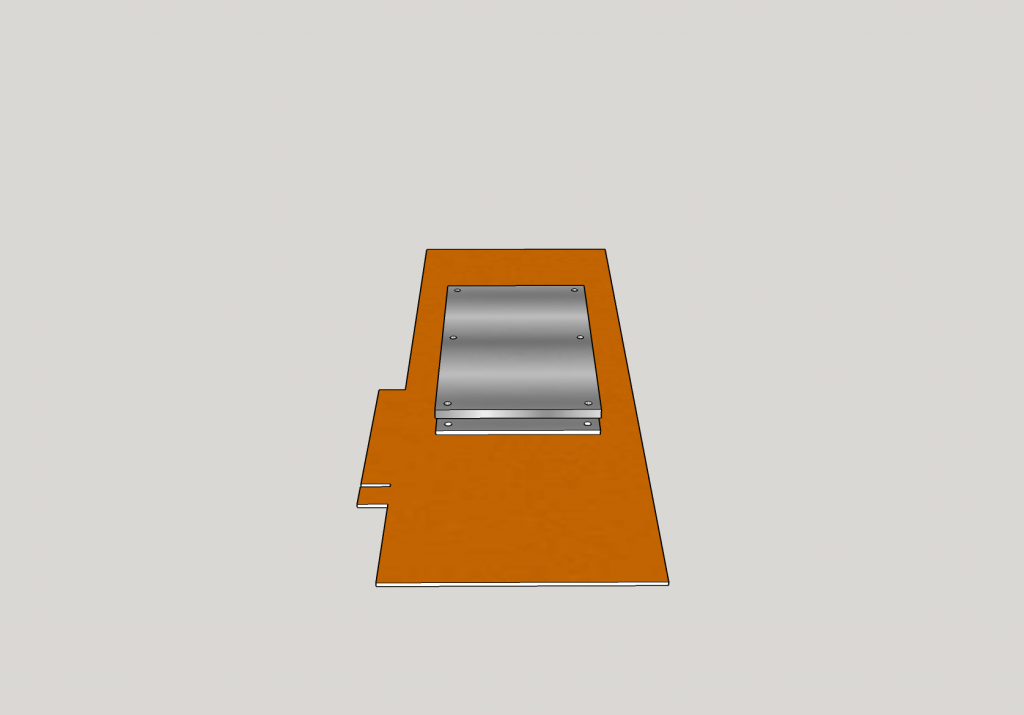

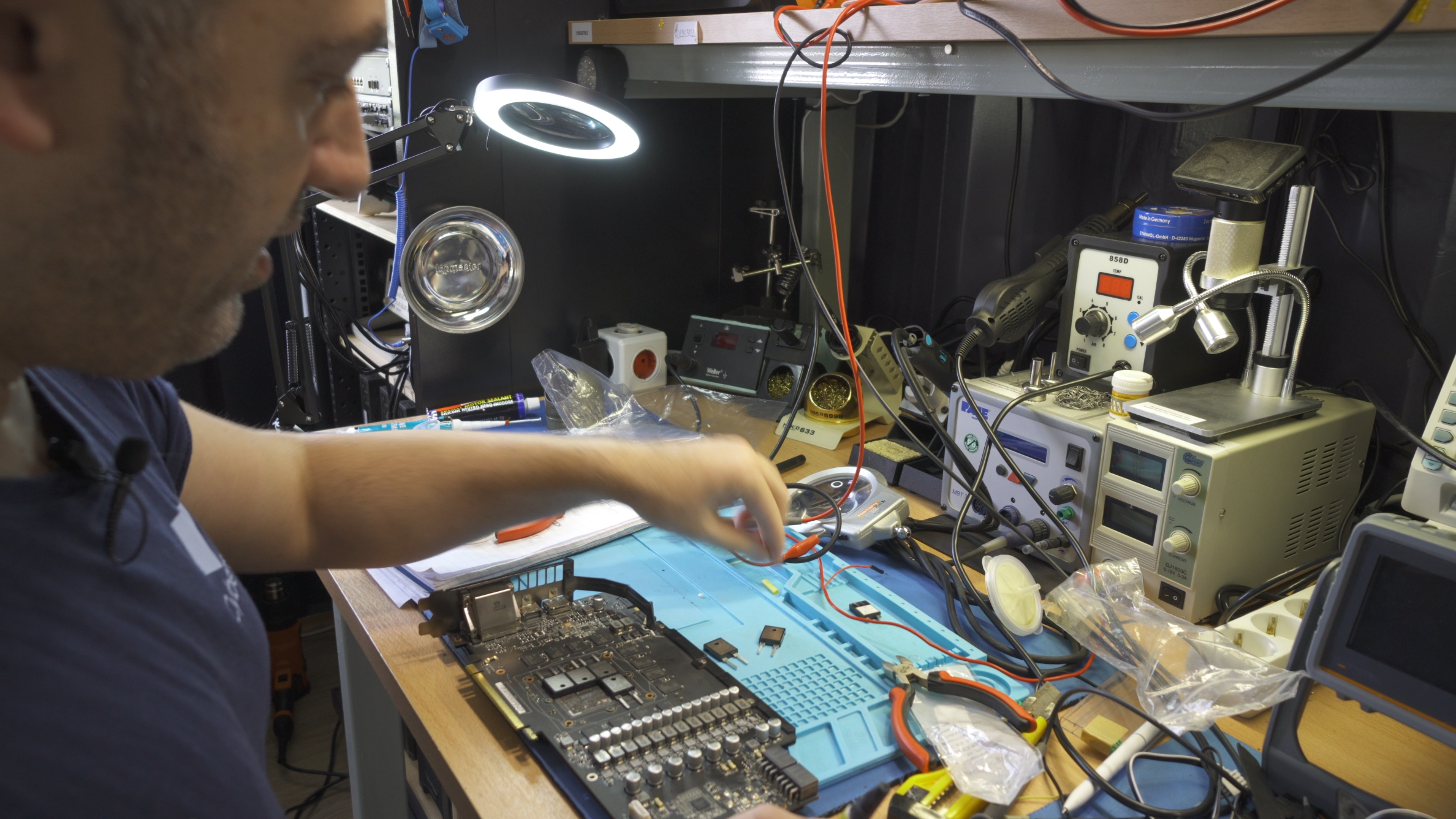

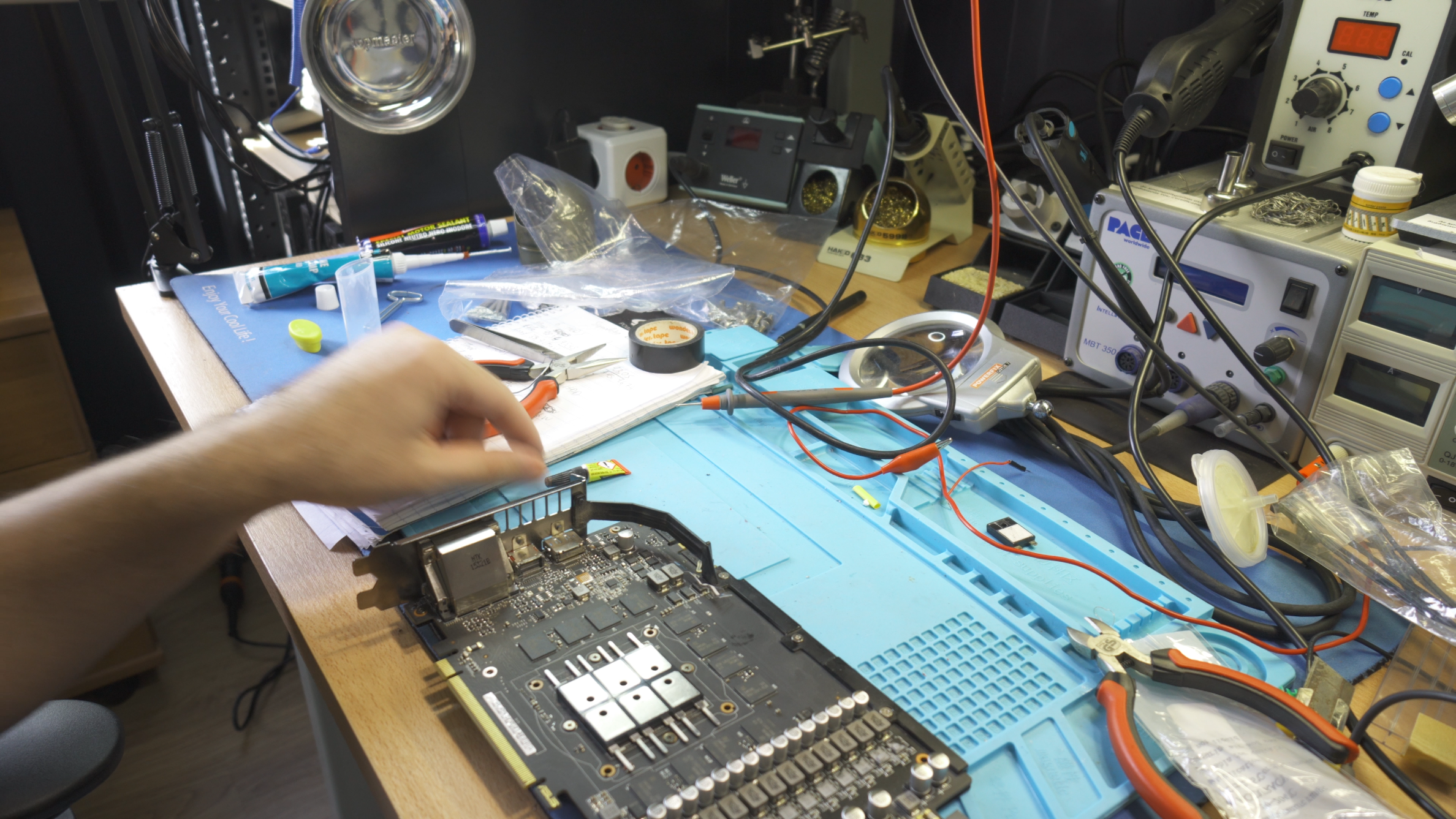

This is exactly why we decided to develop a fully custom system that is used exclusively in our enclosure testing to address all of the above. We have already developed something similar for our heat sink tests and the accuracy achieved is impressive. We now had two options: we could either design something from scratch or use a real system and adapt it to our needs. Since we don’t have a CNC machine in our workshop and 3D printing was out of the question, we decided to find some suitable PC parts and modify them for our needs. This has two advantages, because you can use a real system and the costs are much lower in the end.

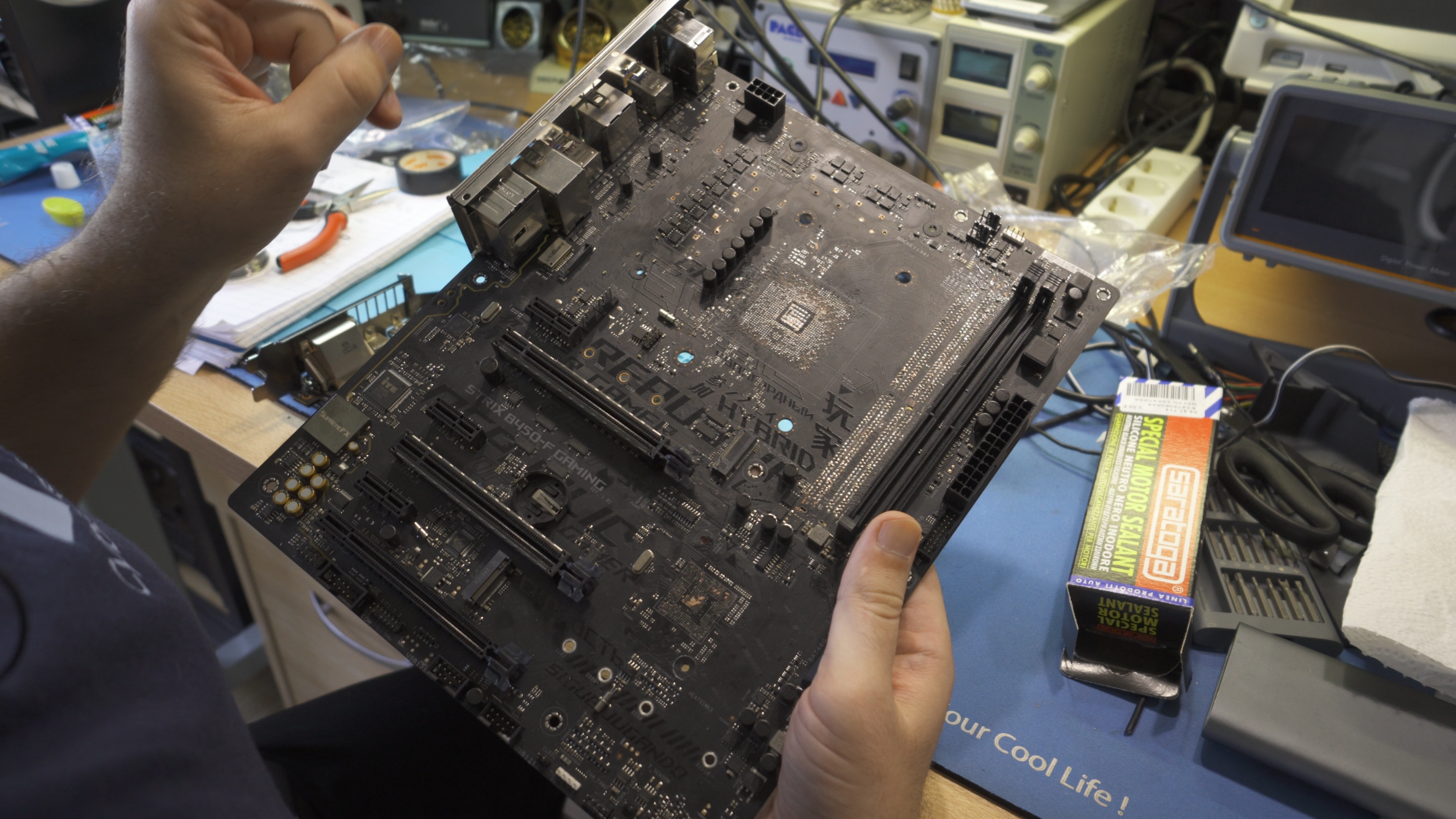

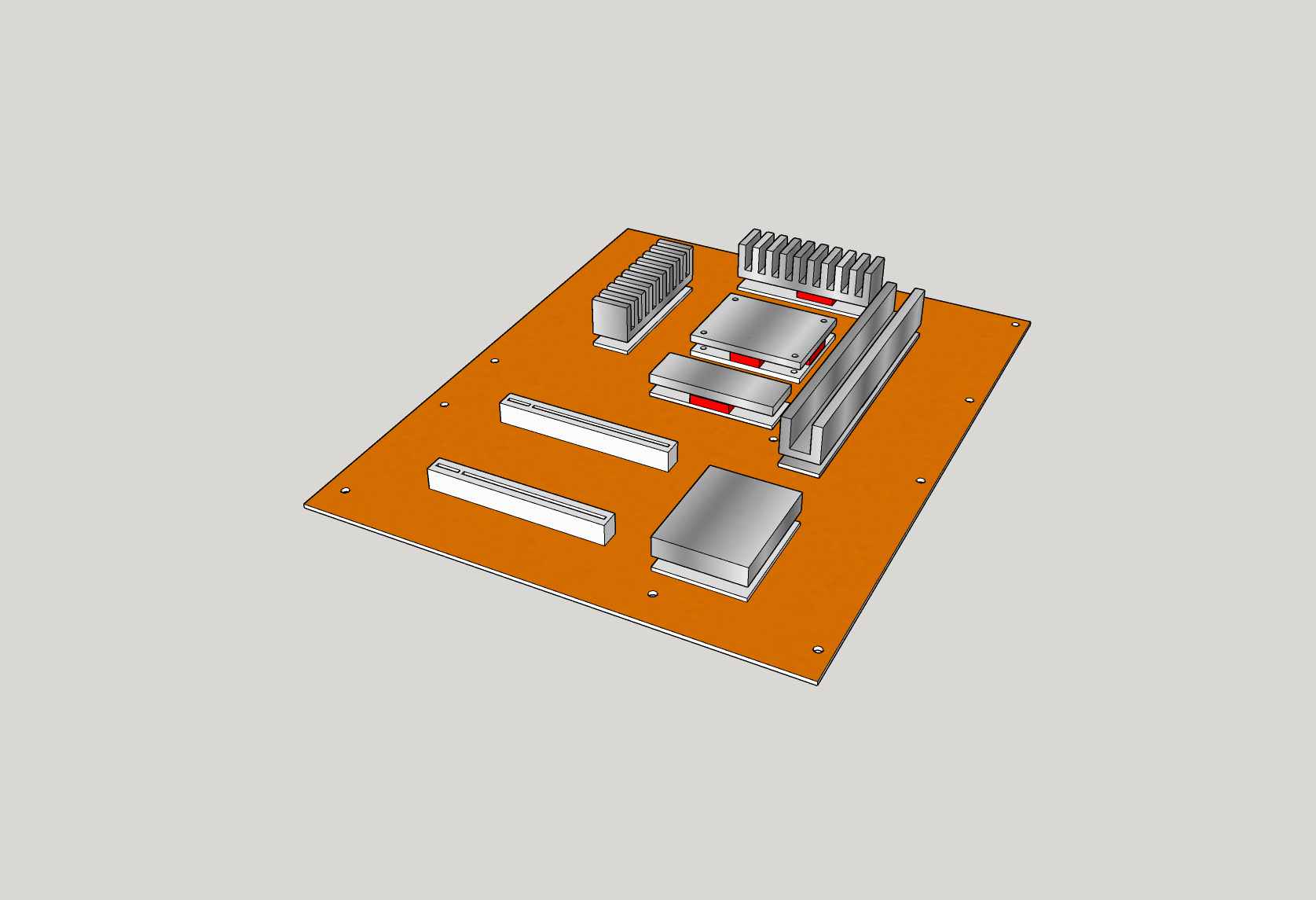

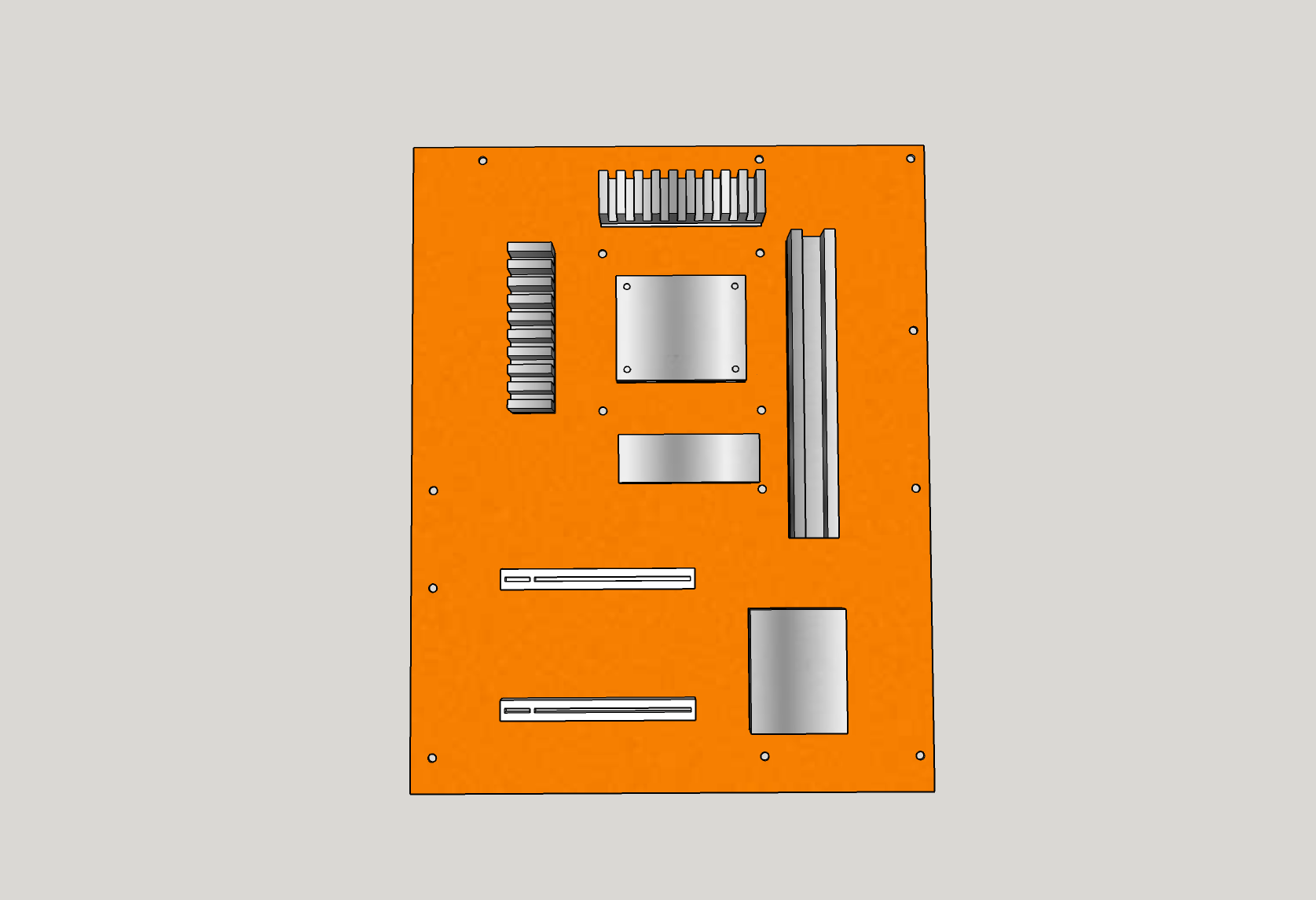

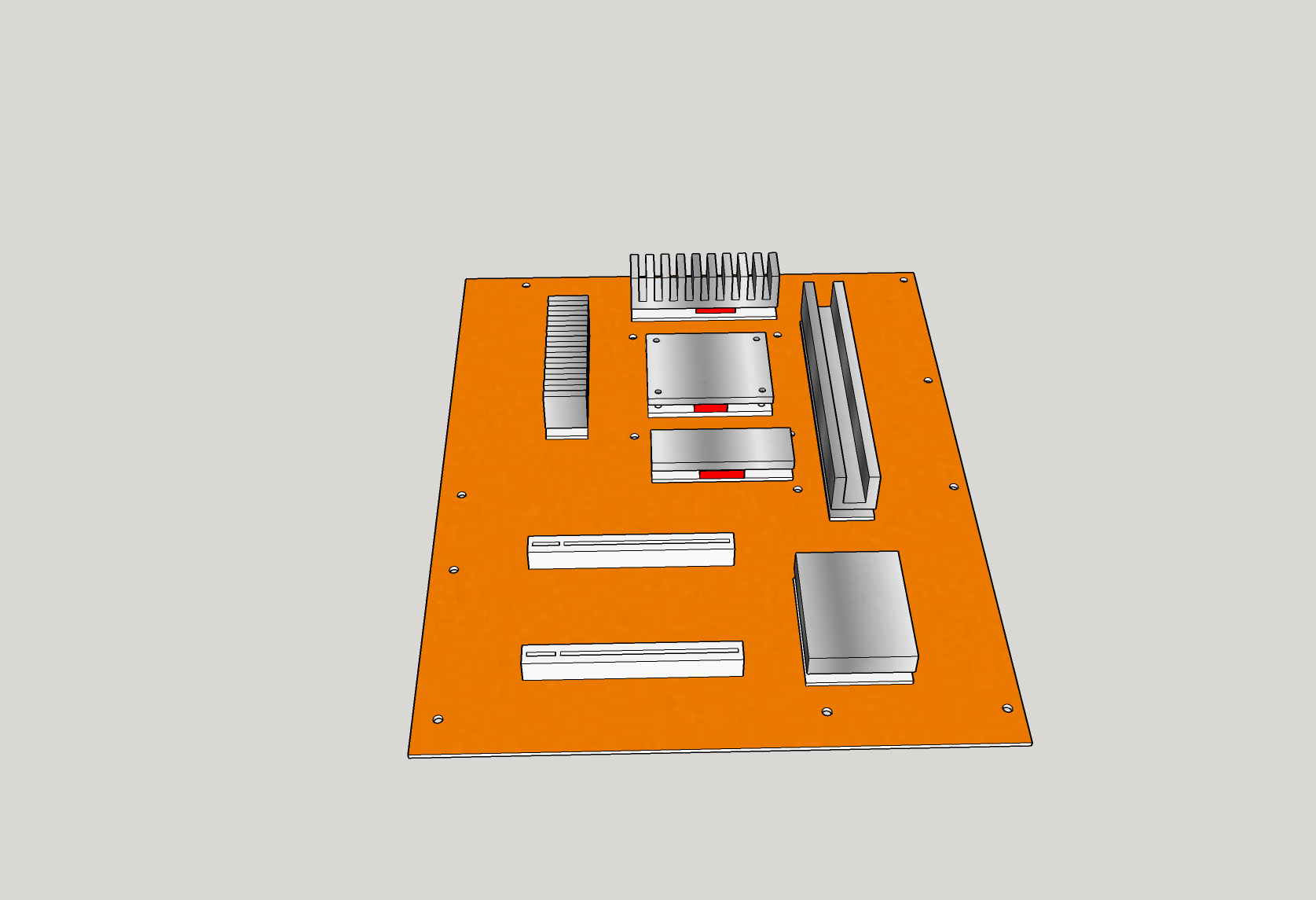

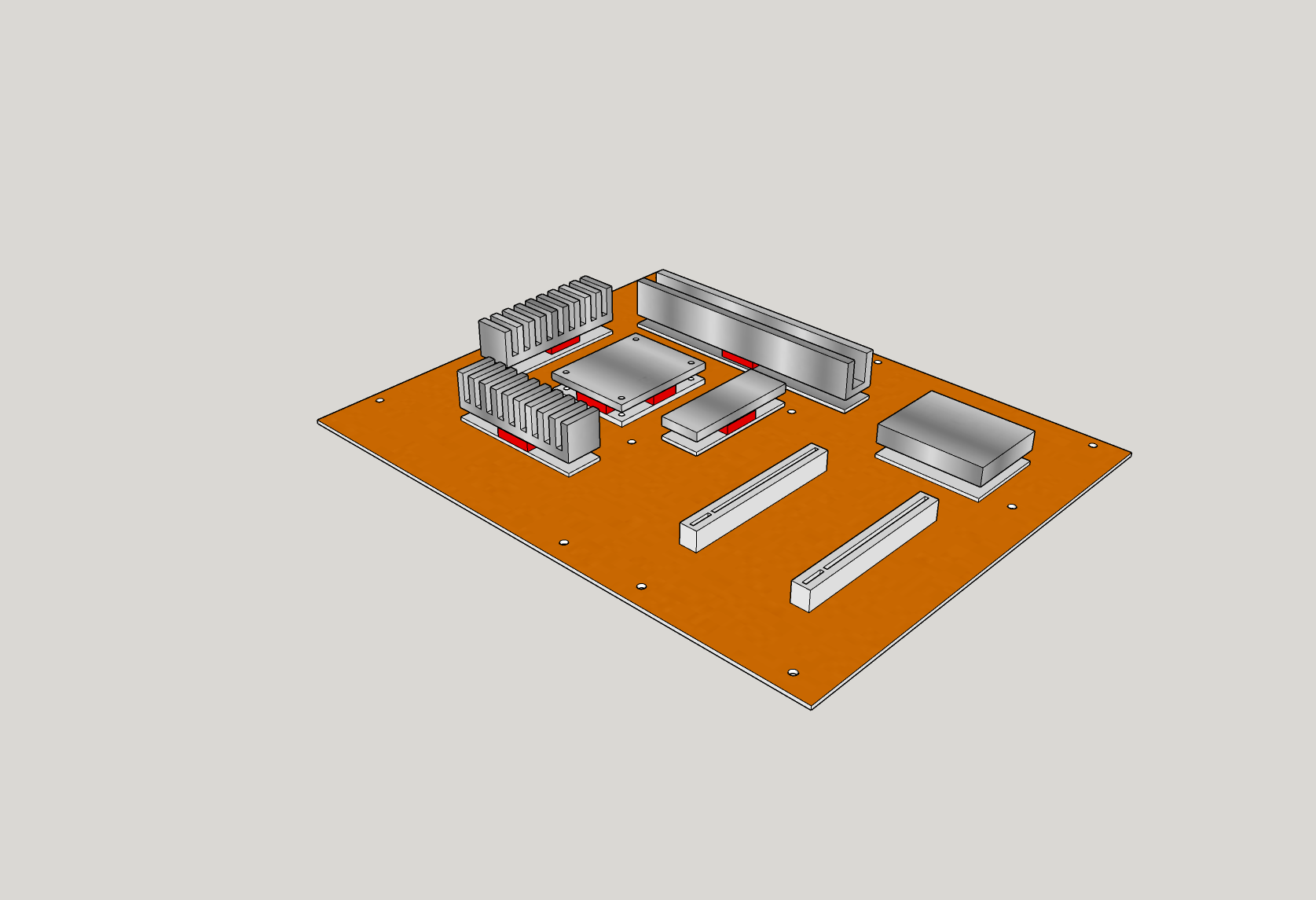

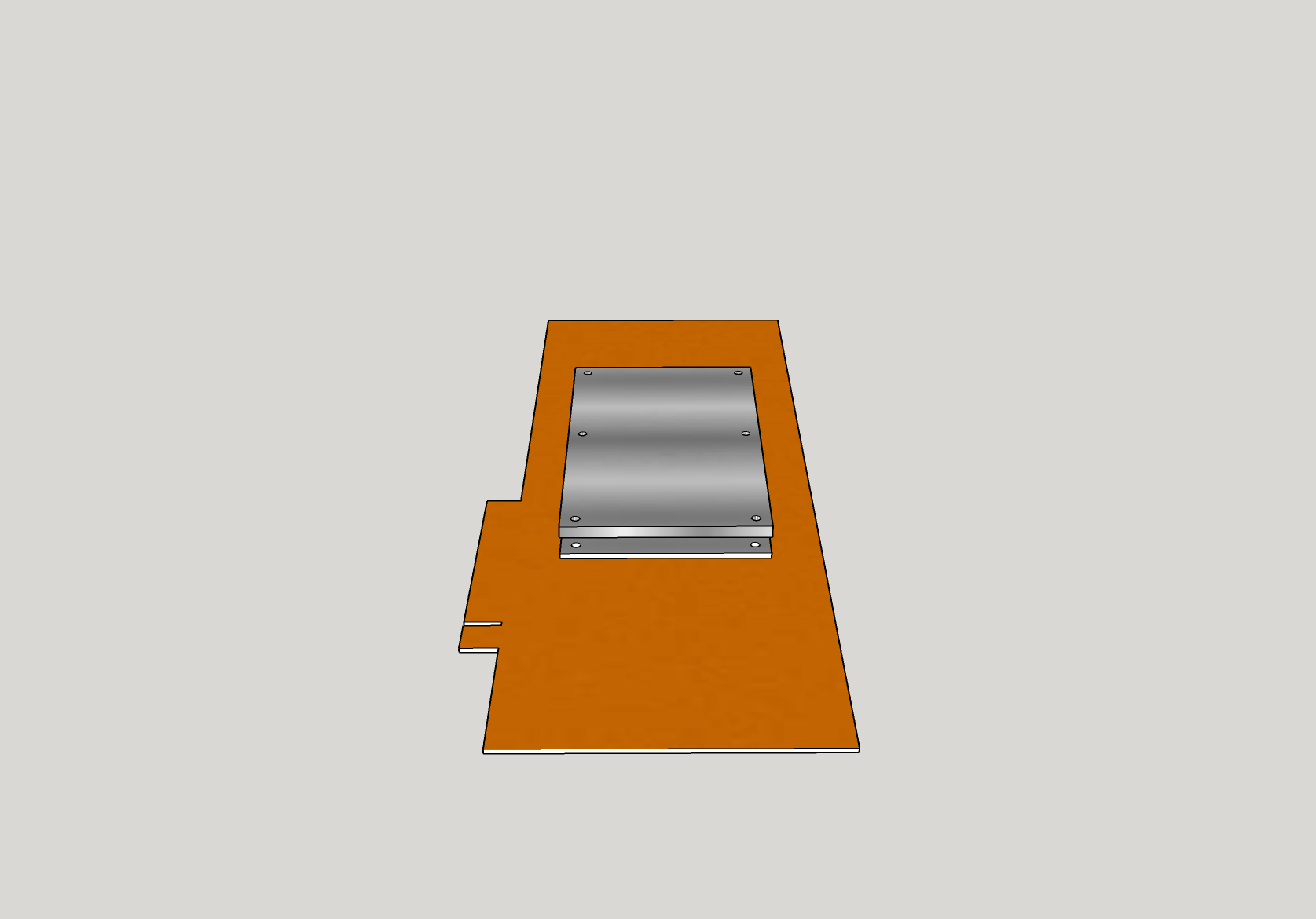

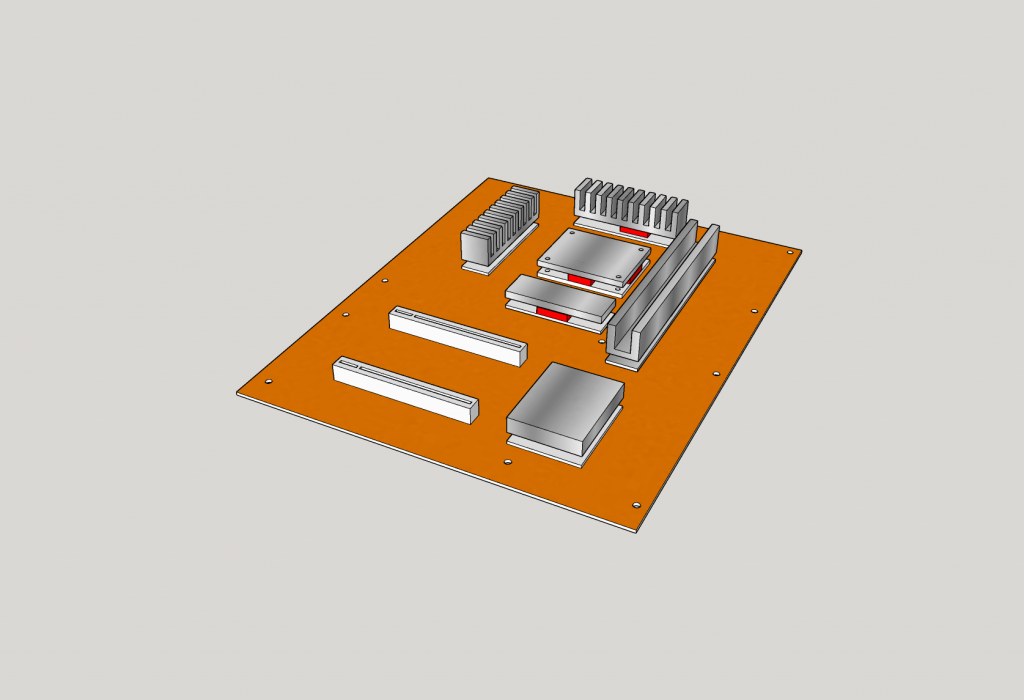

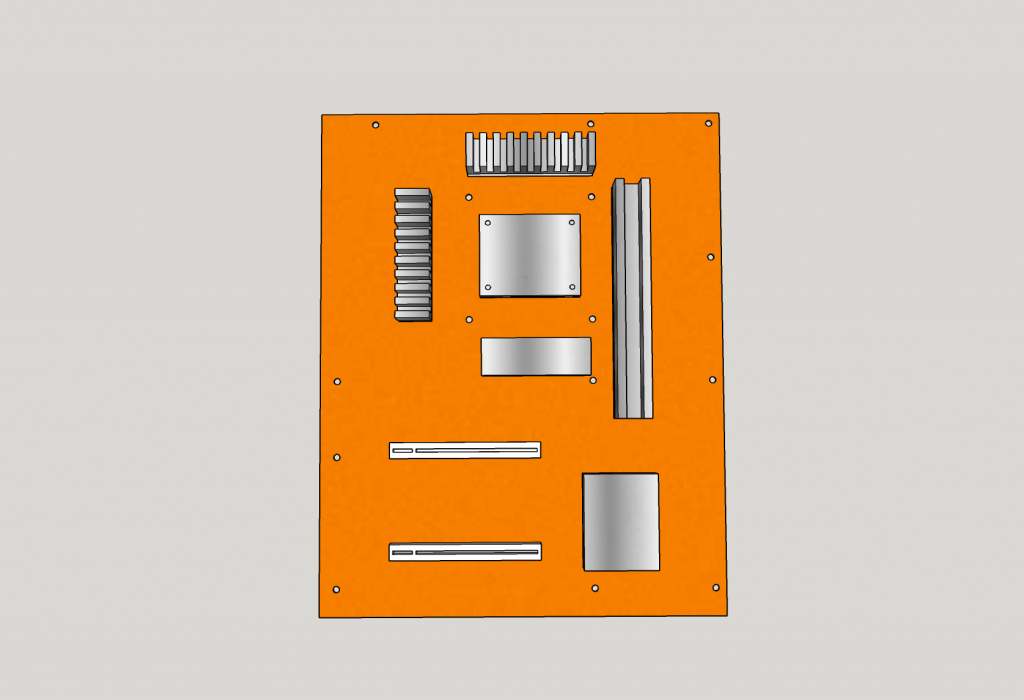

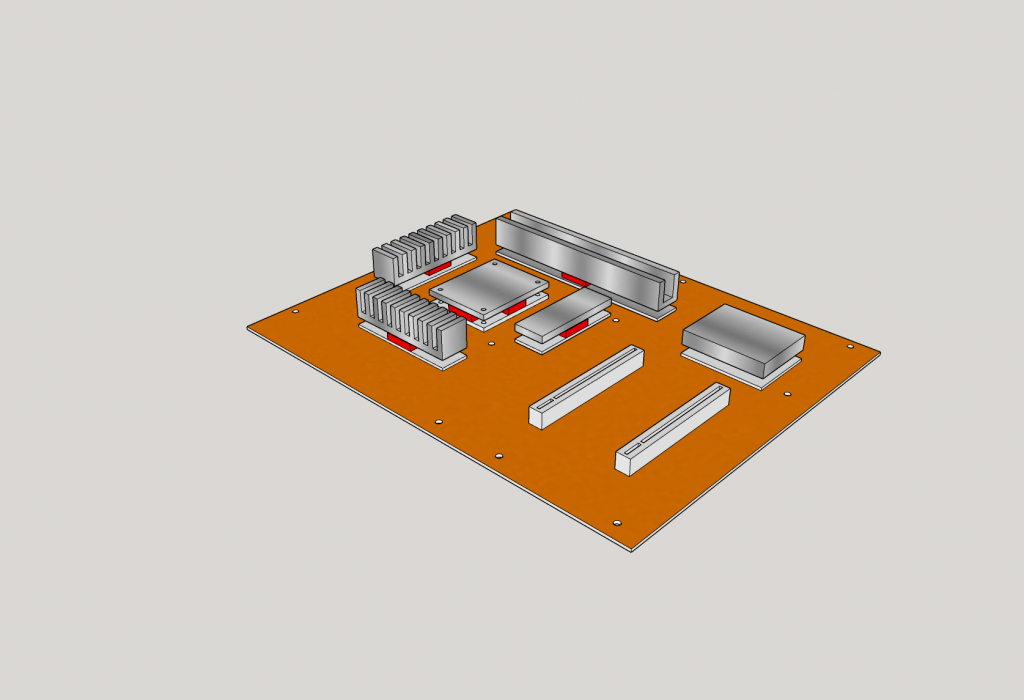

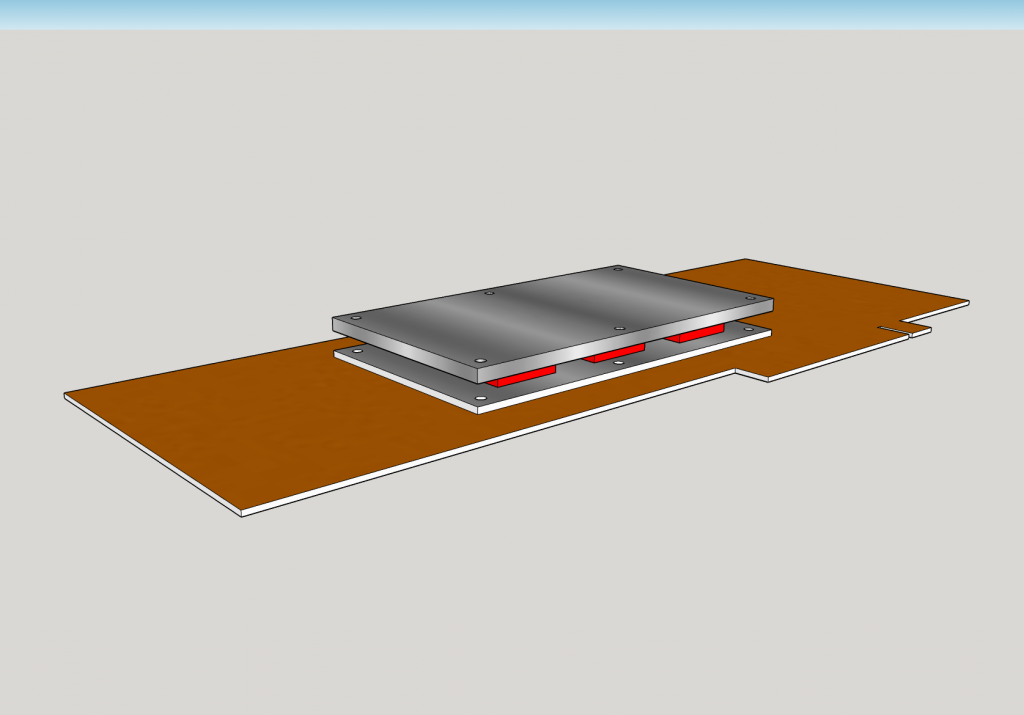

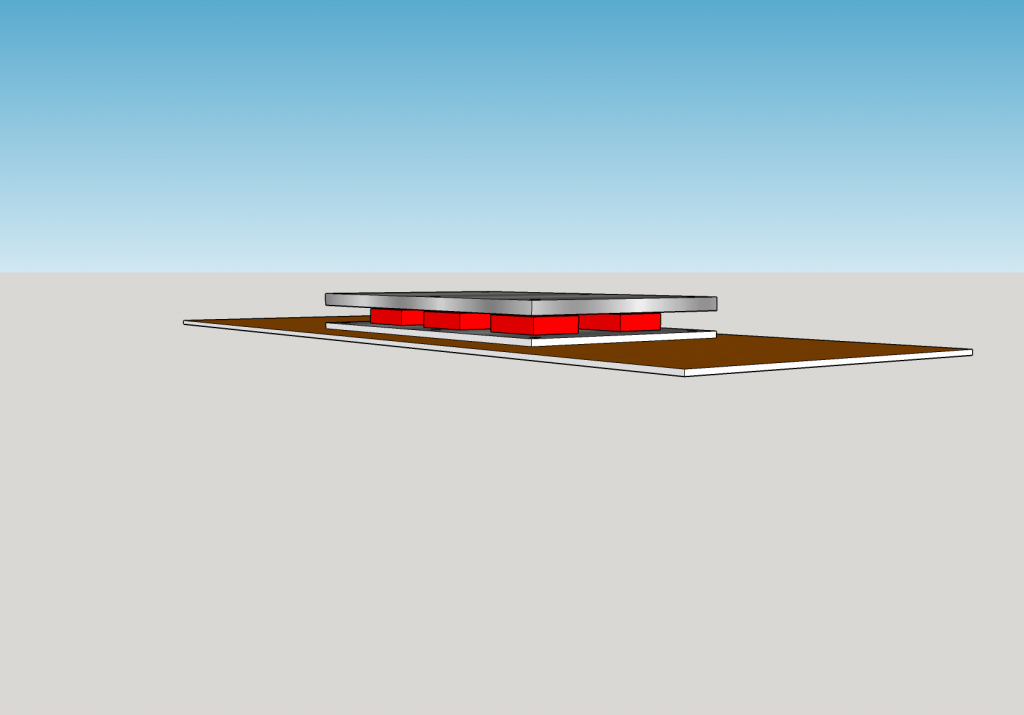

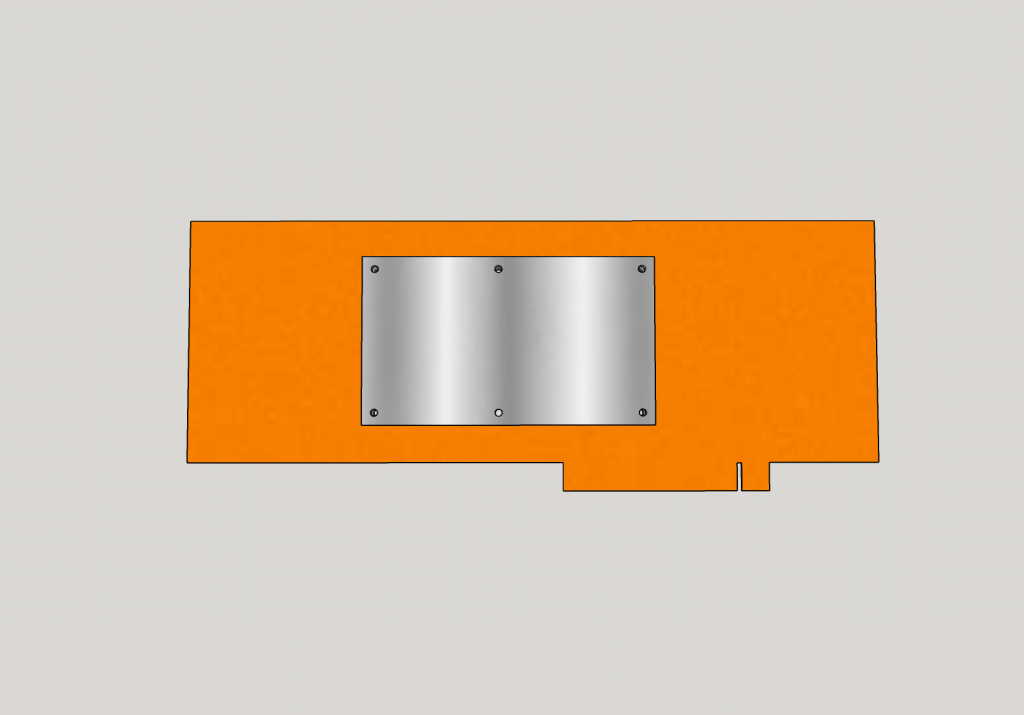

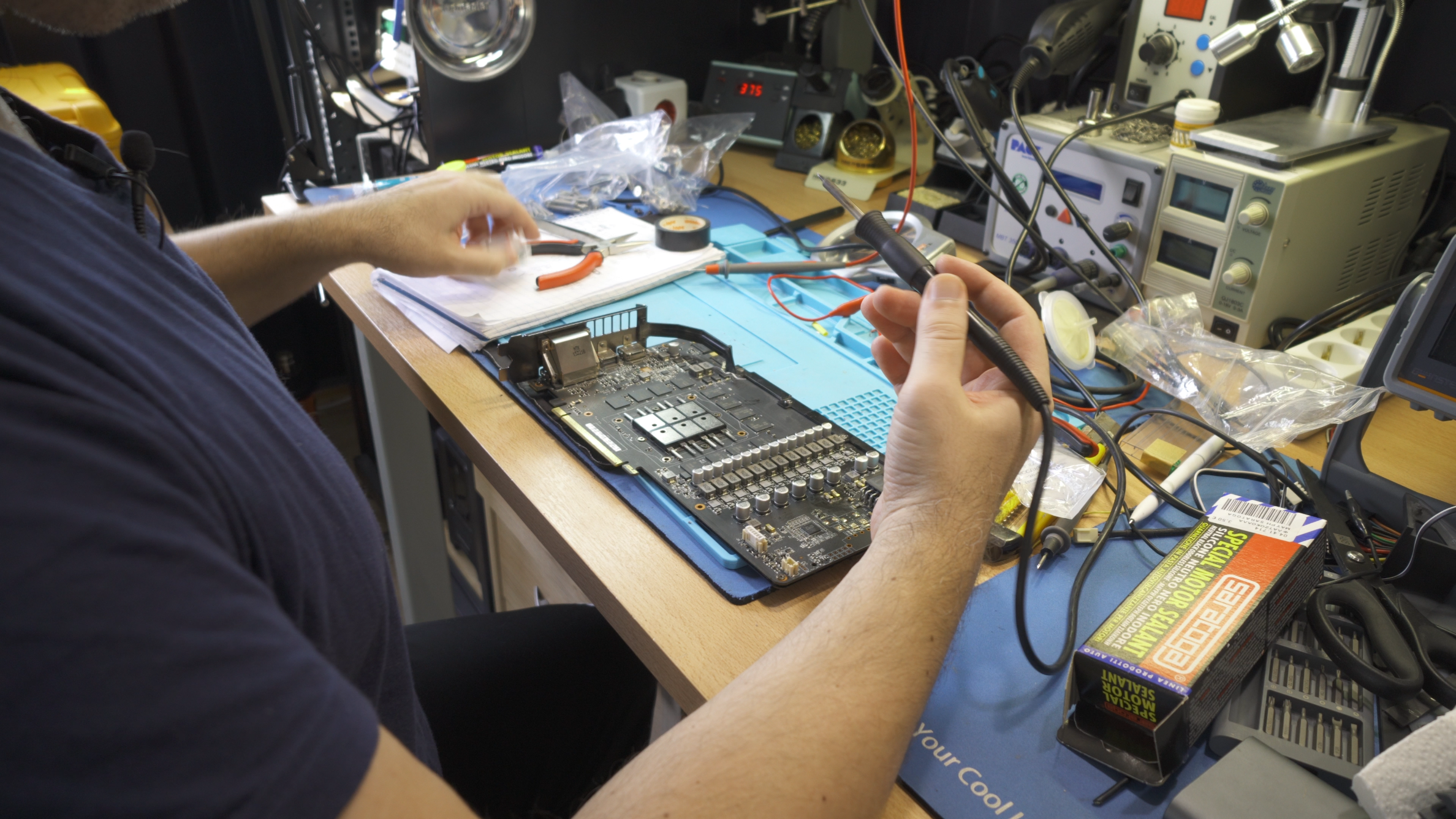

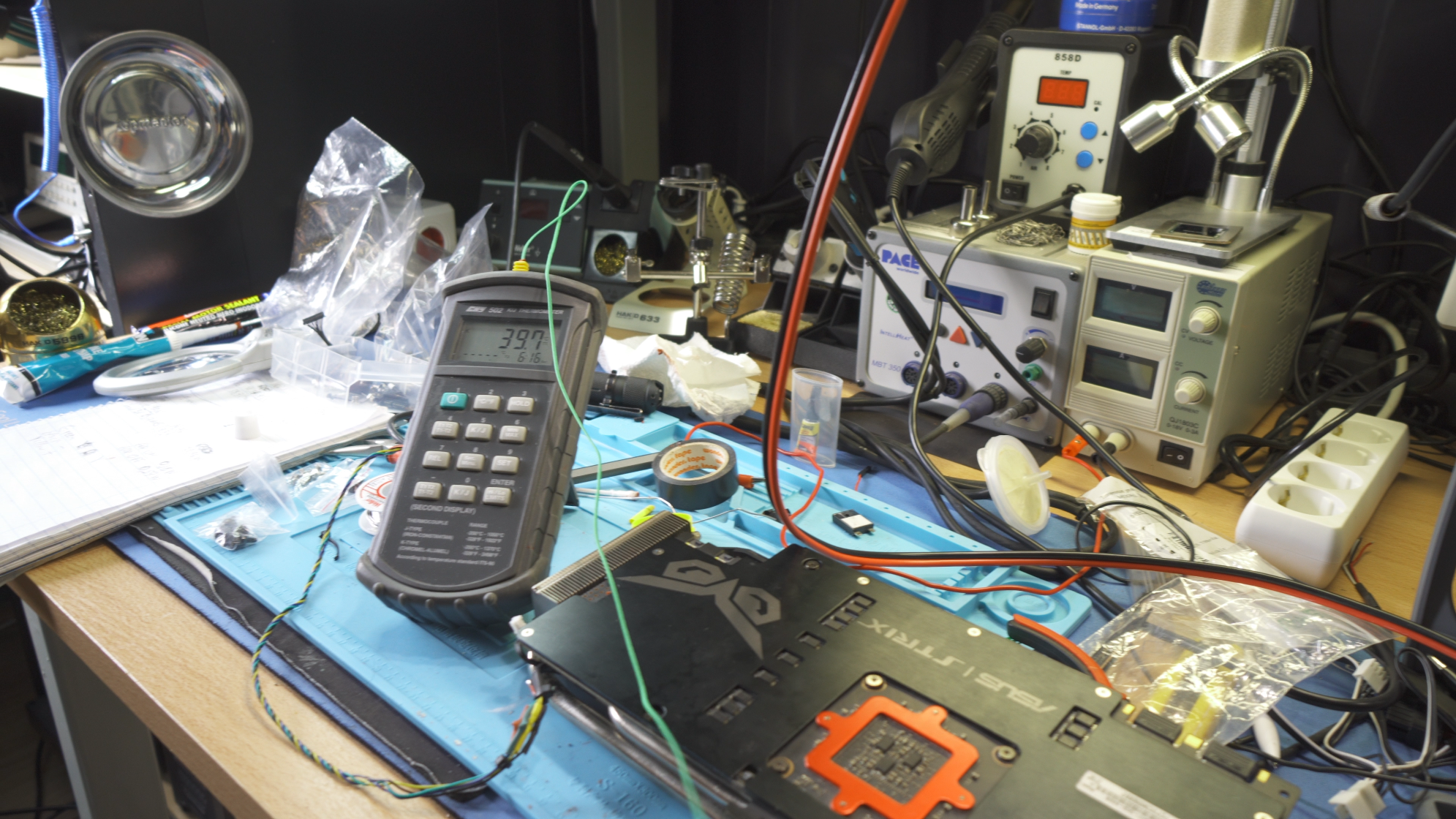

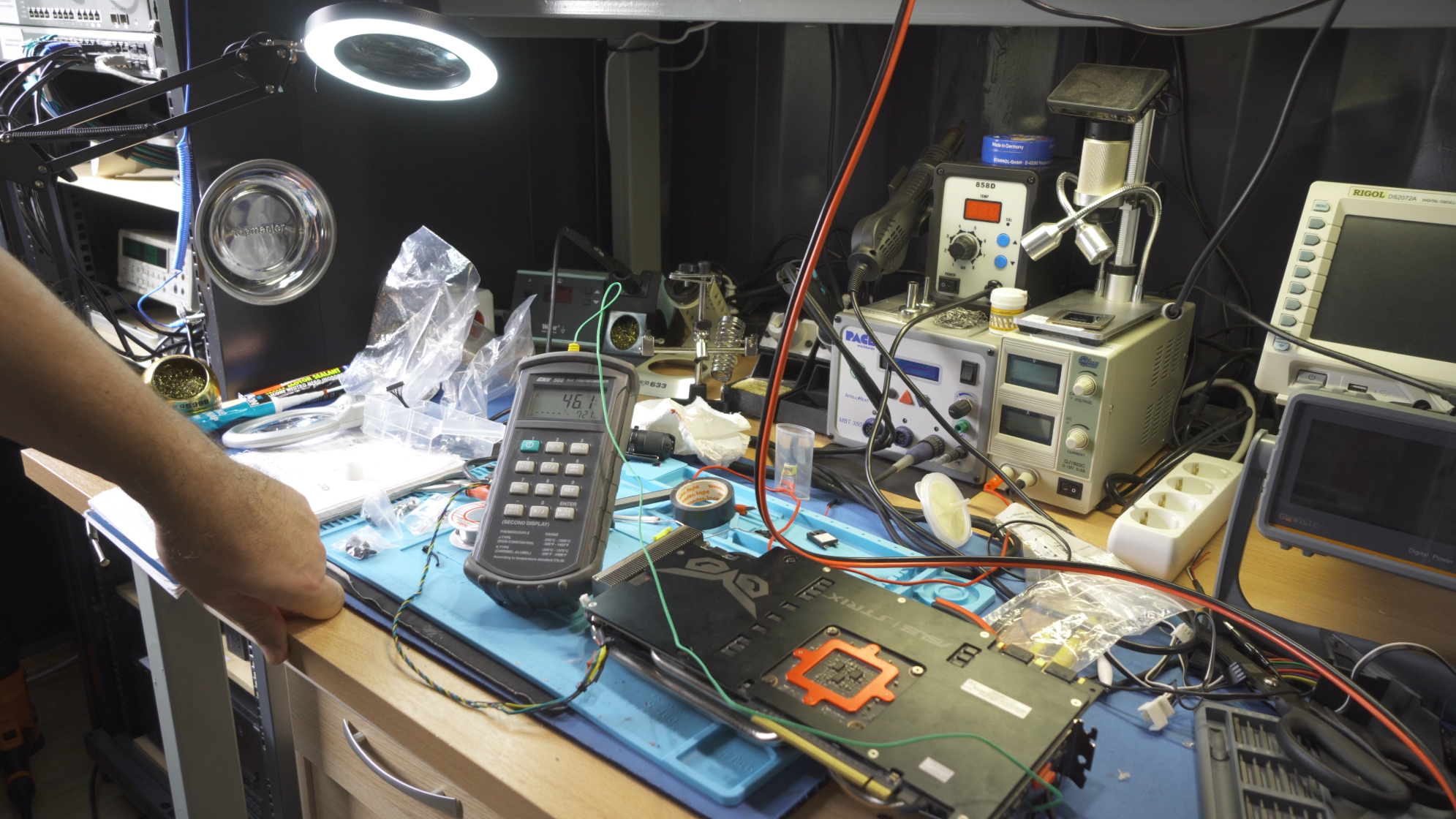

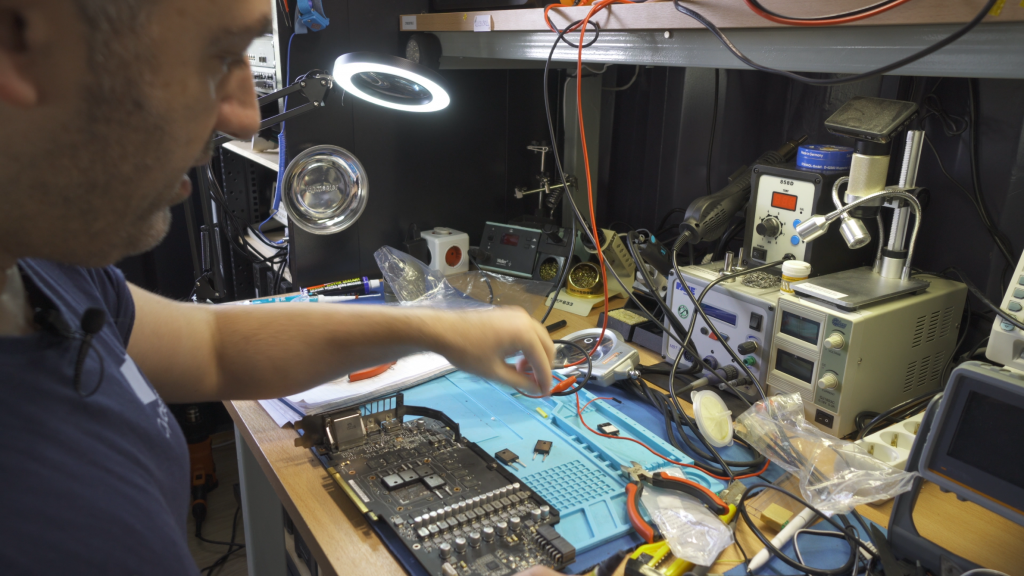

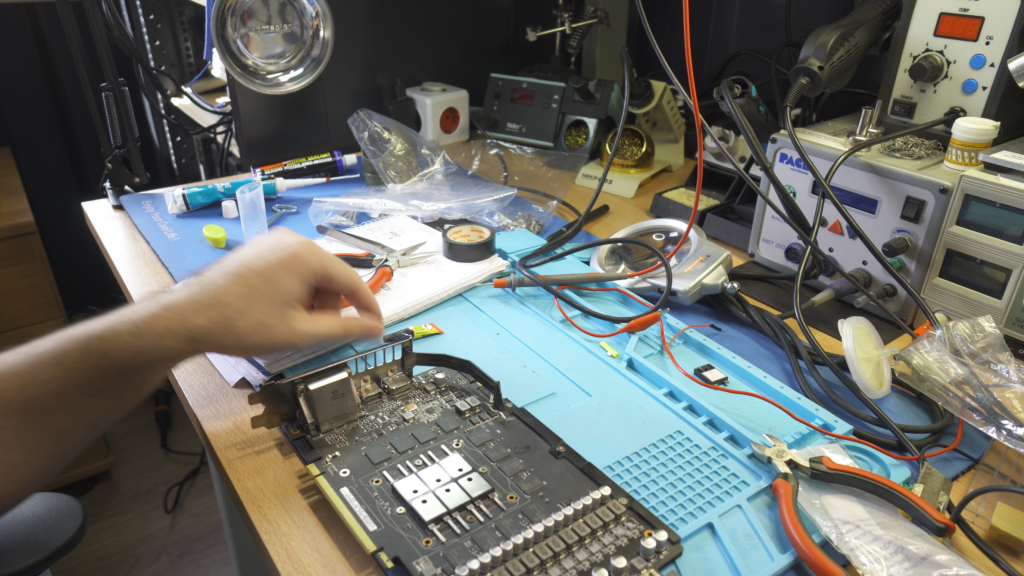

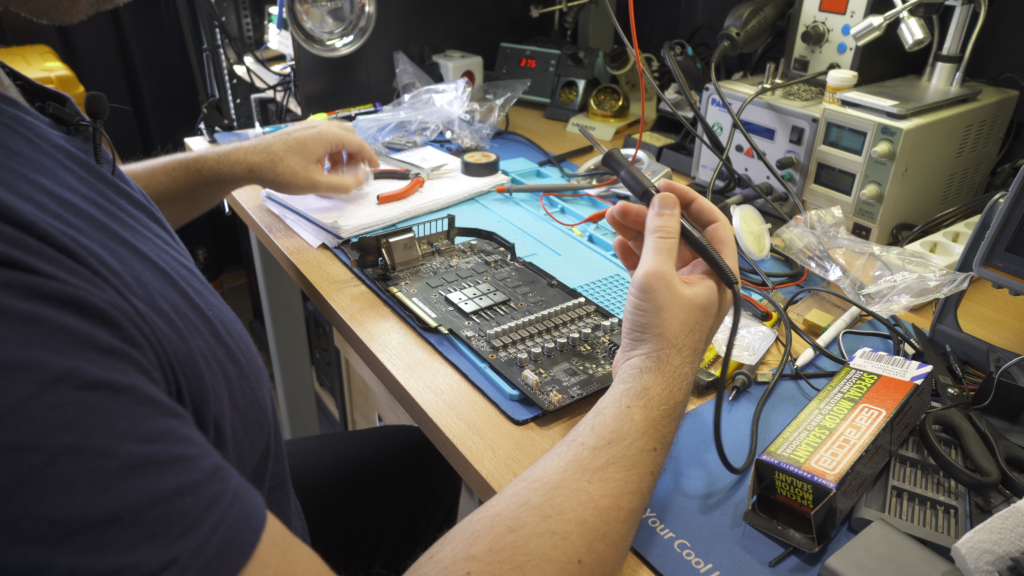

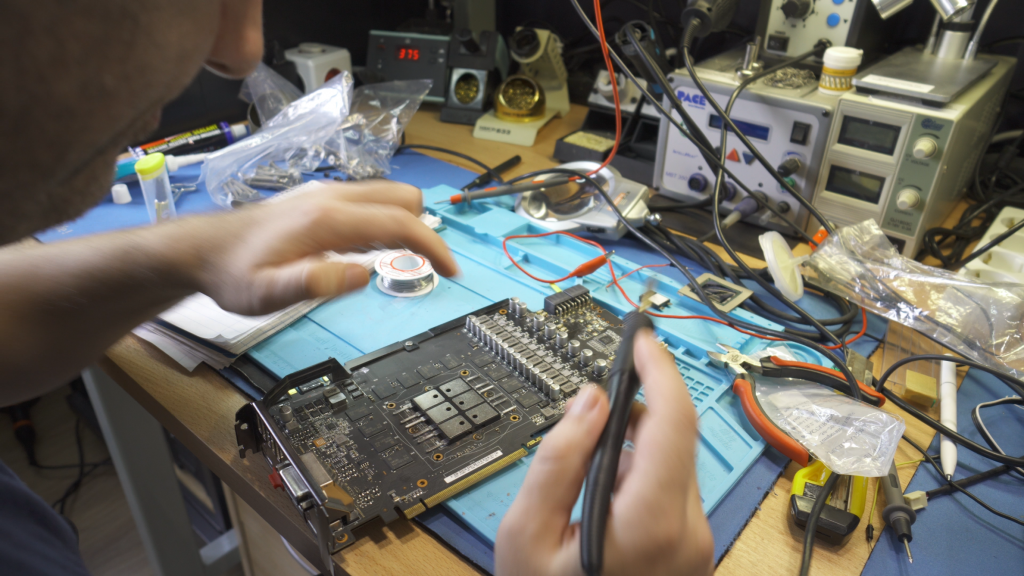

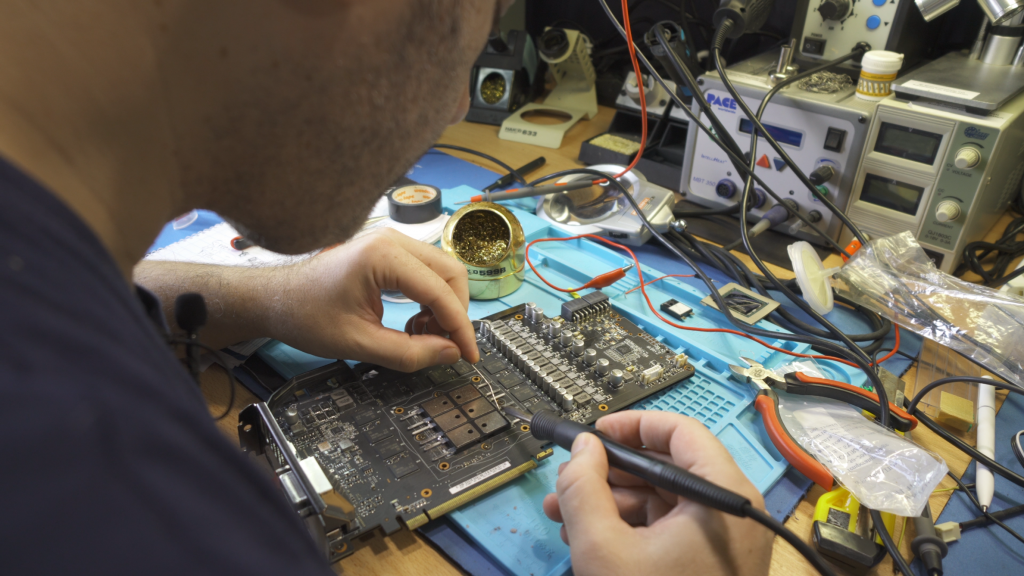

We started with a broken motherboard that has sufficiently strong heat sinks. We replaced all the FETs and chips under these heat sinks with special, high-performance resistors: our heaters. Simulating the CPU is not easy, because you have to remove the socket and squeeze several resistors together in its place. With a lot of effort, we cleared the area and installed six 140 W resistors where the CPU socket used to be. To cool the emulated CPU, we will use a standard heat sink. Besides the CPU socket, appropriately sized resistors are installed at the VRMs, RAM slots, chipset and NVMe slot. We will additionally modify an HDD. The loads are adjustable in all parts and the CPU load can be up to 600 W if required!

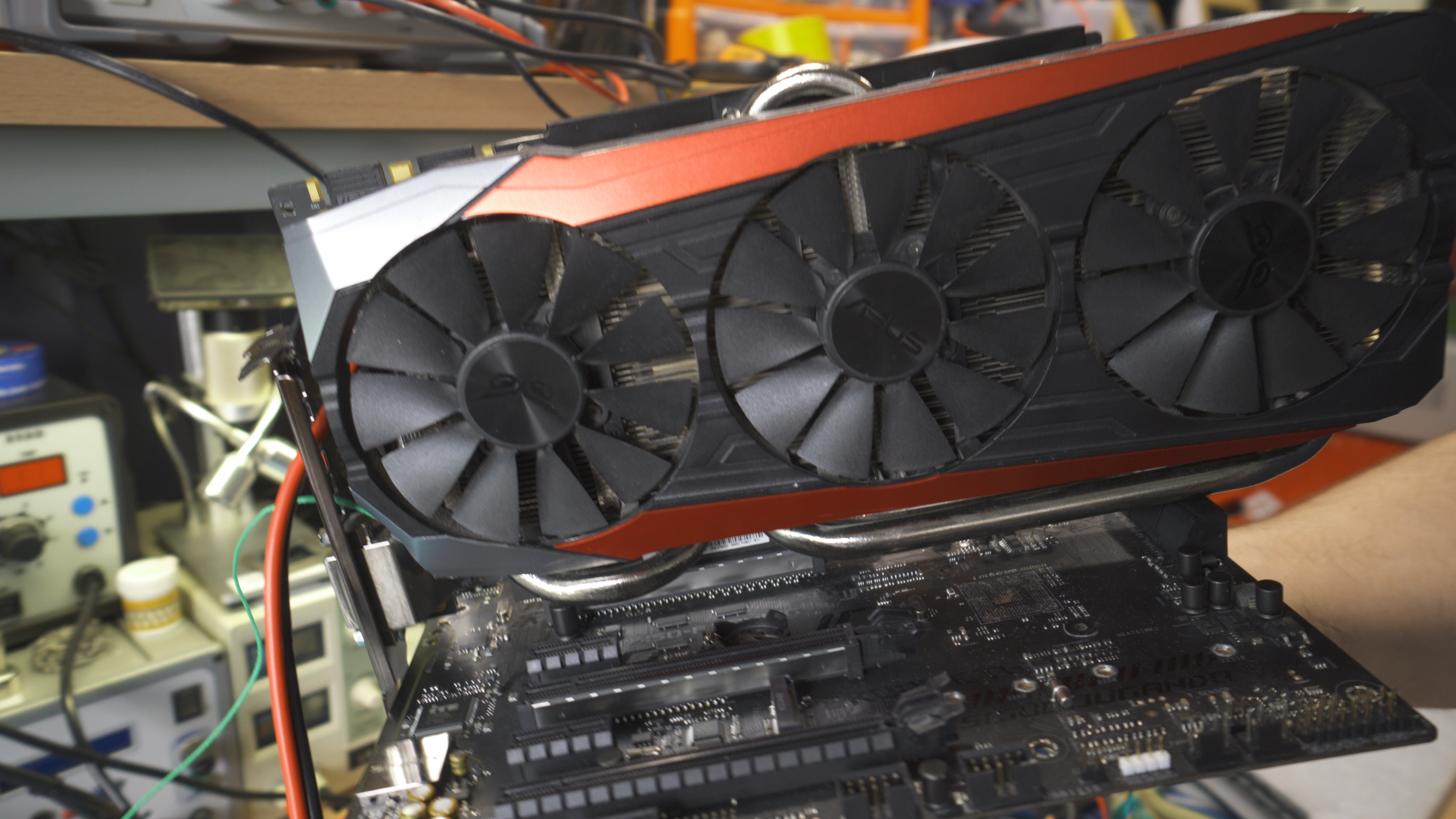

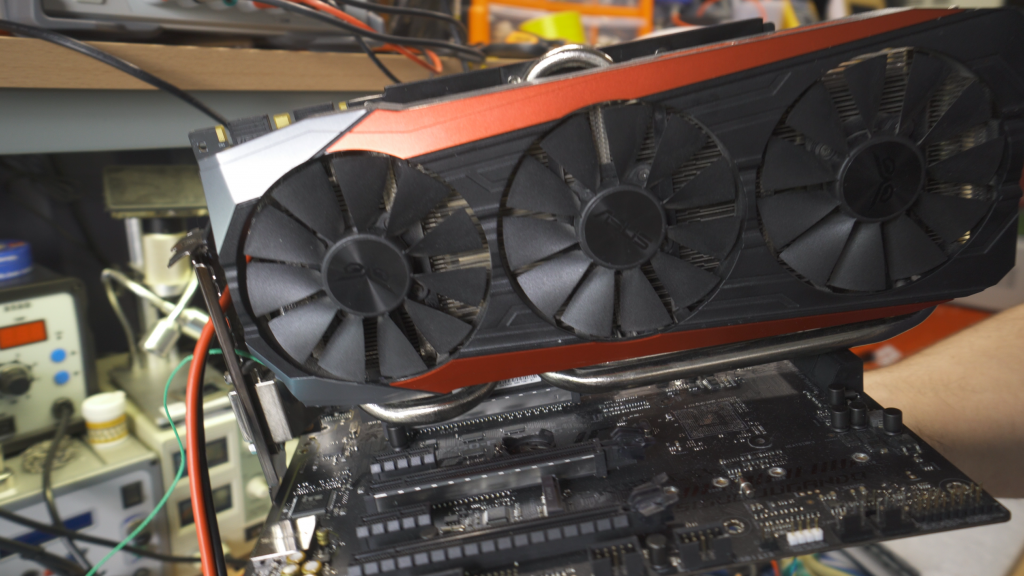

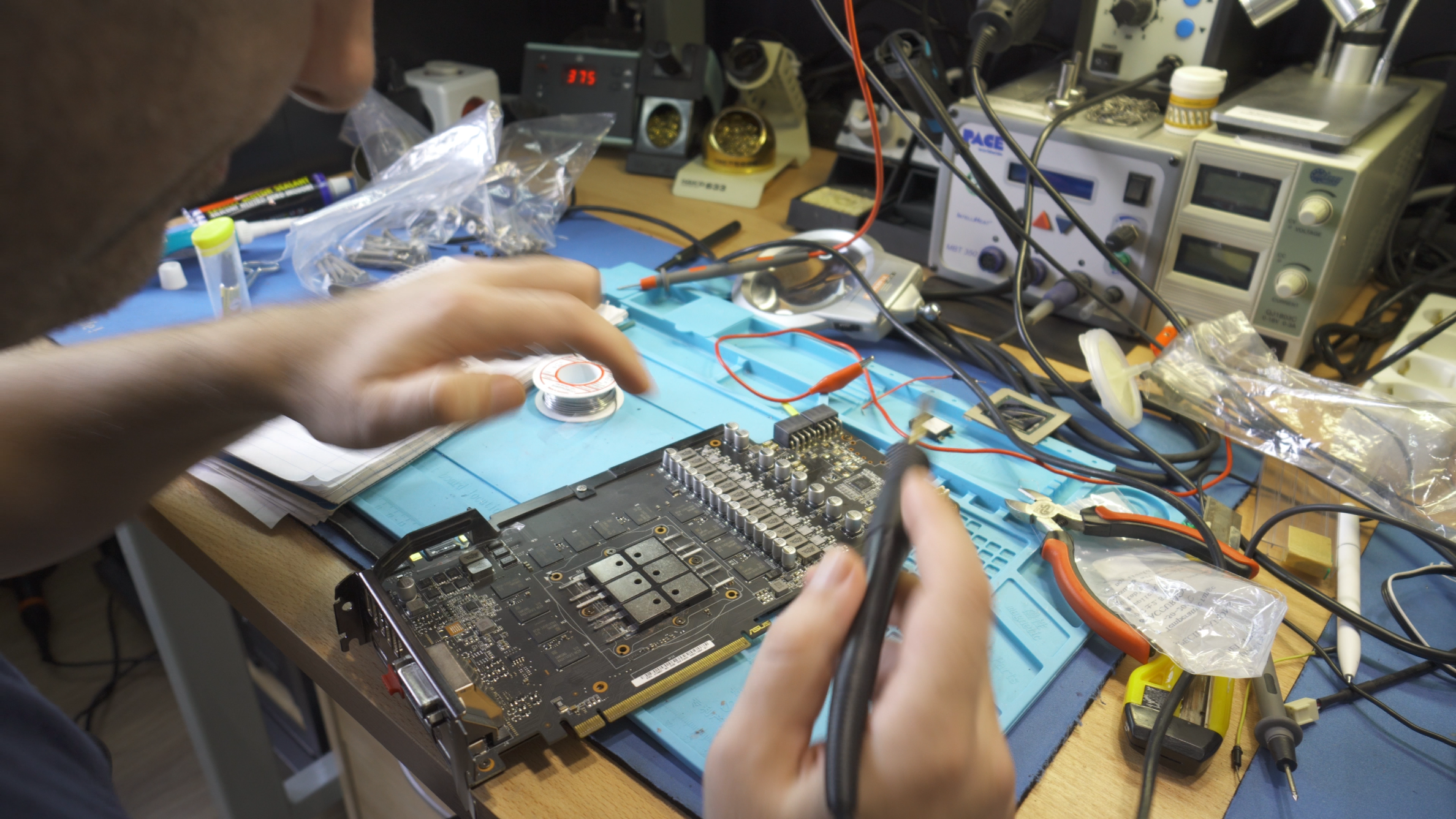

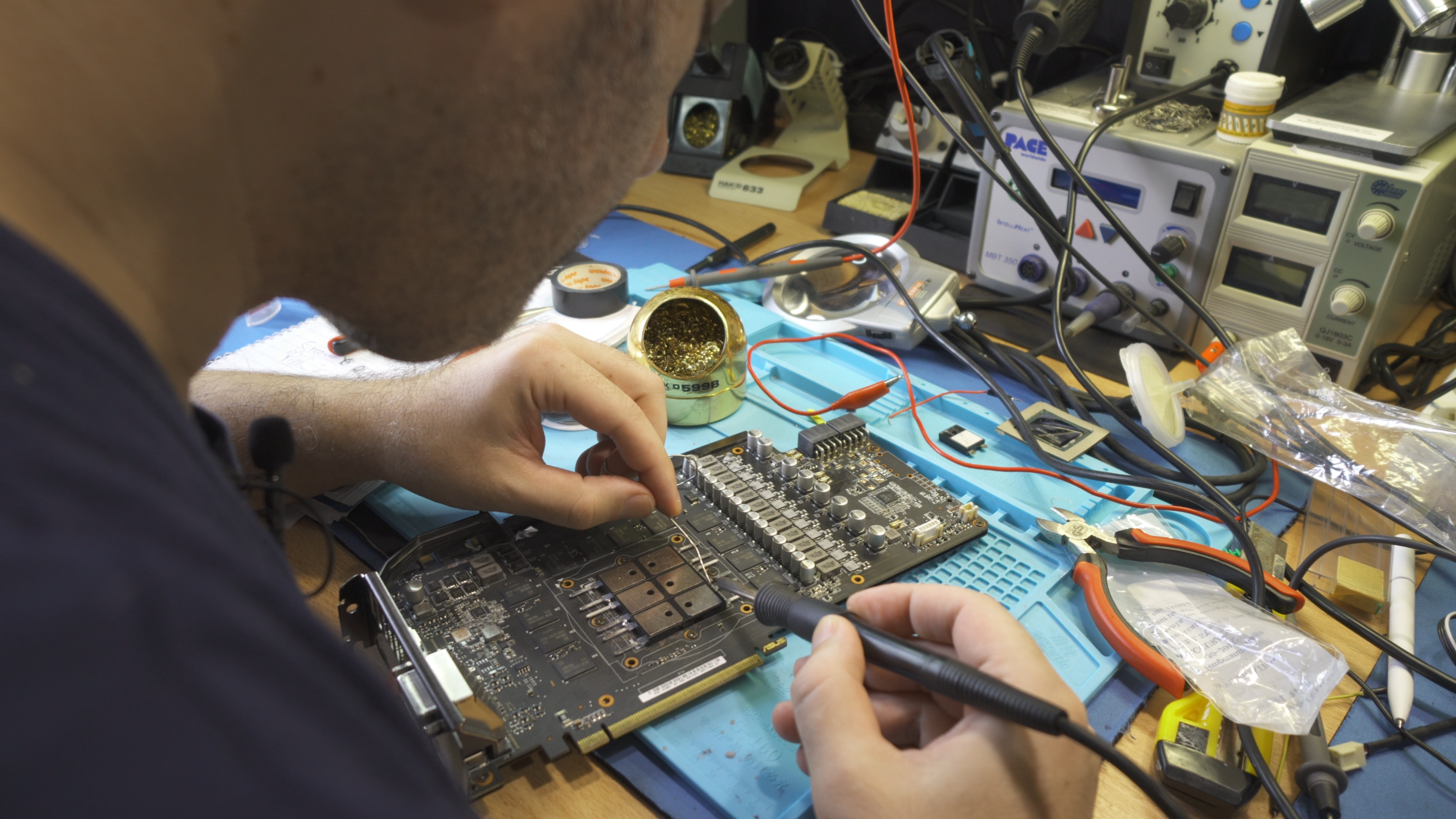

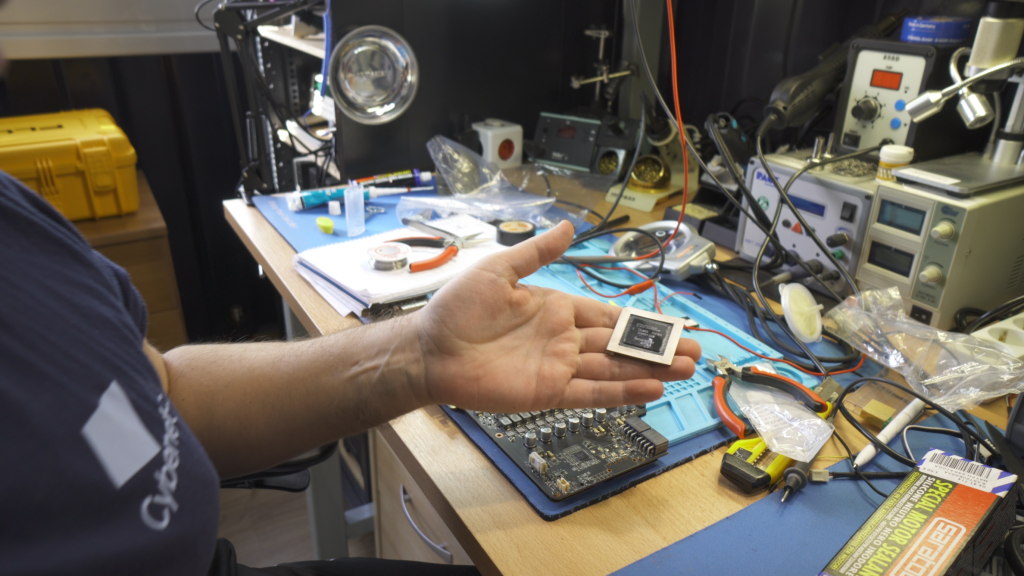

In addition to the motherboard, we also “butchered” a broken graphics card, from which we removed the GPU and installed several power resistors in its place, which can also provide up to 600W of load if needed. Is that enough? Yes, it should.

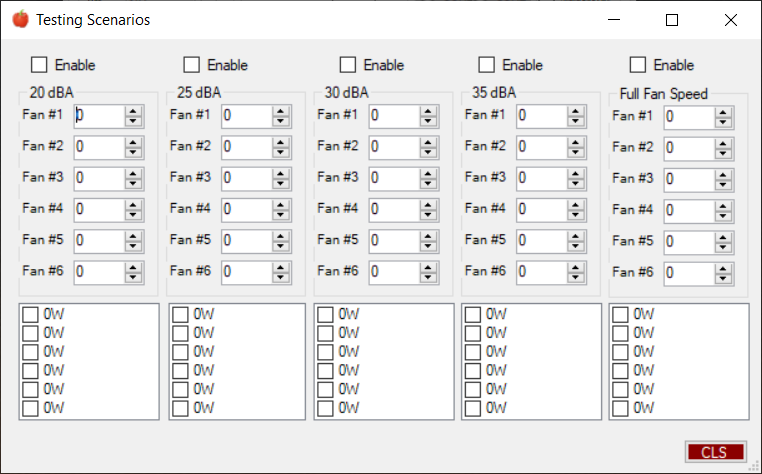

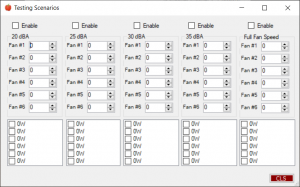

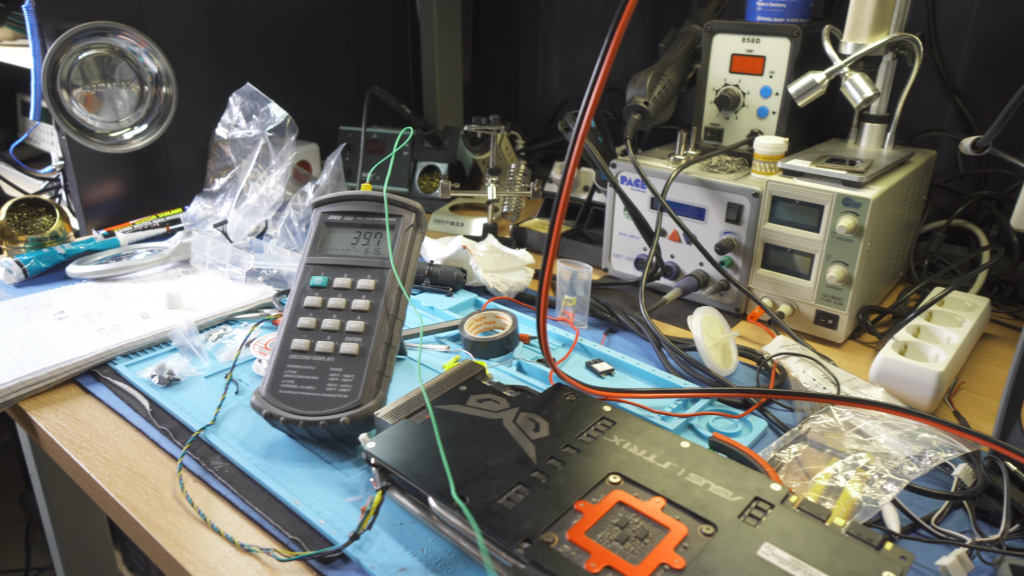

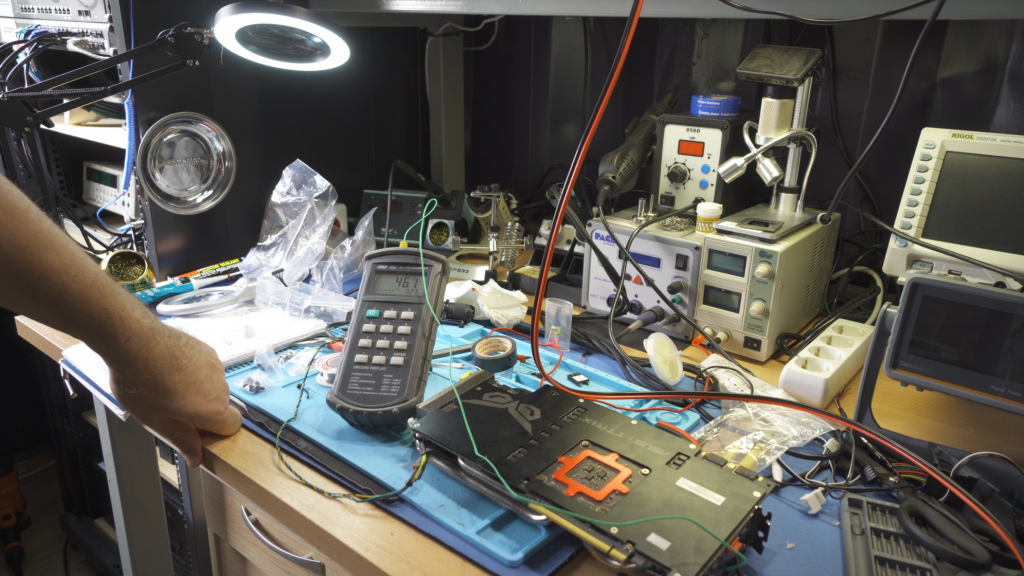

The CPU and GPU loads are controlled by a powerful and highly accurate lab power supply (AimTTi QPX600DP) capable of delivering up to 1200W combined. That’s an insanely high load, and we’ll probably never use it to its full potential. We plan to use different load combinations for the CPU and GPU. For example, we could use three loads for the CPU (100W, 200W and 250W) and three or more for the GPU (150W, 250W and 350W). With three each, that gives nine possible load combinations, and if we add three or four fan speed scenarios for the case fans (25 dBA, 30 dBA, 35 dBA and full fan speed), the total can go up to 36 test variations!

Now if you assume that each test lasts at least 15 minutes and add a 5-minute idle period after each test to allow all the parts to cool down, the total time for all test sessions is 36 × 20 = 720 minutes, or a whopping 12 hours! For this reason, you simply need a fully automated test suite that controls and monitors everything. One major issue we had was automatic fan speed control and monitoring, but fortunately the Corsair Commander Pro proved to be easy to hack and very capable of the task. Please forgive this outrage, it was just too tempting.
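The test matrix and time budget described above can be reproduced with a short script. The load and fan values are the example figures from the text. The variable names are made up for this sketch and do not come from the actual test software.

```python
from itertools import product

cpu_loads_w = [100, 200, 250]
gpu_loads_w = [150, 250, 350]
fan_modes = ["25 dBA", "30 dBA", "35 dBA", "full speed"]

test_minutes = 15  # minimum duration of one test
idle_minutes = 5   # cool-down period after each test

# Every combination of CPU load, GPU load and fan scenario is one test run.
test_plan = list(product(cpu_loads_w, gpu_loads_w, fan_modes))
total_minutes = len(test_plan) * (test_minutes + idle_minutes)

print(f"{len(test_plan)} runs, {total_minutes} minutes, {total_minutes / 60:.0f} hours")
```

Adding a single extra GPU load step would already push the plan to 48 runs and 16 hours, which shows how quickly the matrix grows.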

What’s coming next?

This was a brief presentation of what is to come soon. Follow us for more on Hardware Busters, and Igor, keep it rockin’! Together with igorslab.de we will enter into a kind of strategic partnership and thus make the measured data available to the readership. I am sure that especially the readers of this site will appreciate scientific methodology and a professional approach. This will not and should not replace “normal” case testing. But it will add robust facts to the tester’s perspective that would otherwise have taken extreme effort to determine on your own.

We will certainly extend this partnership to the measurement and evaluation of power supplies, as Cybenetics has been certifying and measuring power supplies for many years. A suitable interface still has to be implemented, but once it is, this will be a win-win situation for all parties involved, readers included of course. And the Hardware Busters YouTube channel is always worth a visit!
