Today’s article is really more of a by-product: while preparing for the Ampere launch I not only renewed and significantly expanded the test system, but also reworked all the metrics and charts for the evaluation. This is exactly where today’s test starts, because an evaluation of this depth was not possible before, and it took what felt like forever until the evaluation software for my measuring station was finally finished.

Of course there will again be high-resolution measurements for Ampere with 8 analog channels and the two oscilloscopes in master-slave mode, because I want to know where the spikes originate, how Boost and the power delivery behave even in the microsecond range, and what effects this has on the power supply. There will be a separate article on all these details before Ampere, so that I can link to it later when testing the new cards. But today, even without amps and in the truest sense of the word, it is all about something green.

Can you really save energy with DLSS?

That is exactly the question I asked myself when, ahead of the coming launches, I benchmarked the current GeForce RTX cards in Ultra HD completely from scratch, this time already with the new, extended data set. The fact that a GeForce RTX 2080 Ti in Ultra HD with DLSS offers significantly more performance at the same or even slightly lower power consumption in some games is nothing new. But this time I also combined the power consumption of the whole graphics card (12 volt and 3.3 volt) with the package power of the GPU!

In detail, I see the frame-time progression as a curve on the same time axis as the high-resolution power consumption measurement, and I also get a very good average power consumption over the entire benchmark, which can vary considerably depending on the game, settings and scene. Being able to measure all cards uniformly and in a fully comparable way is almost priceless. If you then add the GPU and CPU values, you can even see how the respective graphics settings affect the CPU’s hunger for power.
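To make the idea of an averaged power consumption over the whole benchmark concrete, here is a minimal sketch. It is not my evaluation software, just an illustration that assumes timestamped power samples (the names `timestamps_s` and `power_w` are hypothetical) and integrates them with the trapezoidal rule, so unevenly spaced samples are weighted by time.

```python
def average_power(timestamps_s, power_w):
    """Return (time-weighted mean power in W, total energy in J).

    timestamps_s: sample times in seconds, ascending (assumed input format)
    power_w:      power reading in watts at each timestamp
    """
    if len(timestamps_s) != len(power_w) or len(timestamps_s) < 2:
        raise ValueError("need at least two matching samples")

    energy_j = 0.0
    for i in range(1, len(timestamps_s)):
        dt = timestamps_s[i] - timestamps_s[i - 1]
        # Trapezoid: mean of two neighbouring readings times the interval
        energy_j += 0.5 * (power_w[i] + power_w[i - 1]) * dt

    duration_s = timestamps_s[-1] - timestamps_s[0]
    return energy_j / duration_s, energy_j


if __name__ == "__main__":
    # Purely illustrative values, not measurements
    mean_w, energy_j = average_power([0, 1, 2], [100, 200, 100])
    print(mean_w, energy_j)
```

The energy in joules falls out of the same loop, which is handy when benchmark runs differ in length.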

So far, so theoretical. Visually, you hardly see any disadvantage when running DLSS 2.0, quite the contrary. Many details that disappear even at native UHD resolution are visible again with DLSS, even if you sometimes see more than the game developers put in. This is exactly where my experiment today starts. If you use the frame limiter to cap the FPS with DLSS at exactly the value you measured on average without DLSS, it is interesting to see how the power consumption of GPU and CPU changes even though you get the same result on screen. This works remarkably well with Shadow of the Tomb Raider, Control and MechWarrior 5, but not quite as elegantly with Wolfenstein Youngblood, since the latter game uses a kind of transitional solution between DLSS and DLSS 2.0. So the comparison of the versions also shows where things are heading!
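The logic of this experiment can be written down in a few lines. The sketch below is only an illustration with hypothetical function names: because both runs deliver the same average FPS, the difference in average power translates directly into energy per frame and into a percentage saving.

```python
def energy_per_frame(avg_power_w, avg_fps):
    """Joules spent per rendered frame: watts divided by frames per second."""
    if avg_fps <= 0:
        raise ValueError("avg_fps must be positive")
    return avg_power_w / avg_fps


def saving_percent(native_power_w, dlss_power_w):
    """Relative power saving of the capped DLSS run versus the native run."""
    if native_power_w <= 0:
        raise ValueError("native_power_w must be positive")
    return 100.0 * (native_power_w - dlss_power_w) / native_power_w


if __name__ == "__main__":
    # Placeholder numbers for illustration only, NOT measured results
    native_w, dlss_w, fps_cap = 200.0, 150.0, 50.0
    print(energy_per_frame(native_w, fps_cap))  # J per frame, native
    print(energy_per_frame(dlss_w, fps_cap))    # J per frame, DLSS capped
    print(saving_percent(native_w, dlss_w))     # saving in percent
```

The same two functions work for the GPU alone, the CPU alone, or the sum of both, as long as both runs use the same FPS cap.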

How can you actually measure something like that?

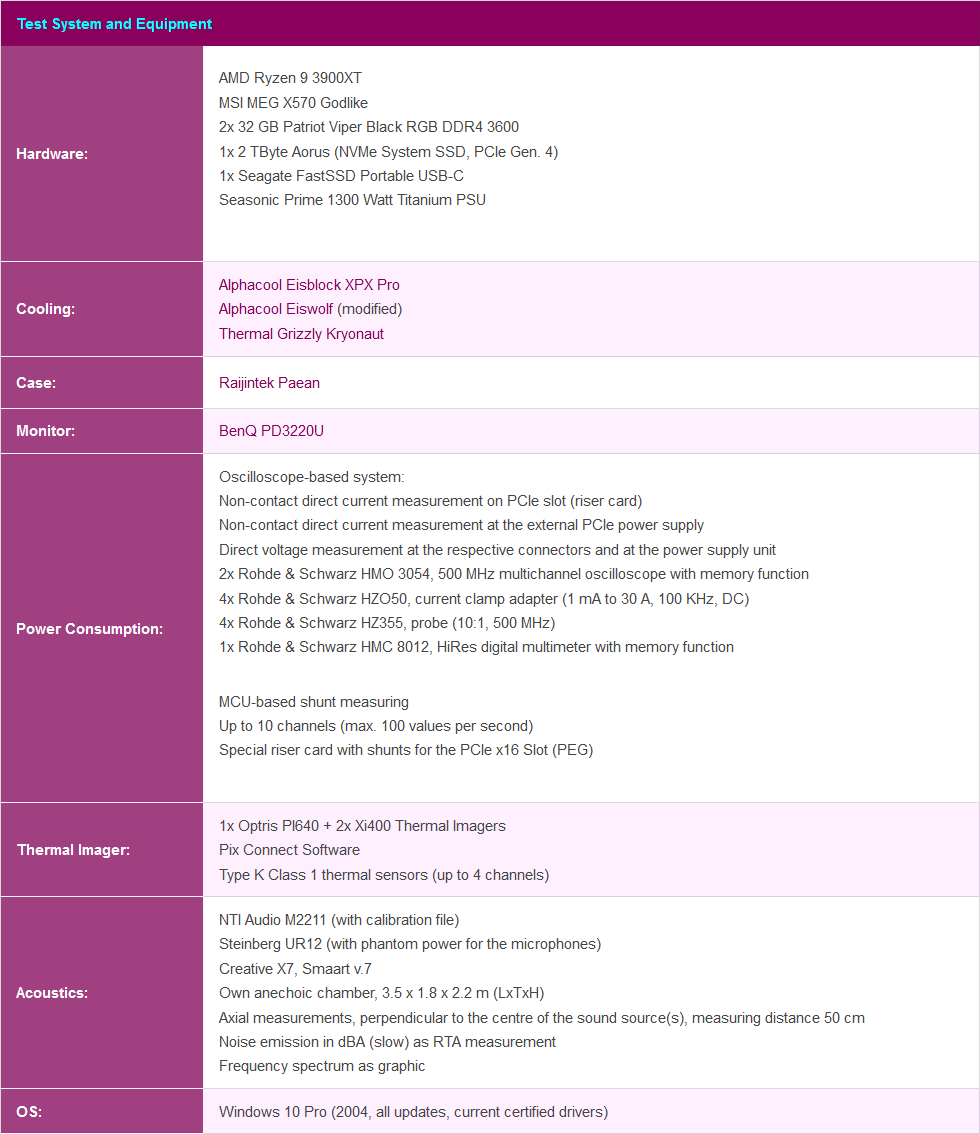

I’ll save the details for the special next week, because I’ll be able to present something different and exclusive at the same time. Today, everything revolves first of all around my measuring system, which I built a few weeks ago in parallel to the oscilloscope measuring station. Ultimately, the crux of the matter was to ensure accurate synchronization between the data sets measured via the shunts and the frame times recorded with our own PresentMon-based logging software. In the end, even this worked without a sluggish API, because I didn’t want logging the power consumption data to create additional system load on the bench table.
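What such a synchronization makes possible can be sketched as follows. This is not the actual logging software, only a minimal illustration that assumes both data sets already share one clock and that the power sample times are sorted ascending; all parameter names are hypothetical. Each frame is assigned the mean of the power samples that fall inside its time window.

```python
from bisect import bisect_left


def power_per_frame(frame_end_ms, frame_time_ms, sample_ms, sample_power_w):
    """Mean power per frame.

    frame_end_ms:   time at which each frame was presented
    frame_time_ms:  duration of each frame (same length as frame_end_ms)
    sample_ms:      ascending timestamps of the power samples
    sample_power_w: power reading for each sample
    Returns one value per frame, or None if no sample fell into the frame.
    """
    result = []
    for end, duration in zip(frame_end_ms, frame_time_ms):
        start = end - duration
        # Indices of samples with start <= t < end
        lo = bisect_left(sample_ms, start)
        hi = bisect_left(sample_ms, end)
        window = sample_power_w[lo:hi]
        result.append(sum(window) / len(window) if window else None)
    return result


if __name__ == "__main__":
    # Illustrative values only: one power sample per millisecond
    samples_t = [0, 1, 2, 3, 4, 5]
    samples_p = [100, 110, 120, 130, 140, 150]
    print(power_per_frame([2, 4], [2, 2], samples_t, samples_p))
```

With a result like this, frame time and power can be plotted on one axis, or correlated frame by frame.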

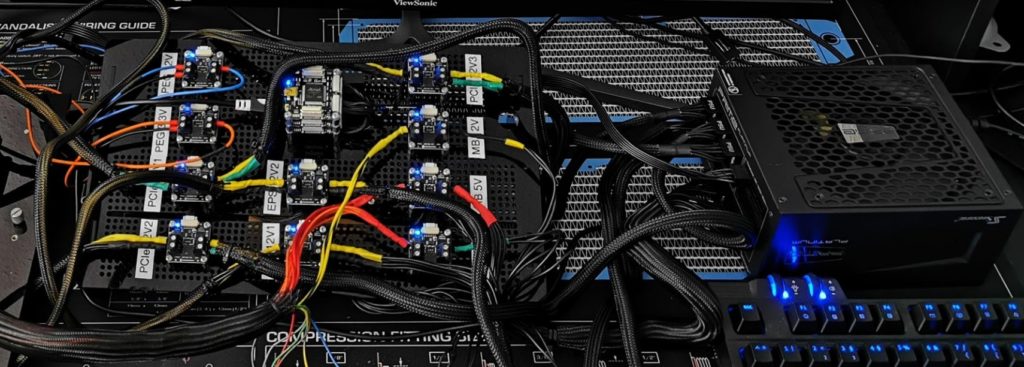

Of course, I measure not only the up to three 12-volt rails at the external supply sockets of the graphics card, but also the 12-volt and 3.3-volt rails at the motherboard slot (PEG). For this purpose there is a special riser card, which is of course already PCIe 4.0 compatible. It’s no secret that the new NVIDIA graphics cards will also support PCIe Gen 4.
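How the individual rails add up to the power consumption of the whole card can be shown with a short sketch. It assumes the usual shunt principle (current equals the voltage drop across the shunt divided by its resistance); the function names and the tuple layout are hypothetical, and the shunt values in the example are placeholders, not those of my setup.

```python
def rail_power(rail_voltage_v, shunt_drop_v, shunt_resistance_ohm):
    """Power of one rail in watts, from the rail voltage and the shunt drop."""
    if shunt_resistance_ohm <= 0:
        raise ValueError("shunt resistance must be positive")
    current_a = shunt_drop_v / shunt_resistance_ohm  # Ohm's law: I = U / R
    return rail_voltage_v * current_a                # P = U * I


def total_board_power(rails):
    """Sum over all rails; each rail is (voltage_v, shunt_drop_v, shunt_ohm)."""
    return sum(rail_power(*rail) for rail in rails)


if __name__ == "__main__":
    # Placeholder readings for illustration only
    rails = [
        (12.0, 0.010, 0.001),   # external 12 V socket 1
        (12.0, 0.008, 0.001),   # external 12 V socket 2
        (12.0, 0.004, 0.001),   # PEG slot, 12 V
        (3.3, 0.001, 0.001),    # PEG slot, 3.3 V
    ]
    print(round(total_board_power(rails), 1))
```

Evaluating this per sample, before any averaging, keeps short spikes on a single rail visible in the total.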

For maximum performance I switched from the Ryzen 9 3950X to the Ryzen 9 3900XT, which overclocked much better on the MSI MEG X570 Godlike. In the end, 4.5 GHz was the stable setting, because anything above that was simply not worth the additional electrical energy. The memory was a bit more of a challenge, but I left it at DDR4-3600 with slightly tighter timings, because I didn’t want to do without the 64 GB: I will also test workstation applications on this system, where 32 GB of memory is not enough.
