As we saw in the launch tests and with overclocking, the GeForce RTX 4060 8GB, with its non-functioning telemetry, actually consumes much more power than claimed. Instead of the maximum power consumption of 115 watts promised in the spec sheet and a typical gaming value of 110 watts, it drew a whopping 132 watts in some games, and the average was still far above what both the spec and the telemetry values stated.

That really bugged me, because the question of the actual performance with a real power limit of 115 watts was not just the elephant in the room, it was omnipresent. And that’s exactly why, to satisfy my curiosity and yours, I ran all the benchmarks again, at least in Full HD. However, I have excluded the power consumption of the 3.3-volt rail this time, since NVIDIA generally only includes the 12V rails.

The necessary percentages in Afterburner can’t really be calculated because of the broken telemetry, so I determined them by trial and error in games I know well. I allowed the most demanding game (Horizon Zero Dawn) 3 watts more so as not to slow down the more frugal games too much. If I had simply taken the game with the highest consumption and set the limit based on its value alone, the 115-watt card would have looked even worse. A setting of about 92 percent hits the 115-watt limit quite well. I tested a total of 10 games in Full HD, a balanced mix with and without DXR and with extreme and moderate power consumption.

The pitfalls of failed telemetry

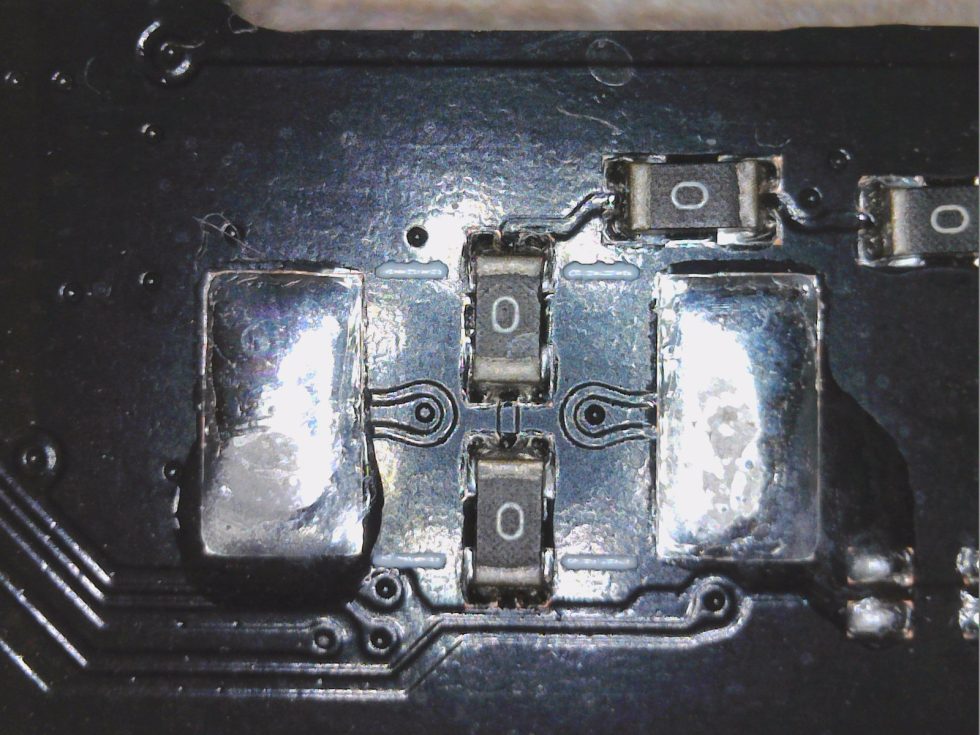

Before the benchmarks, I’d like to briefly summarize why the board layout and telemetry are such a technical bust. On the MSRP board (and not only there) we find a voltage converter design with a total of four phases. That could certainly be left as it is, but the efficiency of the voltage converters on all cards is not the best either, due to the lack of proper DrMOS. So a few watts are already lost before they ever reach the GPU. What is measured is always the power fed to the entire card.

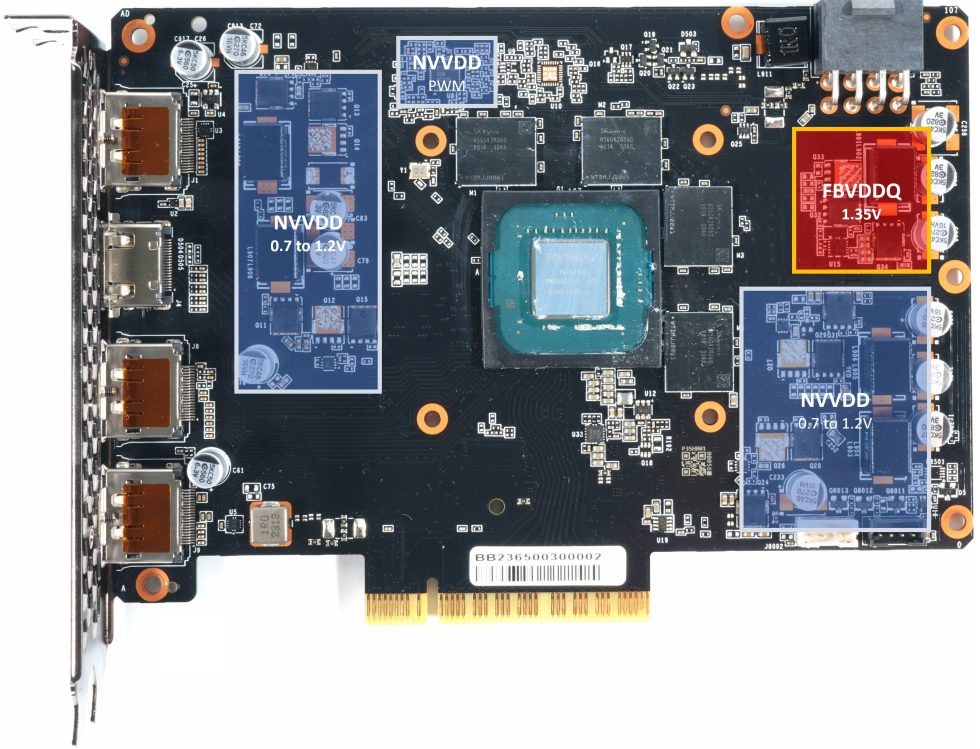

NVIDIA is actually known for permanently monitoring every 12V rail via shunts and the voltage drop that occurs across them. A suitable monitoring chip can thus determine the currents flowing, and the firmware can then throttle the power supply so that the maximum power target stored in the firmware is never exceeded. Interestingly, these shunts do not exist on any of the RTX 4060 boards, and a suitable monitoring chip is completely missing as well.
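To make the shunt principle tangible, here is a minimal sketch of how such monitoring works in general. It is not NVIDIA's firmware logic; the shunt resistance, the voltage drops and all function names are assumptions chosen purely for illustration.

```python
# Sketch of shunt-based rail monitoring using Ohm's law on a sense resistor.
# All numeric values are illustrative assumptions, not measurements.

def rail_current_a(v_drop_v: float, r_shunt_ohm: float) -> float:
    """Current through the shunt, derived from the voltage drop (I = V / R)."""
    return v_drop_v / r_shunt_ohm


def rail_power_w(v_rail_v: float, v_drop_v: float, r_shunt_ohm: float) -> float:
    """Power drawn on one rail: rail voltage times the measured current."""
    return v_rail_v * rail_current_a(v_drop_v, r_shunt_ohm)


def must_throttle(rail_powers_w: list[float], power_target_w: float) -> bool:
    """True if the sum of all monitored rails exceeds the power target."""
    return sum(rail_powers_w) > power_target_w


R_SHUNT_OHM = 0.005  # hypothetical 5 milliohm shunt

# Hypothetical voltage drops on the slot rail and the external 12V connector.
slot_w = rail_power_w(12.0, 0.015, R_SHUNT_OHM)  # about 3 A on the slot
aux_w = rail_power_w(12.0, 0.040, R_SHUNT_OHM)   # about 8 A on the connector
total_w = slot_w + aux_w

print(f"Total on 12V rails: {total_w:.1f} W")
print("Throttle needed at 115 W target:", must_throttle([slot_w, aux_w], 115.0))
```

Without shunts and a monitoring chip, the inputs to a check like `must_throttle` are simply not available as measured values, which is exactly the problem on these boards.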

NVIDIA declares the normal RTX 4060 to be a 115-watt card and even talks about a typical 110 watts when gaming. However, this is simply not true. Yes, the values that can be read via NVAPI dutifully stay at 115 watts and below, but unfortunately they are not even close to correct. While the software interface remains at 115 to 116 watts, I actually measure over 130 watts on the rails in 4 games! That’s 15 watts more than the maximum allowed and 20 watts above the typical gaming value that was communicated, which works out to over 18 percent more for gaming! Thus, the card is nowhere near as efficient as the interface suggests and the PR slides would like it to be.
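The arithmetic behind these figures can be checked in a few lines. This is just a small sketch using the numbers from the text, with 130 watts taken as a lower bound for "over 130 watts"; the variable and function names are my own.

```python
# Figures from the text; 130 W is used as a lower bound for "over 130 watts".
SPEC_MAX_W = 115.0        # maximum power per spec sheet
TYPICAL_GAMING_W = 110.0  # communicated typical gaming value
MEASURED_W = 130.0        # measured on the rails


def excess(measured_w: float, reference_w: float) -> tuple[float, float]:
    """Return the overshoot in watts and in percent of the reference."""
    diff_w = measured_w - reference_w
    return diff_w, 100.0 * diff_w / reference_w


over_spec_w, over_spec_pct = excess(MEASURED_W, SPEC_MAX_W)
over_typ_w, over_typ_pct = excess(MEASURED_W, TYPICAL_GAMING_W)

print(f"Above spec maximum:   {over_spec_w:.1f} W ({over_spec_pct:.1f} %)")
print(f"Above typical gaming: {over_typ_w:.1f} W ({over_typ_pct:.1f} %)")
# Above spec maximum:   15.0 W (13.0 %)
# Above typical gaming: 20.0 W (18.2 %)
```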

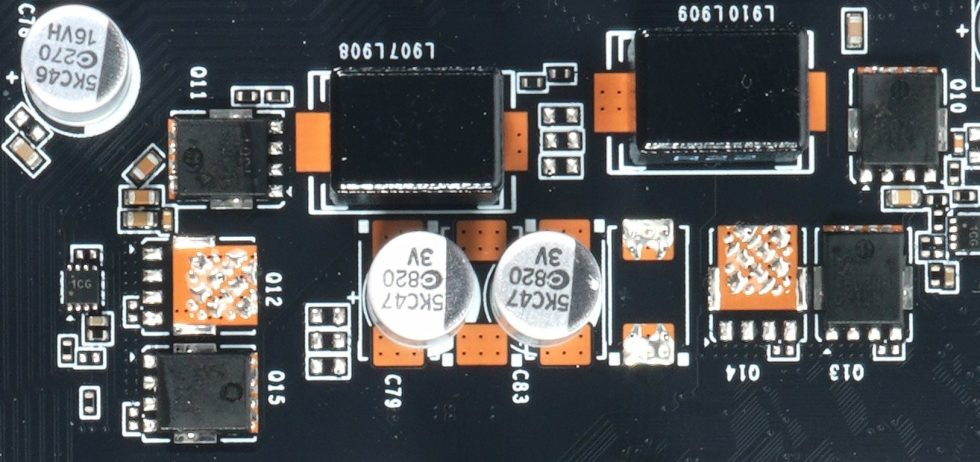

In order to control the individual control loops at all, a special procedure is needed. The keyword is DCR (Direct Current Resistance). Every component has very specific characteristics in this regard. In short: DCR is the basis for calculating or measuring temperatures and, above all, currents. But how does the controller find out exactly which currents flow in which control loop? The monitoring can differ because, unsurprisingly, there are different methods for it.

We have already analyzed that the voltage converters have been implemented at the technical level of 10 years ago. Intelligent Smart Power Stages (SPS), which measure the drain currents of the MOSFETs in real time for MOSFET DCR, are pure utopia here. Instead, the whole thing is replaced with the much cheaper Inductor DCR in a discrete circuit, i.e. a current measurement via the DC resistance of the respective filter coils in the output stage. However, the accuracy of this solution is significantly lower and is also strongly influenced by variations in component quality.
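Why Inductor DCR is so sensitive to component variation can be illustrated with a simple sketch. It assumes an idealized, uncompensated sensing circuit in which the controller divides the sensed voltage by the nominal DCR. The DCR value, the tolerance and the temperature are hypothetical, and the copper temperature coefficient of roughly 0.39 percent per kelvin is only an approximation.

```python
# Idealized inductor DCR current sensing without any compensation.
# The controller assumes the nominal DCR; the real coil deviates from it.
COPPER_TEMPCO_PER_K = 0.0039  # approximate temperature coefficient of copper


def actual_dcr_ohm(dcr_nominal_ohm: float, tolerance: float,
                   temp_c: float, ref_temp_c: float = 25.0) -> float:
    """Real winding resistance including part tolerance and heating."""
    temp_factor = 1.0 + COPPER_TEMPCO_PER_K * (temp_c - ref_temp_c)
    return dcr_nominal_ohm * (1.0 + tolerance) * temp_factor


def estimated_current_a(true_current_a: float, dcr_nominal_ohm: float,
                        tolerance: float, temp_c: float) -> float:
    """Current the controller computes from the sensed voltage drop."""
    v_sense = true_current_a * actual_dcr_ohm(dcr_nominal_ohm, tolerance, temp_c)
    return v_sense / dcr_nominal_ohm


# Hypothetical phase: 30 A true current, 0.5 milliohm nominal DCR,
# a coil that is 5 percent above nominal and running at 75 degrees Celsius.
estimate = estimated_current_a(30.0, 0.0005, 0.05, 75.0)
error_pct = 100.0 * (estimate / 30.0 - 1.0)
print(f"Estimated {estimate:.1f} A instead of 30.0 A ({error_pct:+.1f} %)")
```

Real controllers usually add temperature compensation, so the error in practice is smaller than in this deliberately naive model, but the dependence on the tolerance of each individual coil remains.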

Supposedly this can be fixed, or at least there is a wish to fix it, but I just wonder how. If you adjust the TBP to the specification and limit the card to a real 115 watts, you have to trick the NVAPI and then add an offset on top of the reported values again. Besides, the card would then be exactly as fast, or as slow, as today’s test sample, and such a downgrade can hardly be explained to customers. Or you really specify the card for 130 watts and then let the API deliver corrected values. Also embarrassing. Or you simply sit the problem out. With the current number of cards sold, this is probably the most likely variant.

- 1 - Introduction, test methodology and causes

- 2 - Anno 1800

- 3 - Cyberpunk 2077

- 4 - Far Cry 6

- 5 - Horizon Zero Dawn

- 6 - Marvel's Guardians of the Galaxy

- 7 - Metro Exodus EE

- 8 - Rainbow Six: Extraction

- 9 - Shadow of the Tomb Raider

- 10 - A Total War Saga: TROY

- 11 - Watch Dogs Legion

- 12 - Summary and Conclusion
