Nvidia actually has every reason to smile: with what Microsoft is releasing today as DXR 1.1 (DirectX Raytracing), at least this part of the feature set becomes a quasi-standard for PCs and consoles alike. None of this comes as a surprise, since Microsoft's DirectX Developer Blog already published a post titled "Dev Preview of New DirectX 12 Features" at the end of October last year. The key points back then were faster ray tracing and mesh shaders for more geometry at higher frame rates.

But what is DXR? DirectX Raytracing under Direct3D 12 is Microsoft's own implementation for bringing real-time ray tracing to games. The Insider Preview of the Windows 10 20H1 update already provided access to DXR Tier 1.1, mesh shaders, and changes to texture streaming and shading as early as October 2019. So none of this is new for AMD or Nvidia, especially since Nvidia, certainly not entirely unselfishly, worked quite closely with Microsoft during development.

The status quo is therefore quite clear (and probably for exactly this reason): so far, only Nvidia supports these features, with its Turing cards providing hardware acceleration through dedicated RT cores. Although the Pascal models could handle parts of DXR via a software fallback built on elaborate shader calculations, it is safe to assume that many upcoming features, up to further evolutions of DLSS, can no longer be covered by emulation.

According to Microsoft, DXR 1.1 should significantly increase ray tracing speed, and the blog post also explains how. The number of rays per pixel can now be chosen dynamically, and tracing and shading can be controlled much more flexibly. Forward renderers and compute workloads such as culling or physics are particularly likely to benefit. But let's get to the details.
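How a renderer actually decides how many rays each pixel gets is not described here. As a minimal sketch, assume a hypothetical per-pixel importance score (for example a noise estimate from the previous frame) and a fixed ray budget per frame. The function `allocate_rays` is our own illustrative name, not part of DXR; on real hardware this decision would be made on the GPU, and the Python below only models the bookkeeping.

```python
def allocate_rays(importance: list[float], budget: int, min_rays: int = 1) -> list[int]:
    """Distribute a per-frame ray budget across pixels.

    Every pixel receives at least `min_rays`; the remaining rays are split in
    proportion to `importance` (a hypothetical per-pixel score). Rounding
    leftovers go to the pixels with the largest fractional shares, so the
    result always sums exactly to `budget`.
    """
    n = len(importance)
    if budget < n * min_rays:
        raise ValueError("budget cannot cover the per-pixel minimum")
    spare = budget - n * min_rays
    total = float(sum(importance))
    if total > 0:
        weights = list(importance)
    else:  # no information at all: spread the spare rays evenly
        weights = [1.0] * n
        total = float(n)
    shares = [spare * w / total for w in weights]
    counts = [min_rays + int(s) for s in shares]
    leftover = budget - sum(counts)
    by_fraction = sorted(range(n), key=lambda i: shares[i] - int(shares[i]), reverse=True)
    for i in by_fraction[:leftover]:
        counts[i] += 1
    return counts


print(allocate_rays([0.0, 1.0, 3.0], budget=7))  # [1, 2, 4]
```

Calm pixels keep a single ray while noisy ones get more, without exceeding the frame's total budget.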

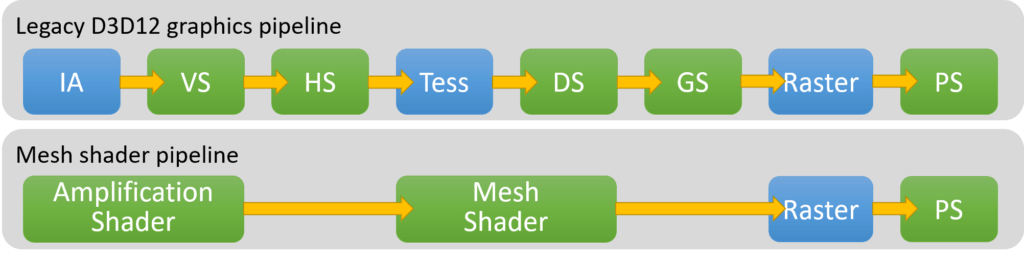

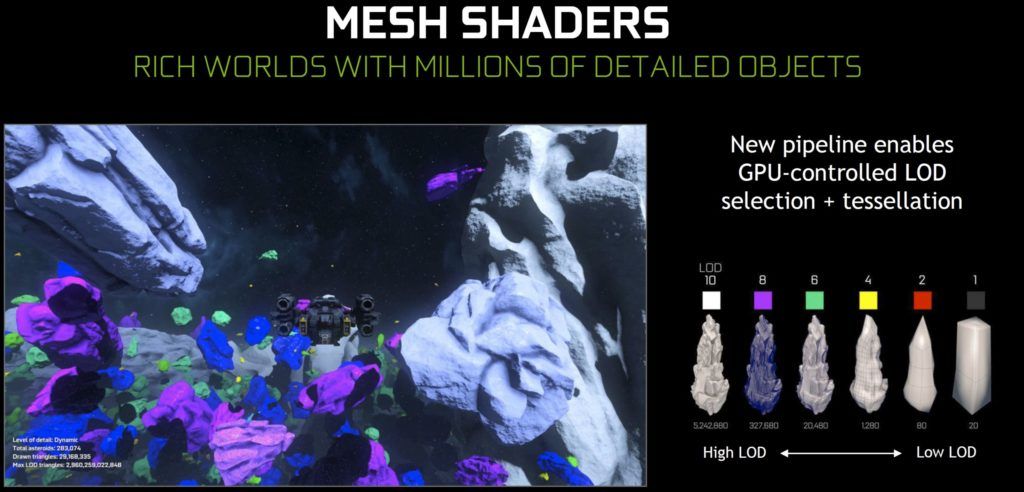

By the way, Nvidia already showed the mesh shaders mentioned above months ago. The scheme above shows how much the processing chain is shortened. In a scene with a large number of objects, for example, more elaborate geometry has so far been ruled out because the frame rate would no longer be fluid. The reason is the costly draw calls: the CPU simply cannot keep up and holds back the graphics card.
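To put the draw-call argument into numbers, here is a back-of-the-envelope model. The cost of 5 microseconds per draw call is purely an assumed figure for illustration, not a measurement; real costs depend on the API, the driver and the state changes involved.

```python
def cpu_submission_ms(draw_calls: int, us_per_call: float) -> float:
    """CPU time (ms) spent only on issuing draw calls, assuming a fixed cost per call."""
    return draw_calls * us_per_call / 1000.0


def cpu_limited_fps(draw_calls: int, us_per_call: float) -> float:
    """Upper bound on the frame rate if draw-call submission were the only CPU work."""
    return 1000.0 / cpu_submission_ms(draw_calls, us_per_call)


# Assumed 5 microseconds per draw call (illustrative only).
print(cpu_limited_fps(10_000, 5.0))  # one draw per object for 10,000 objects: 20.0
print(cpu_limited_fps(100, 5.0))     # same scene in 100 submissions: 2000.0
```

The exact values matter less than the linear scaling: the CPU cost grows with the number of submissions no matter how fast the GPU is, which is why moving per-object work onto the GPU pays off.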

The pipeline is therefore redesigned and given new stages. The amplification shader and mesh shader take over the functional chain of vertex and hull shader (VS, HS), tessellation (Tess), and domain and geometry shader (DS, GS). By the way, does anyone remember the launch of AMD's Vega and the fabled primitive shaders with their supposedly specialized, programmable array for exactly these calculations? Unfortunately, that feature was never activated, but it aimed in exactly the same direction! An optimized level of detail (LoD) and special lists should allow better culling of unneeded polygons and, along with it, more detailed tessellation, which of course still has to be verified in practice (and will be).
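What an amplification stage can decide before a single triangle is processed can be modelled in plain Python. This is a sketch under stated assumptions, not the HLSL mesh shader API: geometry is assumed to be pre-split into small "meshlets" with bounding spheres, the view frustum is given as planes, and the LoD is picked from distance using a hypothetical `base_distance`. Only meshlets that survive would be handed to the mesh stage.

```python
import math
from dataclasses import dataclass

# A plane (nx, ny, nz, d) with unit normal n; point p is inside if n . p + d >= 0.
Plane = tuple[float, float, float, float]


@dataclass
class Meshlet:
    center: tuple[float, float, float]  # bounding-sphere center
    radius: float                       # bounding-sphere radius


def sphere_in_frustum(m: Meshlet, planes: list[Plane]) -> bool:
    """Conservative test: cull only if the sphere lies fully outside some plane."""
    cx, cy, cz = m.center
    return all(nx * cx + ny * cy + nz * cz + d >= -m.radius for nx, ny, nz, d in planes)


def select_lod(distance: float, base_distance: float, max_lod: int) -> int:
    """One LoD step each time the distance doubles beyond base_distance."""
    ratio = max(1.0, distance / base_distance)
    return min(max_lod, int(math.log2(ratio)))


def amplification_pass(meshlets, planes, camera, base_distance=10.0, max_lod=4):
    """Return (meshlet index, LoD) for every meshlet passed on to the mesh stage."""
    survivors = []
    for i, m in enumerate(meshlets):
        if not sphere_in_frustum(m, planes):
            continue  # culled: none of this meshlet's triangles are processed
        dist = math.dist(camera, m.center)
        survivors.append((i, select_lod(dist, base_distance, max_lod)))
    return survivors
```

Culling whole meshlets this way is what frees the budget for more detailed geometry on the meshlets that remain visible.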

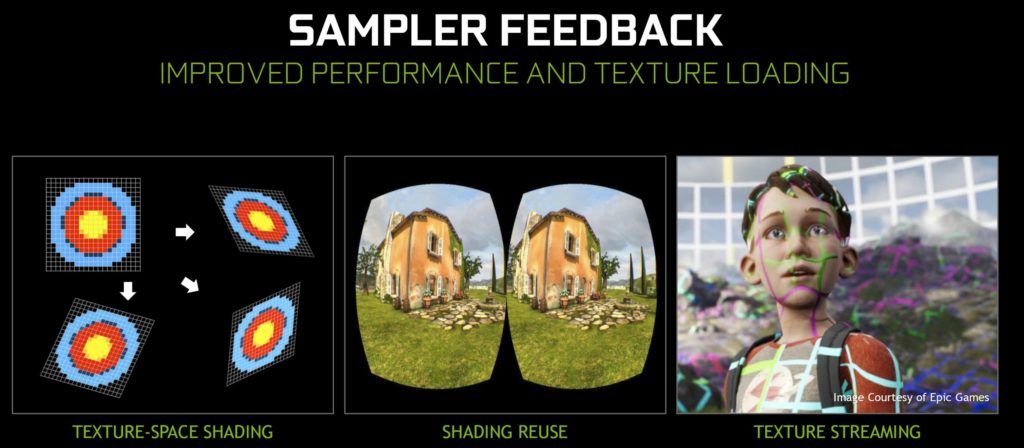

Sampler feedback is a hardware feature that records which areas of a texture were accessed during sampling operations. With it, games can generate a feedback map during rendering that records which parts of which MIP levels need to be resident in memory. This is especially useful in two scenarios: texture streaming and texture space shading.
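The feedback map itself is filled by hardware, and Direct3D 12 stores it in opaque formats. As a rough software model, loosely following the idea of a "MinMip" map, the sketch below assumes the texture is split into a grid of regions and that each sampling operation reports a normalized `(u, v)` coordinate plus the MIP level it wanted. The function name `record_feedback` is our own.

```python
def record_feedback(samples, regions_x: int, regions_y: int):
    """Build a feedback map from (u, v, mip) sample requests.

    The texture is divided into regions_x * regions_y regions. Each entry holds
    the most detailed (lowest-index) MIP level requested in that region, or
    None if the region was never sampled. u and v are assumed to be in [0, 1].
    """
    fb = [[None] * regions_x for _ in range(regions_y)]
    for u, v, mip in samples:
        rx = min(int(u * regions_x), regions_x - 1)  # clamp u == 1.0 into the last region
        ry = min(int(v * regions_y), regions_y - 1)
        current = fb[ry][rx]
        fb[ry][rx] = mip if current is None else min(current, mip)
    return fb


print(record_feedback([(0.1, 0.1, 2), (0.2, 0.2, 0), (0.9, 0.9, 3)], 2, 2))
# [[0, None], [None, 3]]
```

Regions marked `None` were never touched and need not be in memory at all.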

Many next-generation games share the same problem: whenever ever larger worlds with ever higher-quality textures are rendered, these games suffer from longer loading times, a larger memory footprint, or both. Developers then have to reduce the quality of their assets or load textures at run time. At Ultra HD resolution, the full MIP chain of a high-quality texture takes up a lot of space, so only the parts of the most detailed MIP levels that are actually needed should be loaded.
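The arithmetic behind this is simple: each MIP level has a quarter of the texels of the one above it, so the whole chain is about 4/3 of level 0, and level 0 alone accounts for roughly three quarters of it. The sketch assumes an uncompressed 4-byte-per-texel format for clarity; block-compressed formats such as BC7 (1 byte per texel) shrink the numbers but not the ratio.

```python
def mip_chain_bytes(width: int, height: int, bytes_per_texel: int) -> int:
    """Total size of a full MIP chain, halving each dimension down to 1x1."""
    total = 0
    w, h = width, height
    while True:
        total += w * h * bytes_per_texel
        if w == 1 and h == 1:
            return total
        w, h = max(1, w // 2), max(1, h // 2)


base = 16384 * 16384 * 4                 # level 0 alone, uncompressed RGBA8
full = mip_chain_bytes(16384, 16384, 4)
print(f"{base / 2**30:.2f} GiB for level 0, {full / 2**30:.2f} GiB for the chain")
# 1.00 GiB for level 0, 1.33 GiB for the chain
```

Since the most detailed level dominates, loading only the tiles of it that are actually visible is where the savings are.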

One solution to this problem is texture streaming, where sampler feedback greatly improves the accuracy with which the right data is loaded at the right time. The process is clearly structured: the scene is rendered and the desired texture tiles are first recorded using sampler feedback. If the tiles at the desired MIP levels are not yet resident, the current frame is rendered with a coarser MIP level and an I/O request is sent to the disk to load the desired tiles. Loading into the reserved resources then happens asynchronously.
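The per-frame decision described above can be sketched as follows. This is a simplified model under our own assumptions: textures are addressed by named regions, each region tracks only the most detailed resident MIP level, and the asynchronous loader is simulated by a FIFO queue that completes a few requests per call. `plan_frame` and `complete_io` are hypothetical names, not Direct3D 12 functions.

```python
from collections import deque

# Regions map to MIP indices, where 0 is the most detailed level.


def plan_frame(desired, resident, io_queue, in_flight):
    """Decide which MIP to sample per region this frame and queue missing data.

    desired:   region -> MIP level requested by sampler feedback
    resident:  region -> most detailed MIP level currently in memory
    io_queue:  FIFO of (region, mip) load requests for the async loader
    in_flight: set of requests already queued, to avoid duplicates
    """
    sample_mip = {}
    for region, want in desired.items():
        have = resident[region]
        # Never sample data that is not resident: fall back to the coarser level.
        sample_mip[region] = max(want, have)
        request = (region, want)
        if want < have and request not in in_flight:
            io_queue.append(request)
            in_flight.add(request)
    return sample_mip


def complete_io(resident, io_queue, in_flight, max_requests):
    """Simulate the asynchronous loader finishing up to max_requests loads."""
    for _ in range(min(max_requests, len(io_queue))):
        region, mip = io_queue.popleft()
        in_flight.discard((region, mip))
        resident[region] = min(resident[region], mip)


desired = {"rock": 0, "sky": 3}
resident = {"rock": 2, "sky": 2}
io_queue, in_flight = deque(), set()
print(plan_frame(desired, resident, io_queue, in_flight))  # {'rock': 2, 'sky': 3}
complete_io(resident, io_queue, in_flight, max_requests=4)
print(plan_frame(desired, resident, io_queue, in_flight))  # {'rock': 0, 'sky': 3}
```

The frame never stalls on I/O: it renders with what is resident, and the sharper data simply shows up in a later frame once the load has completed.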

Another interesting scenario is texture space shading, in which games dynamically compute intermediate shading values and store them in a texture, reducing both spatial and temporal rendering redundancy. Avoiding this redundant work is the main goal. The workflow is as follows: first, the geometry is drawn with simple shaders, using sampler feedback to determine which parts of a texture are needed at all. Only then are those parts of the texture filled. Finally, the geometry is drawn again, this time with the real shaders, which apply the texture data generated this way.
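The three passes can be modelled on the CPU to show where the savings come from. In this sketch, under our own assumptions, each pixel is reduced to a `(u, v)` coordinate, a plain set stands in for sampler feedback, and `shade` is a hypothetical stand-in for the expensive lighting shader. The point is that pixels sharing a texel trigger only one shading invocation.

```python
def texel_of(u: float, v: float, width: int, height: int) -> tuple[int, int]:
    """Map normalized (u, v) in [0, 1] to integer texel coordinates."""
    return min(int(u * width), width - 1), min(int(v * height), height - 1)


def texture_space_shading(pixel_uvs, width, height, shade):
    """Three-pass texture space shading over a list of per-pixel UVs.

    Returns the final per-pixel values and the number of shading invocations.
    """
    # Pass 1: cheap geometry pass that only records which texels are needed
    # (the role sampler feedback plays on the GPU).
    needed = {texel_of(u, v, width, height) for u, v in pixel_uvs}

    # Pass 2: shade every needed texel exactly once into a cache texture.
    shaded = {texel: shade(texel) for texel in needed}

    # Pass 3: draw the geometry again; pixels just look up the shaded texels.
    image = [shaded[texel_of(u, v, width, height)] for u, v in pixel_uvs]
    return image, len(shaded)


calls = []


def expensive_shade(texel):
    calls.append(texel)  # count how often the "expensive" shader runs
    return texel[0] + texel[1]


uvs = [(0.1, 0.1), (0.12, 0.1), (0.9, 0.9), (0.91, 0.92)]
image, count = texture_space_shading(uvs, 4, 4, expensive_shade)
print(image, count)  # [0, 0, 6, 6] 2
```

Four pixels cost only two shading invocations. If the `shaded` cache were kept across frames instead of rebuilt, the same structure would also address the temporal redundancy mentioned above.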

Of course, it will now also depend on game developers how extensively these new functions are implemented and used. To what extent current AMD cards and Nvidia's GeForce GTX models without RT and Tensor cores can still support which features through emulation is unclear and actually rather doubtful. Especially given AMD's current Navi cards with RDNA, it is probably more worthwhile to look at the consoles, where this would be possible with RDNA2.