What is spatial hearing?

However, faithful reproduction of the frequency spectrum is only one side of the coin. We must assess to the same extent how well individual sound sources can be resolved and located. This in turn describes the precision of the reproduction, in which spatial hearing plays a very important role.

Humans have two ears, with the head sitting between them as an acoustic barrier. But how do we actually hear spatially, locate acoustic events and assign them to a specific place? It all rests on two factors: runtime differences (when exactly the sound reaches each ear) and intensity differences (differences in sound pressure level).

One thing should not be overlooked, however: the ears and brain can only extract usable information about the location of a sound source from intensity and runtime differences if the sound changes in some way (sudden onset, spectrum, level, etc.). The background noise of a forest or a large city, for example, can hardly be resolved spatially when you are standing in the middle of it. The more abrupt or rapid a change, the better the sound source can be located.

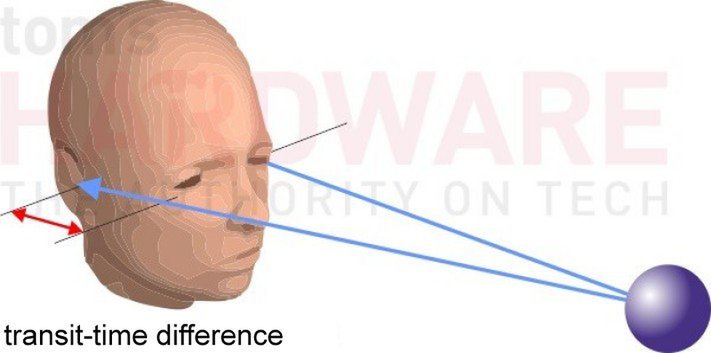

Runtime difference

The runtime difference is the difference in the time that the sound waves of an event need to reach each of the two ears. If the source is not directly in front (off-center by at least 3°), the sound reaches the nearer ear earlier than the other (see figure). The runtime difference therefore depends on the different distances the sound has to travel to each ear. Human hearing can perceive even tiny runtime differences of 10 to 30 microseconds!

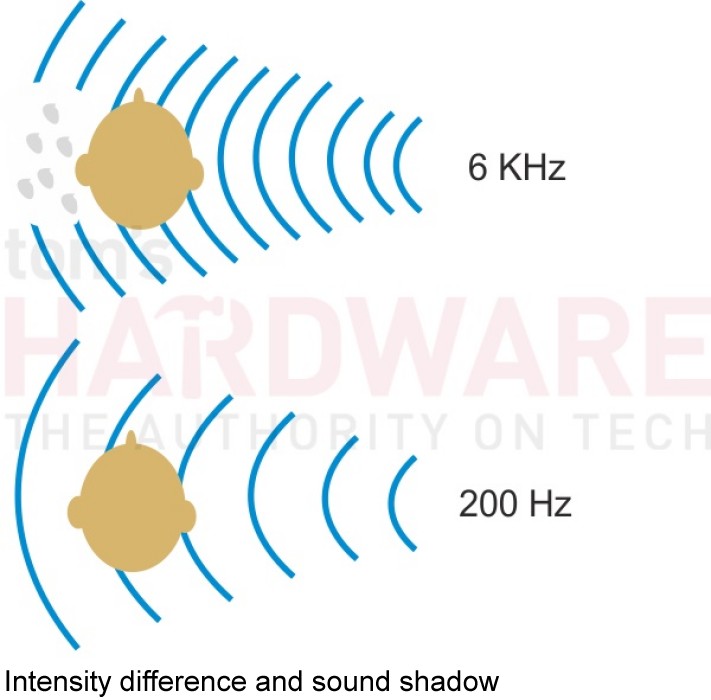
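To get a feeling for the orders of magnitude, here is a minimal sketch that estimates the runtime difference from the extra path to the far ear. It assumes a very simplified straight-line model (path difference = ear distance × sin of the angle) and ignores that sound has to bend around the head. The ear distance of 0.18 m, the speed of sound of 343 m/s and the function name `runtime_difference_s` are assumptions for illustration only, not values from the measurements in this article.

```python
import math

SPEED_OF_SOUND_M_S = 343.0   # assumption: air at roughly 20 °C
EAR_DISTANCE_M = 0.18        # assumption: typical distance between the ears


def runtime_difference_s(azimuth_deg, ear_distance_m=EAR_DISTANCE_M,
                         speed_m_s=SPEED_OF_SOUND_M_S):
    """Estimate the runtime difference for a source at the given angle.

    0 degrees means directly in front, 90 degrees means fully to one side.
    Simplified model: the far ear is ear_distance * sin(angle) further away.
    """
    path_difference_m = ear_distance_m * math.sin(math.radians(azimuth_deg))
    return path_difference_m / speed_m_s


if __name__ == "__main__":
    for angle in (0, 3, 30, 90):
        microseconds = runtime_difference_s(angle) * 1e6
        print(f"{angle:>2} degrees: {microseconds:6.1f} microseconds")
```

Under these assumptions, a source only 3° off-center already produces a runtime difference in the range of the 10 to 30 microseconds mentioned above, while a source fully to one side ends up at roughly half a millisecond.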

Intensity difference

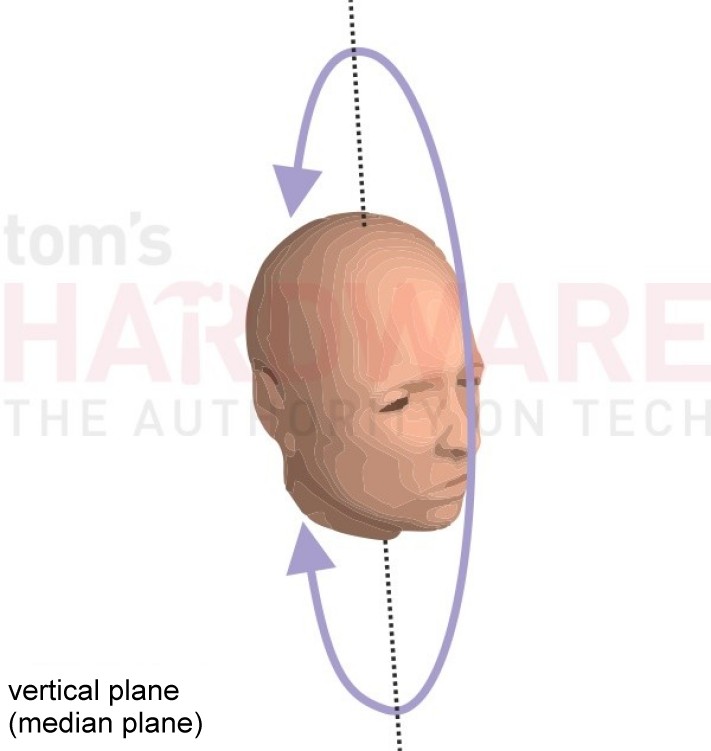

An intensity difference (level difference) occurs whenever the wavelength of the incoming sound is small enough compared to the head that the sound is reflected and the head becomes an obstacle. As the picture shows, a so-called sound shadow forms on the far side. This effect only sets in above about two kilohertz and grows with increasing frequency. For the longer wavelengths of lower tones, the head is no obstacle.
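Why the limit sits at roughly two kilohertz becomes clearer when you compare wavelengths with the size of a head. The following sketch simply computes wavelength = speed of sound / frequency. The head width of 0.18 m and the speed of sound of 343 m/s are assumed round values, and `wavelength_m` is just an illustrative helper.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumption: air at roughly 20 °C
HEAD_WIDTH_M = 0.18         # assumption: rough width of a human head


def wavelength_m(frequency_hz, speed_m_s=SPEED_OF_SOUND_M_S):
    """Return the wavelength in meters for a given frequency in hertz."""
    return speed_m_s / frequency_hz


if __name__ == "__main__":
    for frequency in (100, 500, 2000, 4000, 10000):
        length = wavelength_m(frequency)
        relation = "shorter" if length < HEAD_WIDTH_M else "longer"
        print(f"{frequency:>5} Hz: {length:6.3f} m ({relation} than the head)")
```

With these numbers, the wavelength at 2 kHz is about 17 cm and thus in the range of the head width, while a 100 Hz tone with well over three meters simply passes around it.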

Locating an acoustic event: localization

If an acoustic event occurs outside the head, for example when it is produced by loudspeakers, we speak of localization. By evaluating the information from both ears, the brain can pinpoint the origin of the event in space.

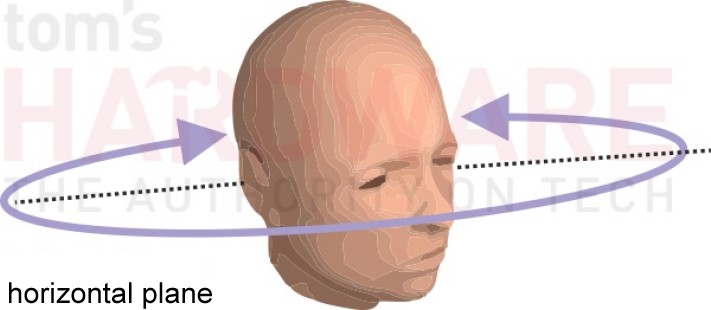

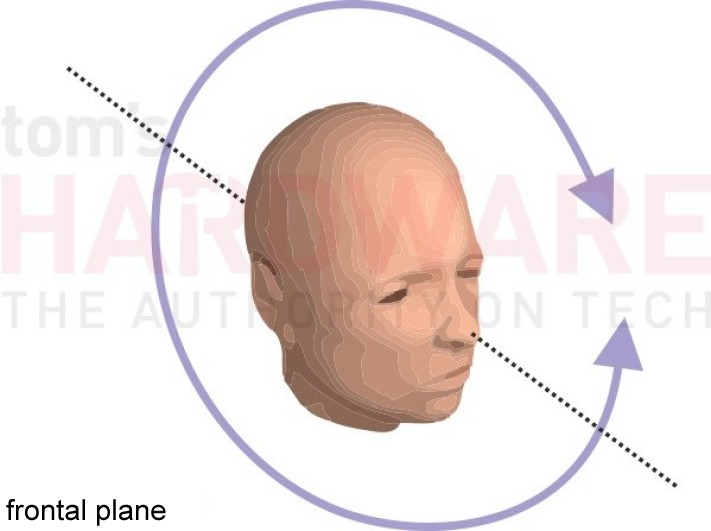

Incidentally, the head also moves unconsciously to localize precisely: turning, raising, lowering or tilting it allows localization along all three axes (X, Y and Z). In this case, and only then, can one speak of real 3D (three-dimensional) sound, which normal loudspeaker setups cannot produce because all the speakers sit at more or less the same height.

Special features of headphones

With headphones, however, the stimulus is always perceived directly inside the head! As soon as the sound waves from both sides of a pair of headphones are in sync, the sound source seems to sit in the middle of the head, that is, on the median plane.

Lateralization is the apparent movement of the sound source from the middle of the head toward one side. This headphone-typical “wandering” of a supposed sound source is again caused, as explained at the beginning, by a runtime difference (the signals are offset in time) or an intensity difference (a difference in volume).

No two ears are ever completely identical, so if the two ears differ in sensitivity, the sound lateralizes toward the better ear! An exact balance setting is therefore always the first step in optimizing the listening impression.
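Both mechanisms can be tried out on a mono signal. The following is a minimal sketch, not the method of any particular surround software: it delays and attenuates the right channel so that the perceived source should wander toward the left. The function `lateralize` and its parameters are hypothetical names chosen for this example, and samples are plain Python floats.

```python
def lateralize(mono, sample_rate, delay_s=0.0, level_diff_db=0.0):
    """Turn a mono signal into (left, right) with the image shifted left.

    delay_s:        runtime difference; the right channel starts later.
    level_diff_db:  intensity difference; the right channel is quieter.
    Both channels are padded to the same length.
    """
    delay_samples = round(delay_s * sample_rate)
    gain = 10 ** (-level_diff_db / 20)  # convert decibels to a linear factor

    # Left channel: unchanged, padded with silence at the end.
    left = list(mono) + [0.0] * delay_samples
    # Right channel: silence first (delay), then the attenuated signal.
    right = [0.0] * delay_samples + [gain * sample for sample in mono]
    return left, right


if __name__ == "__main__":
    click = [1.0, 0.5, 0.25]
    left, right = lateralize(click, sample_rate=48000,
                             delay_s=0.0005, level_diff_db=6.0)
    print(len(left), len(right))  # both channels have the same length
```

With a delay of zero and a level difference of zero, both channels are identical, which corresponds to the source sitting in the middle of the head. How far a given delay or level difference shifts the image is something each listener has to judge for themselves.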

Spatial sound with headphones – trick or voodoo?

But there is much more to lateralization! A shift in phase, i.e. in the moment at which a wave arrives at the ear, can also lead to apparent shifts in position. The auricle itself is of great importance for locating acoustic events; it acts as a sound collector and as a filter at the same time. Sound signals arriving at it are linearly distorted in different ways depending on the direction of incidence and the distance of the sound source.

Every person's auricles are unique, so in the end every person hears differently. The shape of the auricle alone influences how a sound wave is reflected on the outside and enters the ear (and travels on to the eardrum). By the way, even the hair on the auricle plays a role!

So what does all this have to do with headphones? Everything and nothing! Genuine three-dimensional hearing necessarily requires head movement on all axes, and that is simply not technically possible with binaural headphones fixed to the head!

Things such as telling apart positions on the Z axis (in front of or behind the head) or on the Y axis (below or above the head) simply cannot be reproduced by one-dimensional headphones!

Even if the software works with phase shifts, runtime and level manipulation as well as changes to the frequency spectrum (everything from behind simply sounds a bit deeper or duller), the result is still not real spatial sound, even though the brain helps out a little here: it draws on listening experience from real life and can (from time to time) be fooled.

What do multiple drivers per earcup bring?

Headphones with several drivers mounted at different angles can help with two-dimensional imaging: depending on the listener's perception and listening experience, sound that reaches the ears from different angles can come quite close to a “spatial sound” sensation.

The disadvantage of such systems often lies in the unpredictability of generating sound from several sources, because the tightly packed drivers affect each other negatively (phase shift, cancellation). Music can no longer be enjoyed with such a system, and it is also difficult to reproduce it linearly across the widest possible spectrum.

There are now some usable multi-driver headsets on the market that create such an illusion quite convincingly. Even then, how it is perceived is entirely subjective and never transferable to other people.

5.1 or 7.1 sound on headphones is therefore always a matter of imagination; it only works with the help of the brain and its own listening experience, and even then it is two-dimensional at most. Real 3D is not possible with loudspeakers either, because only the X and Z axes are ever reproduced.

And what is the real advantage?

Personally, I prefer very good stereo headphones that resolve in a differentiated and detailed way. What matters is not only the usable frequency range and its linearity, but also the system's ability to resolve the content of a single sound source cleanly and to keep doing so when several (or many) acoustic events mix or occur at the same time.

The separation of individual sources and their precise spatial placement within a large overall acoustic picture is often referred to in hi-fi waffle jargon as the “stage”, whose “width” should be as large as possible and reflects the good spatial imaging and resolution of a pair of headphones.

If all this is missing, it sounds muddy and undifferentiated. Tones and noises then merge into an acoustic mush, and the spatial representation collapses like a house of cards.

Can anyone really “hear” that?

A clear yes and no. All tests with recorded surround material were repeated several times with a total of six test subjects (three male, three female) between the ages of 16 and 50, using different headsets in random order. Only two of the test subjects were able to assign the sources without errors on the “real” surround headphones, three were at least partially correct, and one could only guess. With the virtual surround headsets, which have only one driver per earcup, only two people claimed to “perceive something” at all, and no one was really error-free.

The more complex and louder the carpet of noise, the higher the error rate! Incidentally, in the blind test none of the test subjects could tell whether they were wearing a headset with one driver or with up to three drivers (plus bass) per earcup. Interestingly, two people also credited the stereo reference headphones with a spatial effect from time to time. This in turn is further proof that everything happens in the brain alone and that the question cannot really be answered with a simple yes or no.

Ergo: imagination is also a kind of education. Before worrying about so-called “spatial sound” and heavily advertised Dolby certificates, headphones should first get the basics right: good resolution of individual acoustic events across the widest possible frequency spectrum, linear reproduction of that spectrum, transient behavior that is as unobtrusive as possible, and good stability at high levels. Then the skills come almost by themselves.

Very good 5.1 emulations can create spatial imaging as an illusion with the help of the brain, but they then usually fall short in fine resolution and clean reproduction in complex scenes with many simultaneous sound sources.

- 1 - Introduction and overview

- 2 - Everything about sound and frequencies

- 3 - Spatial hearing, surround and a lot of voodoo

- 4 - Sounding: marketing gag or skill booster?

- 5 - Human speech, animals, movements

- 6 - Typical combat and vehicle noises

- 7 - How we measure and judge

- 8 - Conclusion and summary
