It is well known that you can use tools such as MSI Afterburner to raise or lower the maximum power consumption of a graphics card (within its specifications). In addition, the 12V-2×6 connector (formerly 12VHPWR) also supports different power levels. But what are the differences between the two options, and what happens if you use both? The differences are greater than you might think, and the so-called transients (spikes) in particular differ considerably, even though the game's benchmark results are very similar. To investigate this in more detail, I am using an Asus GeForce RTX 4090 Strix, the card model that has also shown weaknesses in the flip header of its 12V-2×6 connector. However, my card was repaired by KrisFix and fitted with a better-quality header.
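The power levels of the 12V-2×6 connector are signalled via two sideband contacts, SENSE0 and SENSE1, each of which is either grounded or left open. The sketch below decodes the four combinations into the maximum sustained power commonly cited from the PCIe CEM / ATX 3.x tables. Treat the mapping as an assumption to verify against the specification, and the names `Sense`, `SIDEBAND_LIMITS_W` and `sideband_power_limit` as hypothetical.

```python
from enum import Enum


class Sense(Enum):
    GND = "ground"  # contact tied to ground
    OPEN = "open"   # contact left floating


# Assumed mapping (SENSE0, SENSE1) -> maximum sustained power in watts,
# as commonly cited for 12VHPWR / 12V-2x6; verify against the specification.
SIDEBAND_LIMITS_W = {
    (Sense.GND, Sense.GND): 600,
    (Sense.OPEN, Sense.GND): 450,
    (Sense.GND, Sense.OPEN): 300,
    (Sense.OPEN, Sense.OPEN): 150,
}


def sideband_power_limit(sense0: Sense, sense1: Sense) -> int:
    """Return the sustained power (W) signalled by a given sense-pin state."""
    return SIDEBAND_LIMITS_W[(sense0, sense1)]


print(sideband_power_limit(Sense.GND, Sense.GND))  # 600
```

The four-connector NVIDIA adapter used for these measurements is rated for 600 watts, which in this table would correspond to both contacts being grounded.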

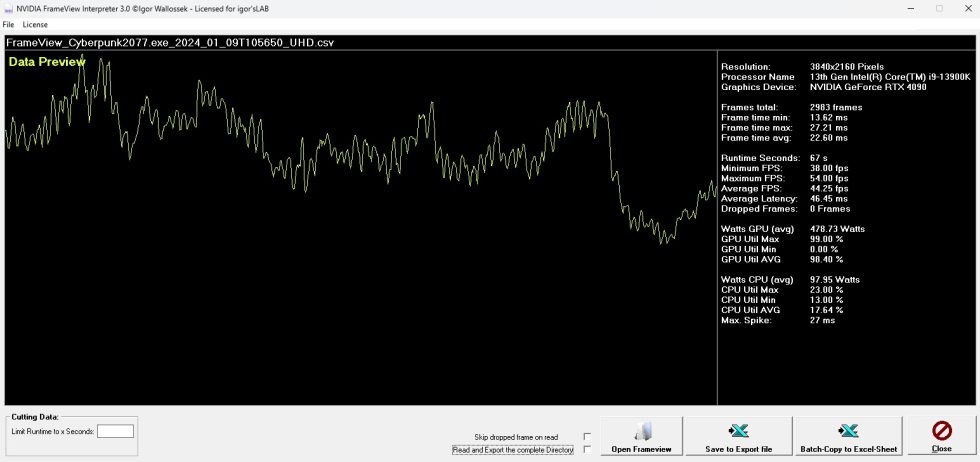

Cyberpunk 2077 in Ultra HD with ray tracing on Ultra is enough to load the card almost fully, at 99%. I'll leave the Tensor cores out of the equation. I deliberately did not use Mafia II in UHD (maximum settings), so as not to provoke the spikes too much: with it, I was even able to make a be quiet! Straight Power 11 Platinum 1000 W shut down reproducibly after just a few seconds, even though the Core i9-13900K was drawing just under 100 watts on average. This is because the CPU also produces a lot of transients, and when they add up with those of the graphics card, things get wild, regardless of which power supply port the graphics card is connected to, especially since the individual rails in the NVIDIA adapter are connected in parallel anyway. That was also the reason why I measured directly with this adapter. Well, I also had another reason, but more on that later.
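The effect described here can be illustrated with a toy model: the power supply only sees the sum of all loads at each instant, so a CPU spike that coincides with a GPU spike can cross a protection threshold that neither would reach alone. Every number below, including the 1,000 W trip point standing in for the PSU's over-power protection, is hypothetical and not taken from the measurements. Real protection circuits also take the duration of an overload into account, which this sketch ignores.

```python
# Hypothetical instantaneous power samples in watts (one value per sample tick).
gpu_w = [450, 470, 780, 460, 455, 790, 465]
cpu_w = [95, 100, 250, 90, 98, 120, 96]

# Hypothetical over-power trip point of the PSU in watts; real settings vary by model.
OPP_TRIP_W = 1000


def total_load(gpu, cpu):
    """The PSU sees the sum of both loads at each instant."""
    return [g + c for g, c in zip(gpu, cpu)]


def trip_samples(load, threshold):
    """Indices of samples where the combined load exceeds the trip threshold."""
    return [i for i, p in enumerate(load) if p > threshold]


load = total_load(gpu_w, cpu_w)
avg = sum(load) / len(load)
print(f"average {avg:.1f} W, peak {max(load)} W, trips at {trip_samples(load, OPP_TRIP_W)}")
```

Here the larger GPU spike at index 5 stays below the threshold, while the smaller one at index 2 trips it because the CPU spikes at the same moment, although the combined average is far below the PSU's rating.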

Out-of-the-box measurements with a 500-watt power limit

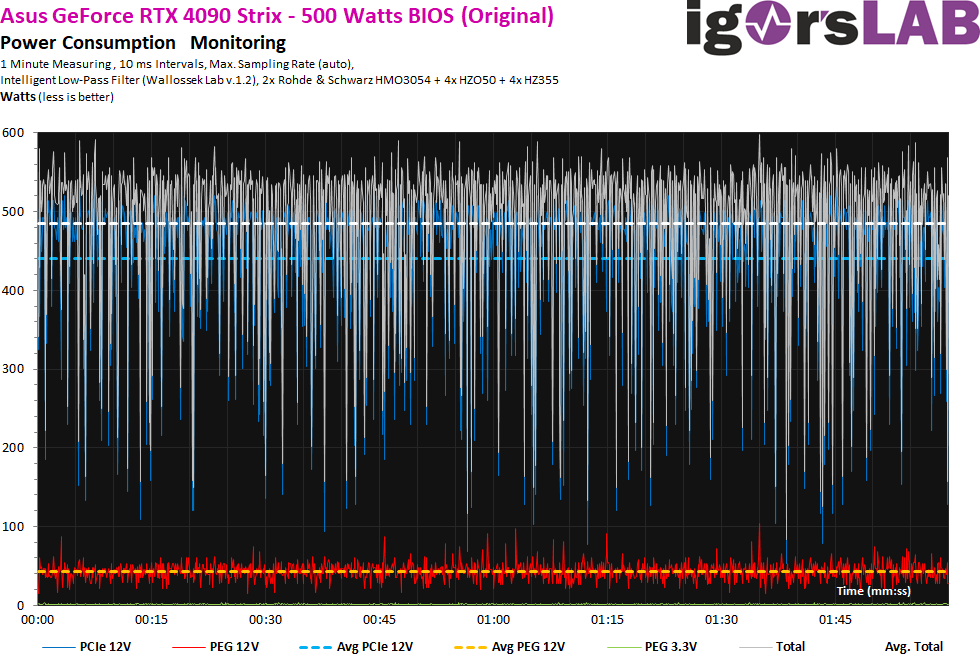

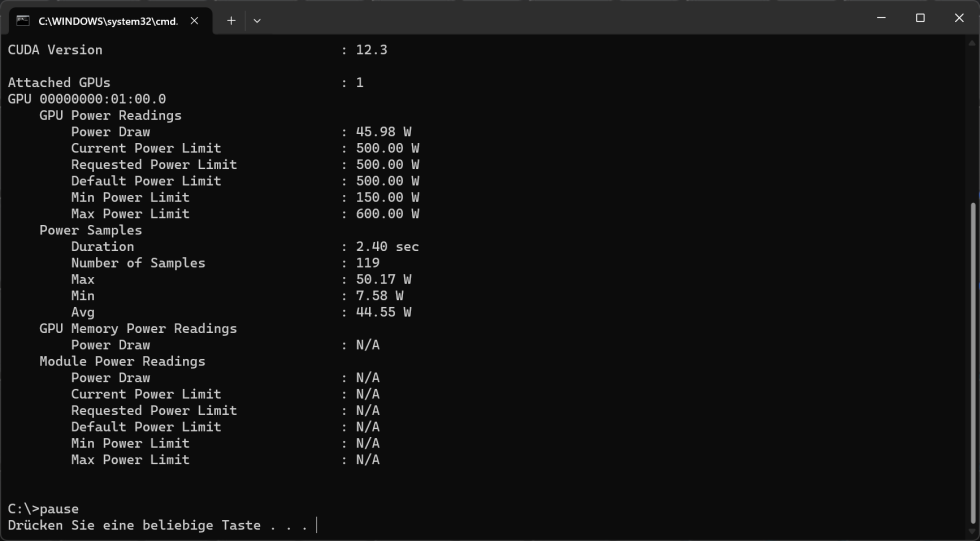

Let's start with the baseline, in which even NVIDIA's PCAT reported values accurate almost to the watt, as I was also able to confirm with the current clamps. The only difference is that the real story lies in a range that a normal shunt measurement cannot show. I'll come back to that in more detail in a moment. So first, the baseline: a card that can draw 500 watts straight out of the box, without any further OC, once the power limit is set to its maximum, supplied via NVIDIA's four-connector adapter (600 watts maximum).

Benchmarked with the card fully warmed up, the in-game benchmark produces quite reproducible results, which is extremely important nowadays, as it really comes down to nuances.
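Why a conventional shunt-based reading such as PCAT can match the average almost to the watt and still miss the spikes can be shown in a few lines: averaging a fast trace over longer windows preserves the mean but flattens short peaks. The trace and the window length of four samples are invented for illustration and do not reflect the actual sample rates of PCAT or of the current clamps used here.

```python
def block_average(samples, window):
    """Average consecutive, non-overlapping blocks of `window` samples (a slow meter)."""
    return [
        sum(samples[i:i + window]) / window
        for i in range(0, len(samples) - window + 1, window)
    ]


# Hypothetical fast trace in watts: a steady ~480 W load with two brief spikes.
fast = [480, 482, 590, 478, 480, 481, 479, 610, 480, 477, 482, 480]
slow = block_average(fast, window=4)

print(f"fast: mean {sum(fast) / len(fast):.1f} W, peak {max(fast)} W")
print(f"slow: mean {sum(slow) / len(slow):.1f} W, peak {max(slow)} W")
```

Both views report the same mean of about 499.9 W, but the slow one shows a peak of only 512.5 W instead of 610 W.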

The next graph shows a little over 480 watts in total across all three supply rails, with the load rising to 603.3 watts at the peaks. These are only short peaks, but they occur again and again. But where do they actually come from?
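Put into numbers using only the figures quoted above (taking the average as 480 W, the lower end of "a little over 480 watts"), the peaks sit about a quarter above the average, more than 100 W above the 500 W power limit, and even slightly above the 600 W rating of the adapter. The variable names are only illustrative.

```python
avg_w = 480.0      # average total power from the graph (a little over 480 W)
peak_w = 603.3     # highest short peak in the same measurement
limit_w = 500.0    # configured power limit of the card
adapter_w = 600.0  # maximum rating of the four-connector NVIDIA adapter

peak_to_avg = peak_w / avg_w
over_limit_w = peak_w - limit_w
over_adapter_w = peak_w - adapter_w

print(f"peak / average: {peak_to_avg:.3f}")                  # 1.257
print(f"peak above power limit: {over_limit_w:.1f} W")       # 103.3 W
print(f"peak above adapter rating: {over_adapter_w:.1f} W")  # 3.3 W
```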

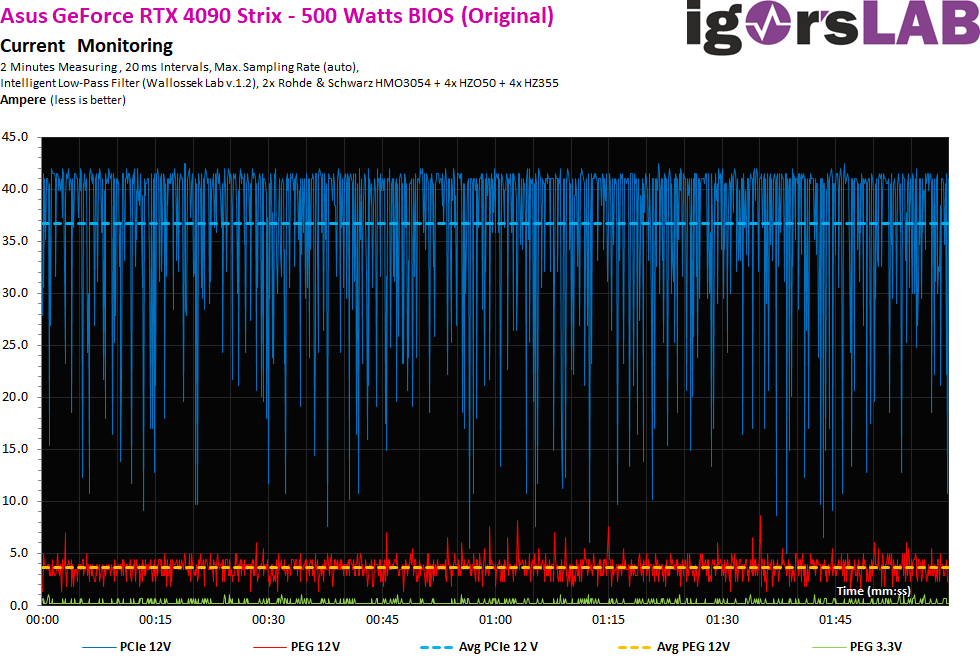

Looking at the currents, you can see that at almost 480 watts of real power consumption, the current across all rails is capped at around 42 amps, with only occasional spikes reaching around 43 amps. However, we also see the intermittent dips and the boost regulation, which are not caused by the graphics load alone. This is where the power limiter really comes into play.
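Converted at a flat 12 volts, the current ceiling corresponds roughly to the configured 500 W power limit, which fits the picture of the limiter capping the current. The fixed voltage is an idealisation, as the next paragraph shows; `nominal_power_w` is a hypothetical helper for this back-of-the-envelope check.

```python
NOMINAL_V = 12.0  # idealised rail voltage; the real voltage fluctuates around it


def nominal_power_w(current_a: float, volts: float = NOMINAL_V) -> float:
    """P = U * I at a fixed voltage."""
    return current_a * volts


for amps in (42.0, 43.0):
    print(f"{amps:.0f} A at {NOMINAL_V:.0f} V -> {nominal_power_w(amps):.0f} W")
# 42 A at 12 V -> 504 W
# 43 A at 12 V -> 516 W
```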

If you have done the math by now, you will have arrived at a power consumption of around 442 watts (an average of 36.8 amps at a flat 12 volts), which leaves a shortfall of just under 36 watts. These watts are hidden in the voltage fluctuations, where the power supply suddenly starts to overshoot slightly above its nominal voltage. This behavior can be measured in a similar form on all power supply units, and it is also what produces the actual spikes. And this is exactly where today's actual article begins. What happens if you set the power target either in software (NVAPI, MSI Afterburner or similar), via the circuit logic of the power supply (the sideband contacts of the 12V-2×6 connector), or even via both? This is exactly what we start with on the next page!
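The missing watts follow from how power has to be computed: the true average is the mean of the instantaneous products of voltage and current, not the average current multiplied by a flat 12 volts. A minimal sketch, assuming synchronously sampled voltage and current; the four samples are invented and only meant to show the direction of the error when the voltage overshoots exactly while the current is high.

```python
def mean(xs):
    """Arithmetic mean of a non-empty sequence."""
    return sum(xs) / len(xs)


def real_power_w(volts, amps):
    """Average of the instantaneous products u(t) * i(t) over synchronous samples."""
    return mean([u * i for u, i in zip(volts, amps)])


def flat_voltage_estimate_w(amps, nominal_v=12.0):
    """Shortcut estimate: average current times a flat nominal voltage."""
    return mean(amps) * nominal_v


# The shortcut applied to the measured average current quoted above.
print(f"{36.8 * 12.0:.1f} W")  # 441.6 W

# Hypothetical synchronous samples: the voltage overshoots exactly when the current spikes.
volts = [12.0, 12.0, 12.5, 12.0]
amps = [34.0, 34.0, 42.0, 34.0]

print(flat_voltage_estimate_w(amps))  # 432.0  (flat 12 V)
print(mean(volts) * mean(amps))       # 436.5  (averages multiplied)
print(real_power_w(volts, amps))      # 437.25 (true mean power)
```

The flat-12-V shortcut underestimates the true mean power, and even multiplying the two averages misses part of it, because the overshoot coincides with the current peak.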

Read all comments in the igor'sLAB Community →