After the launch is before the launch, so Igor has an as-yet-unspecified article ready for later today. Yesterday, however, he already picked a deep red blossom in AMD’s blog garden, which I gratefully took over this morning to fill the gap. Although NVIDIA’s GeForce RTX 4070 has not been officially launched yet, numerous rumors and leaks about it are already circulating in the tech community. In particular, it is expected to feature 12 GB of GDDR6X VRAM, which should be a significant improvement over previous models given the performance demands of modern games and applications. However, some of the latest games have significantly increased memory requirements, especially at higher resolutions like Ultra HD. Whether you consider that a good thing, or even the new trend, is for everyone to decide for themselves.

Therefore, it is possible that the rumored 12 GB of VRAM will not be enough to ensure optimal performance. This called AMD, NVIDIA’s biggest competitor in the graphics card market, onto the scene at the eleventh hour, and it is using the opportunity to promote its own products. In fact, AMD already has graphics cards in its portfolio, starting with the Radeon RX 6000 series, that are equipped with up to 16 GB of GDDR6 VRAM. This is a significant increase over previous generations. In light of this, AMD has decided to promote these products a bit more aggressively and to annoy NVIDIA by highlighting the more generous VRAM amounts of its Radeon RX series.

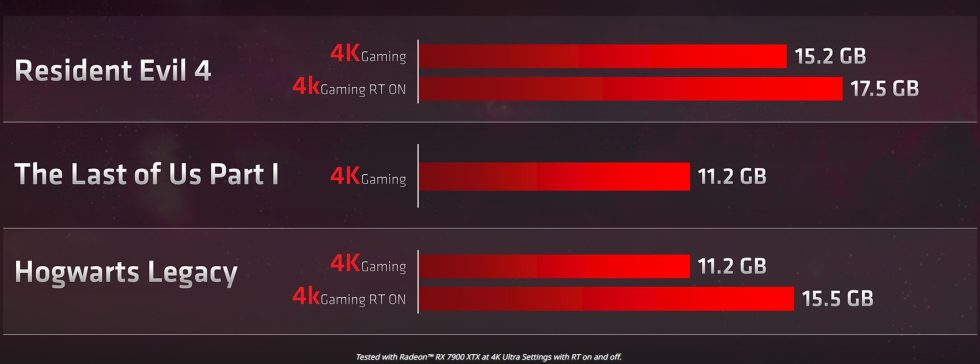

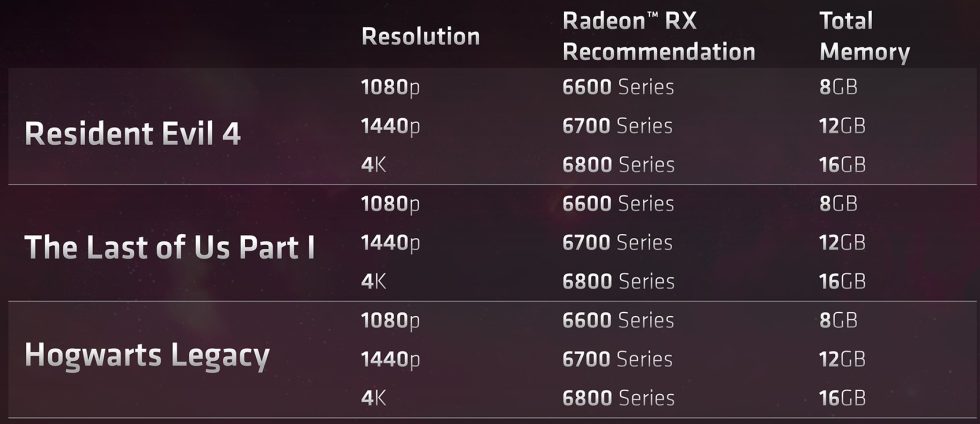

In a blog post titled “Are YOU an Enthusiast?”, AMD’s product marketing manager Matthew Hummel emphasizes the extreme amounts of VRAM needed for top specs in Ultra HD gaming. Hummel specifically mentions a few hand-picked games like the Resident Evil 4 remake, the recent port of The Last of Us Part I, and Hogwarts Legacy.

According to Hummel, for each of these games, AMD recommends using the 6600 series with 8 GB of VRAM at 1080p resolution, the 6700 series with 12 GB of VRAM at 1440p, and the 6800/69xx series with 16 GB of VRAM at 4K (Ultra HD, to be correct). This even ignores the RX 7900 XT with 20 GB of VRAM and the RX 7900 XTX with 24 GB of VRAM. However, you have to ask those who play The Last of Us Part I, for example, how far they really get with the recommended Radeon RX 6800.

Igor asked himself the same question and, just for fun, tested a Radeon RX 6800 XT (the faster variant) directly in the game, in between all the benchmarks for a certain other card. The 16 GB of VRAM was definitely not the limiting factor, but the barely achieved 40 FPS (25 FPS in the P1 Low) is really nothing to show off on a slide. Even an old RTX 3080 10GB with its measly memory configuration was a tad faster on average, but it collapsed completely in the P1 Low because its memory was full. To AMD’s credit, it should also be noted that Igor only managed 46 FPS even with an RTX 4070 Ti, which is somewhat smooth, but not really playable.
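For readers who want to see how an average and a P1 Low can drift so far apart, here is a minimal sketch. It assumes that P1 Low means the frame rate corresponding to the 99th-percentile frame time (nearest-rank method); other tools define this metric differently, and the function name `fps_summary` and the sample frame times are purely illustrative, not measured data.

```python
def fps_summary(frame_times_ms, percentile=99):
    """Return (average FPS, percentile-low FPS) for frame times given in ms."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    n = len(frame_times_ms)
    # Average FPS: frames rendered divided by total elapsed seconds.
    avg_fps = n / (sum(frame_times_ms) / 1000.0)
    # Nearest-rank percentile on the sorted frame times (integer ceiling).
    rank = (percentile * n + 99) // 100
    slow_frame_ms = sorted(frame_times_ms)[rank - 1]
    return avg_fps, 1000.0 / slow_frame_ms


# Made-up run: 196 frames at 20 ms plus 4 stutter frames at 40 ms.
sample = [20.0] * 196 + [40.0] * 4
avg, p1_low = fps_summary(sample)
print(f"average: {avg:.1f} FPS, P1 Low: {p1_low:.1f} FPS")
# prints: average: 49.0 FPS, P1 Low: 25.0 FPS
```

Only four slow frames out of 200 barely move the average, yet they halve the P1 Low, which is why a card with overflowing memory can look fine on average and still stutter.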

And what does AMD want to tell us with this? You need more than 12 GB of VRAM to really play in Ultra HD, especially with ray tracing! Well, now we know officially. Of course, slides like these are always a double-edged thing, because it is doubtful that the upcoming GeForce RTX 4070 is meant to (or will) serve the target group of those who really want to play in Ultra HD. And AMD and ray tracing are currently a combination where you always have to be careful with slides. At least the repeated statement that GPUs with 16 GB of VRAM are available for only 499 USD fits AMD’s own PR scheme. How fast the cards really are in Ultra HD, especially when DXR is active, well… But in principle, AMD is right to poke fun at NVIDIA again and again for its stingy memory configurations.

AMD has performed its own benchmark comparisons with NVIDIA cards to make this point. Different Radeon GPUs were compared against GeForce GPUs. The RX 6800 XT competed against NVIDIA’s RTX 3070 Ti, the RX 6950 XT against the RTX 3080, the RX 7900 XT against the RTX 4070 Ti and the RX 7900 XTX against the RTX 4080. A total of 32 “selected games” in Ultra HD resolution were used. In the benchmarks published by AMD, the Radeon GPUs won in the majority of cases.

However, it is important to note that these benchmarks were performed by AMD itself and therefore might not be completely independent. The results should therefore (as always) be taken with a grain of salt, especially since none of the benchmarks show the true FPS numbers (including the P1 Low), nor do we find out which of the bars were even within the playable range. I have packed the original screenshots from the AMD homepage (AMD has unfortunately protected the pictures from simple saving) into a gallery for you to browse through:

AMD’s test notes can be found in full in the blog entry, but I’ve picked out the most important ones for each benchmark for you:

Slide 1 – Testing done by AMD performance labs March 5, 2023, on an AMD Radeon RX 6800 XT graphics card (Driver 23.2.1) paired with AMD Ryzen 9 7900X processor, AM5 motherboard, 32GB DDR5-6000MT memory, and Windows 11 Pro vs. a similarly configured test system with GeForce RTX 3070 Ti graphics card (Driver 528.24) across 32 games at max settings, in 4K resolution. System manufacturers may vary configurations yielding different results. Performance-per-dollar is calculated by taking the average scores of the games above divided by the lowest available price on Newegg (USD) as of April 3, 2023. RX-897.

Slide 2 – Testing done by AMD performance labs March 5, 2023, on an AMD Radeon RX 6950 XT graphics card (Driver 23.2.1) paired with AMD Ryzen 9 7900X processor, AM5 motherboard, 32GB DDR5-6000MT memory, and Windows 11 Pro vs. a similarly configured test system with NVIDIA GeForce RTX 3080 graphics card (Driver 528.24) across 32 select games at max settings, in 4K resolution. System manufacturers may vary configurations yielding different results. Performance per Dollar calculated by taking the average scores of the 32 titles, divided by the lowest available price on Newegg (USD) as of April 3, 2023. RX-896.

Slide 3 – Testing done by AMD performance labs March 2023, on an AMD Radeon RX 7900 XT graphics card (Driver 23.2.1) paired with AMD Ryzen 9 7900X processor, AM5 motherboard, 32GB DDR5-6000MT memory, and Windows 11 Pro vs. a similarly configured test system with NVIDIA GeForce RTX 4070 Ti graphics card (Driver 528.24) across 32 select games at max settings, in 4K resolution. System manufacturers may vary configurations yielding different results. Performance per Dollar calculated by taking the average scores of the 32 titles, divided by the lowest available price on Newegg (USD) as of April 3, 2023. RX-915.

Slide 4 – Testing done by AMD performance labs March, 2023, on an AMD Radeon RX 7900 XTX graphics card (Driver 23.2.2) paired with AMD Ryzen 9 7900X processor, AM5 motherboard, 32GB DDR5-6000MT memory, and Windows 11 Pro vs. a similarly configured test system with GeForce RTX 4080 graphics card (Driver 528.24) across 32 games at max settings, in 4K resolution. System manufacturers may vary configurations yielding different results. Performance per dollar is calculated by taking the average scores of the same 32 games, divided by the lowest available SEP on Newegg (USD), as of April 3, 2023. RX-927
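The performance-per-dollar figure described in these notes can be sketched in a few lines. This is only an illustration of the stated recipe (average score divided by the lowest price); it assumes an arithmetic mean, which the notes do not specify, and the scores and prices below are invented placeholders, not AMD’s or Newegg’s numbers.

```python
from statistics import mean


def performance_per_dollar(scores, lowest_price_usd):
    """Average benchmark score divided by the lowest available price in USD."""
    if lowest_price_usd <= 0:
        raise ValueError("price must be positive")
    # Assumption: arithmetic mean; the test notes only say "average".
    return mean(scores) / lowest_price_usd


# Placeholder FPS scores and prices for two hypothetical cards.
card_a = performance_per_dollar([60.0, 45.0, 75.0], 600.0)  # mean 60 FPS
card_b = performance_per_dollar([66.0, 50.0, 82.0], 825.0)  # mean 66 FPS

print(f"card A: {card_a:.3f} FPS/USD, card B: {card_b:.3f} FPS/USD")
print(f"card A advantage: {card_a / card_b:.2f}x")
```

In this made-up case the slower card wins the metric by 25 percent purely on price, which is why such a ratio says nothing about whether either card reaches a playable frame rate.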

In the so-called test notes, as is the case with any marketing article focused on benchmarks, there are some interesting notes on different CPUs, motherboards (some with modified overall performance), RAM speeds and more, depending on the requirements. Although most of the gaming tests were performed with the same specifications, some specifications vary and older test data was also used. The games used in this GPU showdown are not always the same, nor does it appear that FSR or DLSS were used in these tests.

So, until the launch of the GeForce RTX 4070, it is impossible to know for sure how it will compare to these GPUs. However, AMD emphasizes that its graphics cards are more generously equipped with VRAM, although no distinction is made between AMD’s GDDR6 and NVIDIA’s GDDR6X. By the way, it’s also worth mentioning that the possible VRAM capacities are directly tied to the width of the memory bus, or rather the number of memory channels used.

For example, AMD’s Navi 22 (RX 6700 XT and RX 6750 XT) gets by with a 192-bit interface and 6 channels. Similarly, NVIDIA’s AD104 (RTX 4070 Ti and the upcoming RTX 4070) uses a 192-bit bus. With 2 GB chips, NVIDIA can thus only offer up to 12 GB of memory capacity. Doubling this to 24 GB would be possible, but would require “clamshell” mode, where memory chips are mounted on both sides of the board. That, in turn, would simply be too expensive and complex (keyword: cooling).
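The arithmetic behind this can be written down as a small sketch. It assumes one memory chip per 32-bit slice of the bus, which matches the 6 channels on a 192-bit interface mentioned above; the function name `vram_capacity_gb` is made up for illustration.

```python
BITS_PER_CHIP = 32  # assumption: one GDDR6/GDDR6X chip per 32-bit slice of the bus


def vram_capacity_gb(bus_width_bits, chip_capacity_gb=2, clamshell=False):
    """Total VRAM in GB for a given bus width and per-chip capacity."""
    if bus_width_bits % BITS_PER_CHIP:
        raise ValueError("bus width must be a multiple of 32 bits")
    chips = bus_width_bits // BITS_PER_CHIP
    if clamshell:
        # Clamshell: two chips share each 32-bit slice, one per board side.
        chips *= 2
    return chips * chip_capacity_gb


print(vram_capacity_gb(192))                  # 6 chips x 2 GB = 12
print(vram_capacity_gb(192, clamshell=True))  # 12 chips x 2 GB = 24
print(vram_capacity_gb(256))                  # 8 chips x 2 GB = 16
```

With 2 GB chips, the only steps available on a 192-bit bus are therefore 12 GB or 24 GB; a 16 GB card needs a 256-bit bus instead.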

The question remains what these teasers might say about the upcoming, smaller RDNA3 models. The RX 7900 XT with 20 GB of memory at a price of $800 to $900 is quite a statement. And what about the rumored and hotly anticipated RX 7800 XT? Reducing the memory to 16 GB would fit perfectly into the pattern AMD is pushing with these slides. But technically, AMD could also go even lower and offer only 12 GB.

But despite all the gloating or even malice, you also have to keep one thing in mind: there is much more to a graphics card than pure memory capacity. Defining architecture features such as cache size also play an important role. AMD’s RX 6xxx series did offer a generous 16 GB on the top four models (RX 6950 XT, RX 6900 XT, RX 6800 XT, and RX 6800), but the competing RTX 30xx series could generally keep up easily in rasterized games, especially since it offered superior ray tracing hardware and improvements like DLSS. Ergo: it depends on the overall product, and we can be curious about what else is to come. Unfortunately, it probably won’t get any cheaper.

Neither AMD nor NVIDIA is to blame for the market, and yet the mood among buyers remains tense. There is really no better way to put it than the picture Igor took on Easter Sunday during a short walk. The whole structure is permanently disturbed.

Source: AMD
