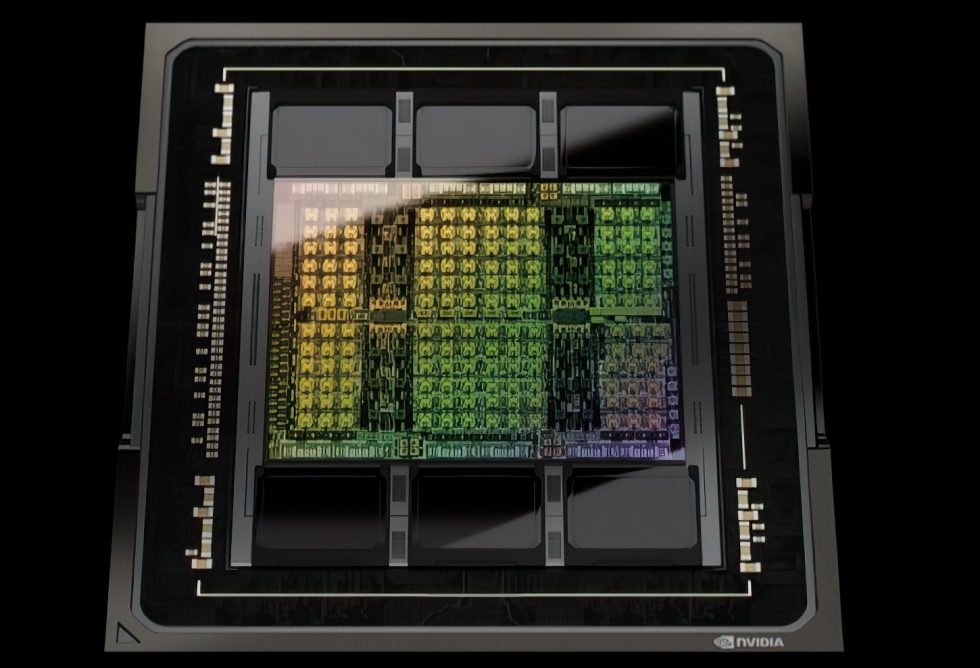

The NVIDIA H100 NVL is a high-performance accelerator card designed for demanding machine learning and artificial intelligence workloads, where large amounts of data have to be moved and processed quickly. The Hopper-based PCIe product pairs two GPUs over NVLink, which enables fast communication between them, and provides 94 GB of HBM3 memory per GPU, or 188 GB across the pair.

Particularly noteworthy is the positioning of the card for running language models with up to 175 billion parameters, the size of OpenAI's GPT-3, on which ChatGPT builds. This makes the NVIDIA H100 NVL well suited for computationally intensive inference workloads in artificial intelligence and machine learning.
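To put the 175 billion parameter figure in relation to the memory capacity, here is a minimal back-of-the-envelope sketch. It only counts the model weights and ignores activations, caches, and framework overhead. The bytes-per-parameter values and the helper names are assumptions made for illustration, not figures from NVIDIA.

```python
# Rough estimate of the memory needed just to hold model weights.
# Assumption: 4, 2 and 1 bytes per parameter for FP32, FP16 and FP8 storage.
PARAMS = 175e9             # parameter count quoted in the article
MEMORY_PER_GPU_GB = 94     # per-GPU memory quoted in the article
GPUS_IN_PAIR = 2           # two GPUs linked over NVLink

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}


def weight_memory_gb(params: float, precision: str) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params * BYTES_PER_PARAM[precision] / 1e9


def weights_fit(params: float, precision: str, capacity_gb: float) -> bool:
    """True if the weights alone fit into the given capacity."""
    return weight_memory_gb(params, precision) <= capacity_gb


if __name__ == "__main__":
    capacity = MEMORY_PER_GPU_GB * GPUS_IN_PAIR
    for precision in BYTES_PER_PARAM:
        needed = weight_memory_gb(PARAMS, precision)
        verdict = "fits" if weights_fit(PARAMS, precision, capacity) else "does not fit"
        print(f"{precision}: {needed:.0f} GB of weights, {verdict} in {capacity} GB")
```

Under these assumptions, only the 8-bit case leaves the weights within the combined capacity of the pair; real deployments need additional headroom beyond the weights.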

Compared to the H100 SXM5 configuration, the H100 PCIe configuration has lower specifications. Of the 144 streaming multiprocessors (SMs) on a full GH100 GPU, 132 are active in the H100 SXM5 configuration and only 114 in the H100 PCIe configuration. Despite this reduction, the H100 PCIe configuration still offers substantial computing capacity.
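The SM counts above translate into simple ratios. The following sketch only does that arithmetic with the numbers from the text; the function and dictionary names are made up for illustration.

```python
# Share of the full GH100 die that is active in each configuration.
FULL_GH100_SMS = 144
ACTIVE_SMS = {"H100 SXM5": 132, "H100 PCIe": 114}


def active_fraction(active: int, full: int = FULL_GH100_SMS) -> float:
    """Fraction of SMs enabled relative to the full chip."""
    return active / full


if __name__ == "__main__":
    for name, sms in ACTIVE_SMS.items():
        print(f"{name}: {sms}/{FULL_GH100_SMS} SMs = {active_fraction(sms):.1%}")
    ratio = ACTIVE_SMS["H100 PCIe"] / ACTIVE_SMS["H100 SXM5"]
    print(f"PCIe relative to SXM5: {ratio:.1%}")
```

Note that the SM ratio alone does not determine the performance gap, since clock frequencies and power limits also differ between the two configurations.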

According to the figures cited here, the H100 PCIe configuration reaches up to 3200 TFLOPS in FP8, 1600 TFLOPS in FP16 on the tensor cores, and 48 TFLOPS in double precision (FP64). In addition, the GPU is equipped with 456 tensor cores and texture units, four per SM, which handle the matrix-heavy computations typical of artificial intelligence and machine learning.

Although it has fewer active SMs than the full GH100 GPU and the H100 SXM5 configuration, the H100 PCIe configuration can still handle sophisticated computations in fields such as science, artificial intelligence, and machine learning. It is a good choice for applications that do not need the full SM count or that depend on the PCIe form factor because of space constraints.

NVIDIA positions the H100 NVL specifically for serving large language models such as ChatGPT, the chatbot developed by OpenAI. ChatGPT holds human-like conversations based on text input and requires an enormous amount of processing power to respond efficiently and quickly. With the H100 NVL, such a model can process large amounts of data in real time and respond faster, which improves the user experience.

The H100 PCIe is the version of the NVIDIA H100 GPU that is designed as a PCIe card. Compared to the more powerful SXM5 version, it operates at lower clock frequencies and therefore has a lower peak computing performance. The card has a maximum thermal design power (TDP) of 350 watts, half of the SXM5 version's 700 watts. Nevertheless, the H100 PCIe retains the 80 GB memory capacity, connected via a 5120-bit memory interface.

The H100 PCIe uses HBM2e memory, which enables a bandwidth of over 2 TB/s. HBM2e (High Bandwidth Memory 2e) is an enhanced version of HBM2 and offers higher data transfer rates and better energy efficiency. The H100 PCIe is particularly suitable for applications that require less computing power than the SXM5 version provides. Nevertheless, it can handle large data sets thanks to its extensive memory capacity and high memory bandwidth.
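The relationship between the 5120-bit memory interface mentioned earlier and the bandwidth of roughly 2 TB/s can be checked with a short calculation. This is a sketch under the usual assumption that peak bandwidth equals bus width times the per-pin data rate; the per-pin rate it derives is a result of that assumption, not a figure from the source, and the function names are illustrative.

```python
# Peak memory bandwidth from bus width and per-pin data rate.
# bandwidth [GB/s] = bus_width [bits] * pin_rate [Gbit/s] / 8 [bits per byte]


def peak_bandwidth_gb_s(bus_width_bits: int, pin_rate_gbit_s: float) -> float:
    """Theoretical peak bandwidth in GB/s."""
    return bus_width_bits * pin_rate_gbit_s / 8


def required_pin_rate_gbit_s(bus_width_bits: int, bandwidth_gb_s: float) -> float:
    """Per-pin data rate needed to reach a given bandwidth."""
    return bandwidth_gb_s * 8 / bus_width_bits


if __name__ == "__main__":
    bus_width = 5120      # bits, from the article
    target = 2000.0       # GB/s, i.e. 2 TB/s from the article
    rate = required_pin_rate_gbit_s(bus_width, target)
    print(f"Per-pin rate needed for {target:.0f} GB/s: {rate} Gbit/s")
    print(f"Check: {peak_bandwidth_gb_s(bus_width, rate):.0f} GB/s")
```

In other words, a bandwidth above 2 TB/s on a 5120-bit interface implies a per-pin data rate slightly above 3 Gbit/s.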

The H100 PCIe can be used in servers and workstations specialized in artificial intelligence, machine learning, deep learning or HPC applications. Overall, the H100 PCIe is a powerful GPU variant that offers large memory capacity and high memory bandwidth, while having a lower TDP than the SXM5 version.

Source: NVIDIA
