My French colleague Bruno Cormier not only followed NVIDIA's keynote live at CES, but also took the opportunity to gather as much information as possible about NVIDIA's announcement at CES 2019 that it will open its GeForce cards and drivers to Adaptive Sync technologies. This is particularly important because a lot of FreeSync displays are already available on the market, and from 15.01.2019 these usually significantly cheaper monitors can also be used with GeForce cards.

In addition to NVIDIA's official statements and information at CES, Bruno was also able to interview other sources, since many details regarding compatibility are of great interest and NVIDIA remained somewhat guarded about them. We will get to this in a moment. We have also added a sample video of various screens that have not been validated by NVIDIA. This article is intended as a kind of FAQ to help with decisions about buying and using non-certified monitors.

Are all FreeSync displays compatible?

In principle, yes. But the devil is in the detail. With the new driver, it will be possible to enable G-Sync manually in the driver even if the display has not been validated as "G-Sync Compatible" by NVIDIA. If the VESA Adaptive Sync standard has been implemented correctly in the monitor, there is no reason why it should not work. To ensure this, however, the monitor must have a DisplayPort 1.2a (or later) interface, which integrates the Adaptive Sync standard natively.

The very first FreeSync screens could therefore cause problems because their firmware often used a kind of "proto implementation" of FreeSync. These older models may not be fully compliant with the VESA standard, especially if their DisplayPort interface does not at least match version 1.2a. Even so, the ratio of 12 validated screens out of 400 tested by NVIDIA is currently alarmingly low, considering the validation criteria listed below (e.g. no flickering, no blanking).

The question arises as to what costs the monitor manufacturers could incur, and whether they simply rely on the testers (and customers) in order to save themselves this expense. NVIDIA, however, pointed out that the manufacturers themselves could be a bit more active and that there is definitely no extra license fee. I will go into this in more detail a little later.

What about HDMI VRR compatibility?

The question is quite justified, especially in the case of televisions. Although the HDMI VRR (Variable Refresh Rate) and AMD FreeSync technologies are based on the same VESA Adaptive Sync standard, they are not exactly identical and their compatibility is not guaranteed. AMD had to adapt its drivers at the time in order to be able to operate FreeSync via the HDMI interface. But even after that, most of the first FreeSync displays couldn't activate this technology via HDMI.

To ensure connectivity, it is strongly recommended that you use an end-to-end HDMI 2.1 connection, which integrates VRR natively. In some cases everything may also work via an HDMI 2.0b interface (because FreeSync is mostly satisfied with it), but the risk of incompatibility is then very high.

More importantly, as an alternative to a normal living-room TV, NVIDIA offers its BFGD (Big Format Gaming Display) screens with a DisplayPort interface, an advanced G-Sync controller, and a built-in Nvidia Shield. NVIDIA may not want too much competition in this segment, as it seems to lack a bit of motivation for HDMI VRR compatibility. NVIDIA has at least made it clear that no HDMI VRR compatibility is planned at this time. So for the living room you have to turn to BFGD screens.

Which GeForces are compatible?

NVIDIA has announced compatibility for the GeForce 10 and 20 series, i.e. Pascal and Turing GPUs. Compatibility is best when the DisplayPort output is used. The HDMI outputs, on the other hand, raise a question: Pascal offers HDMI 2.0b, just like Turing! As a result, full compatibility with TVs that offer HDMI 2.1 and VRR is difficult to achieve (backward compatibility). NVIDIA could of course upgrade its HDMI 2.0b outputs to 2.1, but that is not certain at the moment.

What are the certification criteria?

NVIDIA has already validated the first 12 of 400 screens tested, as officially announced. The full list is available on Nvidia's homepage. The first criteria for NVIDIA's "G-Sync Compatible" validation are:

- No flickering. By this, Nvidia means the random and sudden change in brightness depending on the refresh rate (see video below).

- No blanking. This means the image disappearing, even briefly, typically because a frame was dropped due to a synchronization error. Blanking appears randomly when the refresh rate changes (see the video below).

- Is LFC even required? Interestingly, the 12 displays validated by NVIDIA all feature Low Framerate Compensation (LFC), which repeats frames when the frame rate is low. This could help in particular to avoid flickering and blanking. However, NVIDIA explained to us that the presence of LFC is probably a coincidence and not an explicit criterion, and also said that it does not know exactly what LFC does in practice, as it could not verify this.

- No artifacts. This means no defects or glitches in the displayed image. Overdrive is often involved here, which, if set too high, can cause reverse ghosting or even rainbow effects on some screens.

- A minimum ratio for the refresh rate range supported by the display. The maximum must be at least 2.4 times the minimum (2.4:1), e.g. 60 to 144 Hz. There is therefore no fixed lower limit: the 12 validated screens start at 30, 40 or 48 Hz. On the other hand, most 60 or 75 Hz screens are automatically ruled out unless their lower limit is low enough (25 Hz or less for a 60 Hz panel, about 31 Hz or less for a 75 Hz panel). A 30 to 75 Hz display, however, could be validated!
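The 2.4:1 range criterion from the list above is simple arithmetic and can be sketched in a few lines of Python. This is only an illustration of the ratio rule as described here, not NVIDIA's validation tooling; the function names `meets_range_ratio` and `highest_allowed_min_hz` are made up for this example.

```python
REQUIRED_RATIO = 2.4  # minimum max/min ratio quoted for "G-Sync Compatible"


def meets_range_ratio(min_hz: float, max_hz: float,
                      required_ratio: float = REQUIRED_RATIO) -> bool:
    """Return True if the refresh range max_hz/min_hz reaches the required ratio."""
    if min_hz <= 0 or max_hz < min_hz:
        raise ValueError("expected 0 < min_hz <= max_hz")
    return max_hz / min_hz >= required_ratio


def highest_allowed_min_hz(max_hz: float,
                           required_ratio: float = REQUIRED_RATIO) -> float:
    """Return the highest lower limit that still satisfies the ratio for max_hz."""
    if max_hz <= 0:
        raise ValueError("expected max_hz > 0")
    return max_hz / required_ratio


if __name__ == "__main__":
    # Hypothetical refresh ranges, chosen only to illustrate the rule.
    for lo, hi in [(60, 144), (48, 144), (30, 75), (48, 75), (40, 60)]:
        verdict = "passes" if meets_range_ratio(lo, hi) else "fails"
        print(f"{lo}-{hi} Hz {verdict} the 2.4:1 ratio")
```

With these definitions, a 30 to 75 Hz range passes (ratio 2.5), while a 48 to 75 Hz range fails. Note that the ratio is only one of the criteria; passing it says nothing about flickering, blanking or artifacts.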

Does NVIDIA charge its partners for certification?

No. Several sources have now independently confirmed that NVIDIA does not require an extra payment for its "G-Sync Compatible" validation. The manufacturer's only investment is sending the monitor to NVIDIA for testing. It also appears that the monitor manufacturer can perform the tests according to NVIDIA's criteria in its own laboratories and send the results, together with certified evidence, to NVIDIA for validation. This is similar to the Green Light Program for graphics cards with its special software.

It's certainly also about NVIDIA retaining control of its G-Sync brand and protecting its reputation. The goal is to avoid unpleasant surprises, for example an unknown manufacturer launching a very poor-quality screen with a G-Sync label slapped on it. I believe that this is a perfectly legitimate concern, and one that is also in the interest of end customers.

By the way, the 400 monitors that have already been tested were purchased on the market and tested individually at NVIDIA. There are currently no stated plans regarding future FreeSync displays and NVIDIA's own internal tests of them. Still, 140 more monitors are currently being tested.

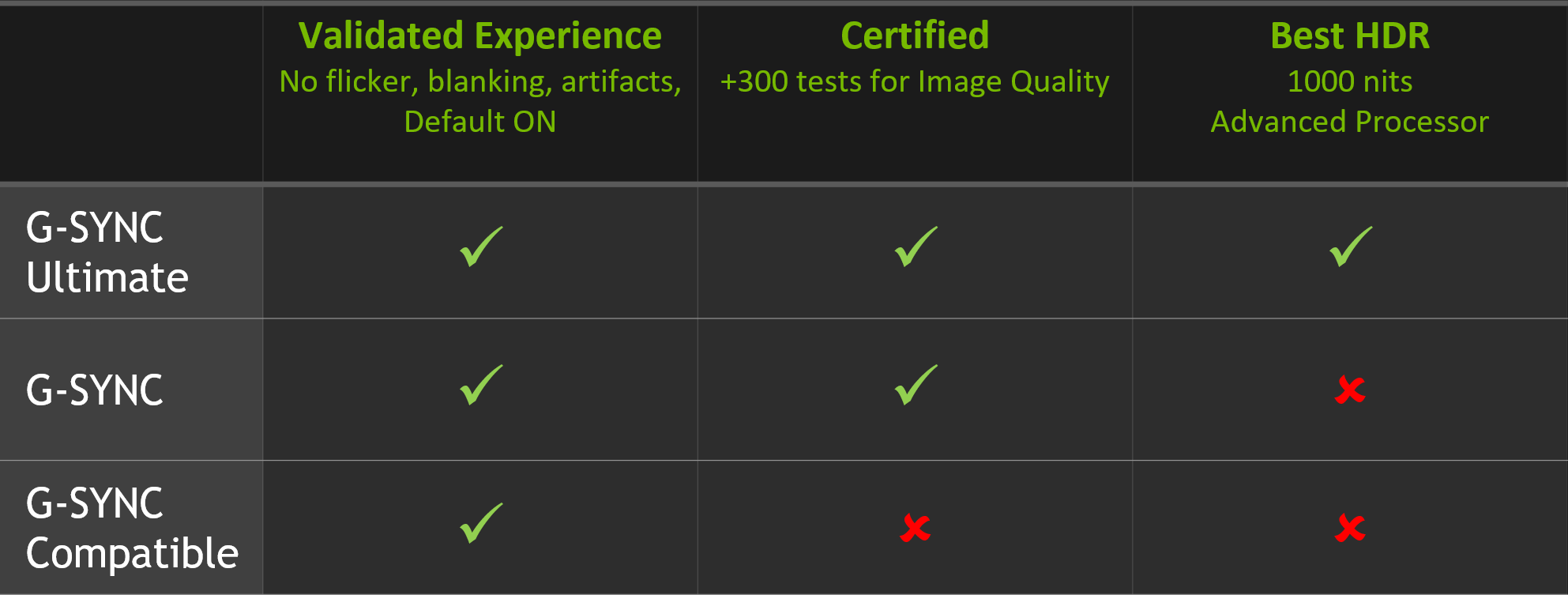

What are the benefits of G-Sync?

In order to justify the significantly higher purchase price, G-Sync is now presented as a high-end synchronization technology with some exclusive technical advantages. These benefits stem from the presence of NVIDIA's G-Sync controller board in the display:

- Minimal input lag

- A variable, dynamic overdrive that depends on the refresh rate

- A refresh range starting at 1 Hz (including frame multiplication)

- 300 display quality checks for all G-Sync screens

- HDR up to 1000 cd/m², and a more advanced display controller for G-Sync Ultimate and large BFGD displays, including backlight management with full-array local dimming (FALD)
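A refresh range that reaches down to 1 Hz, as in the list above, relies on each frame being shown more than once when the frame rate drops below what the panel can refresh at natively. The following Python sketch shows that idea in its simplest form. It is a simplified model under stated assumptions, not NVIDIA's controller logic or AMD's LFC algorithm; the function name `frame_multiplier` and the example ranges are hypothetical.

```python
import math
from typing import Optional


def frame_multiplier(fps: float, min_hz: float, max_hz: float) -> Optional[int]:
    """Return how often each frame must be shown so that the effective
    refresh rate (fps * multiplier) lands inside [min_hz, max_hz].

    Returns None if no whole-number multiplier fits the panel's range.
    Simplified model: real implementations also deal with frame-time
    prediction and transitions, which are ignored here.
    """
    if fps <= 0 or min_hz <= 0 or max_hz < min_hz:
        raise ValueError("expected fps > 0 and 0 < min_hz <= max_hz")
    if fps > max_hz:
        return None  # above the range; outside the scope of this sketch
    # Smallest whole number of repeats that lifts the rate to min_hz or above.
    multiplier = max(1, math.ceil(min_hz / fps))
    if fps * multiplier > max_hz:
        return None  # the range is too narrow for this frame rate
    return multiplier


if __name__ == "__main__":
    # Hypothetical (fps, min_hz, max_hz) combinations for illustration.
    for fps, lo, hi in [(100, 48, 144), (20, 48, 144), (1, 30, 144), (45, 48, 75)]:
        print(f"{fps} fps on a {lo}-{hi} Hz panel -> multiplier {frame_multiplier(fps, lo, hi)}")
```

In this model a narrow range such as 48 to 75 Hz has no valid multiplier at 45 fps (doubling gives 90 Hz, above the maximum), which is one plausible reason why a wide refresh range and frame multiplication tend to go together.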

What about G-Sync for laptops?

That's a bit tricky. G-Sync on laptops has been implemented from the very beginning without a real, native G-Sync controller. The VESA Adaptive Sync standard has therefore been used right from the start! This type of G-Sync is thus slightly less advanced than that of desktop monitors and offers no advantage over the "G-Sync Compatible" validation, at least for the time being.

However, notebook manufacturers currently have to pay NVIDIA to activate G-Sync. One might suspect that this rather unfavourable situation could now change, because by definition all current laptops are no different from devices with "G-Sync Compatible" certified displays connected via DisplayPort. But NVIDIA has also made it clear that nothing will change for laptops. Everything remains the same: manufacturers need to have G-Sync functionality enabled through NVIDIA, mainly because the LCD panel used in the laptop has to be validated.

What happens to the current G-Sync screens?

Manufacturers and resellers are a little panicked. How are they supposed to sell their stock of G-Sync displays, whose prices are now difficult to justify and which, moreover, offer hardly any real benefits? Some sources have confirmed that the current G-Sync screens could end up in clearance offers.

So if you already have a reasonably recent NVIDIA graphics card and are looking for a new monitor, you might save a lot of money by watching the market closely. One thing is clear: owners of G-Sync monitors can of course continue to use them. There is no need to fear that they could suddenly become incompatible. NVIDIA has also assured us of this.

Finally, I would like to thank Bruno Cormier, who collected all this information on the spot, and the sources who also told us things that were unfortunately missing from the official statements.
