It is clear to many that AI has become an important part of technology. The open question is which company leads when it comes to AI hardware. Accelerators make this comparison particularly interesting, which is why Stability AI used Stable Diffusion to benchmark Intel’s Gaudi 2 against NVIDIA’s H100 and A100 GPUs.

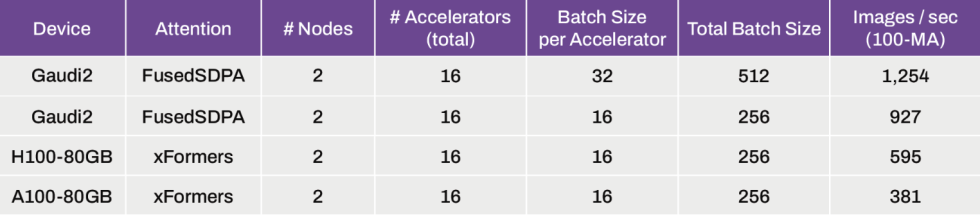

First of all, what is Stable Diffusion? It is a generative AI model that turns text prompts into realistic images, developed by Stability AI. Stable Diffusion 3 comes in sizes ranging from 800M to 8B parameters; the 2B-parameter version was used for this analysis. The first benchmark ran on 2 nodes, i.e. 16 accelerators, and shows an interesting result.

The Gaudi 2 system processed 927 training images per second, 1.5 times the throughput of NVIDIA’s H100-80GB. In addition, Gaudi 2’s 96 GB of High Bandwidth Memory (HBM2E) made it possible to fit a batch size of 32 per accelerator, which raised the training rate further to 1,254 images per second.

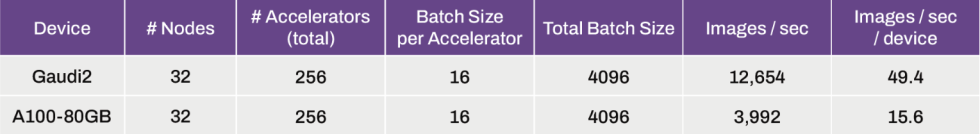

The test was then scaled up to 32 nodes, which corresponds to 256 accelerators. Here, too, Gaudi 2 came out clearly ahead: it processed 12,654 images per second, slightly more than 3x the throughput of the A100-80GB.
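To put the training figures on a common footing, the following minimal sketch converts them to per-accelerator rates and derives the baselines implied by the quoted speedups. The function names are hypothetical, and the derived H100 and A100 values are back-of-the-envelope estimates based only on the ratios in this article, not measured results.

```python
# Training throughput figures quoted in the article (images per second).
GAUDI2_2_NODES = 927         # 2 nodes = 16 accelerators
GAUDI2_2_NODES_BS32 = 1254   # same setup, batch size 32 per accelerator
GAUDI2_32_NODES = 12654      # 32 nodes = 256 accelerators


def per_accelerator(total_throughput, accelerators):
    """Average throughput contributed by a single accelerator."""
    return total_throughput / accelerators


def implied_baseline(throughput, speedup):
    """Throughput of the slower system implied by a quoted speedup factor."""
    return throughput / speedup


if __name__ == "__main__":
    print(f"2 nodes:          {per_accelerator(GAUDI2_2_NODES, 16):.1f} img/s per accelerator")
    print(f"2 nodes, bs 32:   {per_accelerator(GAUDI2_2_NODES_BS32, 16):.1f} img/s per accelerator")
    print(f"32 nodes:         {per_accelerator(GAUDI2_32_NODES, 256):.1f} img/s per accelerator")

    # Gain from the larger batch size on the 2-node system.
    gain = GAUDI2_2_NODES_BS32 / GAUDI2_2_NODES - 1
    print(f"Batch size gain:  {gain:.0%}")

    # Estimates only: derived from the "1.5 times" and "slightly more than 3x" claims.
    print(f"Implied H100 (16 accelerators):  ~{implied_baseline(GAUDI2_2_NODES, 1.5):.0f} img/s")
    print(f"Implied A100 (256 accelerators): <{implied_baseline(GAUDI2_32_NODES, 3.0):.0f} img/s")
```

Because the article says "slightly more than 3x", dividing by exactly 3 gives only an upper bound for the A100 system.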

A second model was also tested: Stable Beluga 2.5 70B, a fine-tuned version of LLaMA 2 70B that builds on the Stable Beluga 2 model. The company ran this training benchmark on 256 Gaudi 2 accelerators. Running the PyTorch code without additional optimizations, the average total throughput was 116,777 tokens per second. In an inference test with the 70B language model, Gaudi 2 generated 673 tokens per second per accelerator, using an input token size of 128 and an output token size of 2048. Compared with TensorRT-LLM on the A100, which reaches 525 tokens per second, Gaudi 2 appears to be 28% faster.
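The language model numbers can be checked the same way. This short sketch, with hypothetical function names, computes the average training throughput per accelerator and the relative inference speedup from the figures quoted above. Note that the training and inference rates measure different workloads and are not directly comparable.

```python
# Figures quoted in the article for Stable Beluga 2.5 70B.
TRAIN_TOKENS_PER_S = 116777   # total training throughput on 256 Gaudi 2 accelerators
GAUDI2_INFER_TOKENS = 673     # inference tokens/s per accelerator (Gaudi 2)
A100_INFER_TOKENS = 525       # inference tokens/s on A100 with TensorRT-LLM


def tokens_per_accelerator(total_tokens_per_s, accelerators):
    """Average training throughput per accelerator."""
    return total_tokens_per_s / accelerators


def relative_speedup(new, baseline):
    """Fractional speedup of `new` over `baseline` (0.28 means 28% faster)."""
    return new / baseline - 1


if __name__ == "__main__":
    per_device = tokens_per_accelerator(TRAIN_TOKENS_PER_S, 256)
    print(f"Training: {per_device:.1f} tokens/s per accelerator")

    speedup = relative_speedup(GAUDI2_INFER_TOKENS, A100_INFER_TOKENS)
    print(f"Inference: Gaudi 2 is {speedup:.0%} faster than A100")
```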

According to Stability AI, Gaudi 2 is expected to outperform the A100 in image generation with further optimization. For now, the A100 generates images 40% faster, mainly thanks to TensorRT optimization. The only question is how long that will remain the case.

“On inference tests with the Stable Diffusion 3 8B parameter model the Gaudi 2 chips offer inference speed similar to Nvidia A100 chips using base PyTorch. However, with TensorRT optimization, the A100 chips produce images 40% faster than Gaudi 2. We anticipate that with further optimization, Gaudi 2 will soon outperform A100s on this model. In earlier tests on our SDXL model with base PyTorch, Gaudi 2 generates a 1024×1024 image in 30 steps in 3.2 seconds, versus 3.6 seconds for PyTorch on A100s and 2.7 seconds for a generation with TensorRT on an A100.”

Source: Stability AI
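For the SDXL timings in the quote above, a lower latency means a faster system, so the ratios are easy to read the wrong way round. The following sketch is only an illustration with hypothetical names: it converts the three quoted latencies into relative speed factors and denoising steps per second.

```python
# SDXL latencies quoted by Stability AI: seconds per 1024x1024 image at 30 steps.
LATENCY_S = {
    "Gaudi 2 (PyTorch)": 3.2,
    "A100 (PyTorch)": 3.6,
    "A100 (TensorRT)": 2.7,
}
STEPS = 30


def speed_factor(latency, reference_latency):
    """How many times faster `latency` is than `reference_latency` (>1 means faster)."""
    return reference_latency / latency


def steps_per_second(latency, steps=STEPS):
    """Denoising steps completed per second."""
    return steps / latency


if __name__ == "__main__":
    reference = LATENCY_S["Gaudi 2 (PyTorch)"]
    for name, latency in LATENCY_S.items():
        factor = speed_factor(latency, reference)
        print(f"{name}: {steps_per_second(latency):.2f} steps/s, "
              f"{factor:.2f}x the speed of Gaudi 2")
```

On these numbers Gaudi 2 is about 12.5% faster than the A100 with base PyTorch, while the A100 with TensorRT is about 18.5% faster than Gaudi 2.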
