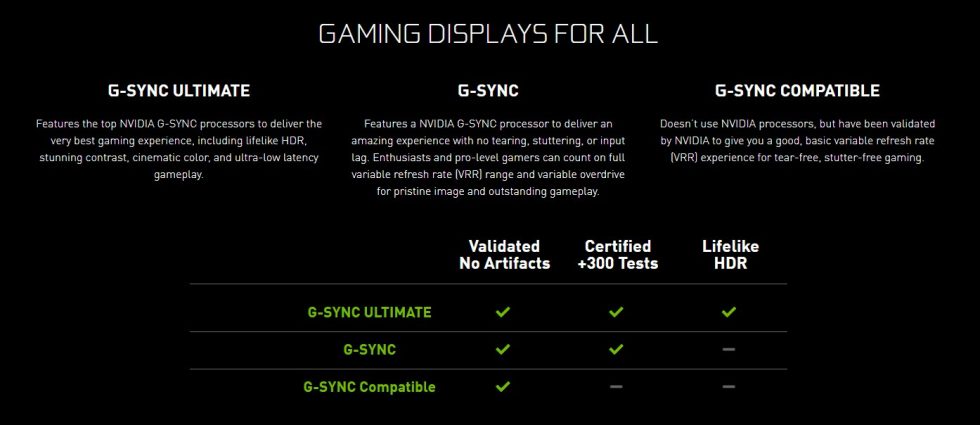

A lot has happened since Nvidia’s first G-Sync monitors came out. For a long time now, G-Sync on Nvidia graphics cards has also worked with cheaper “Freesync” monitors. The difference is that in this case the frame synchronization between GPU and monitor is handled purely via the DisplayPort protocol, and no additional hardware is required on the monitor side.

But according to Nvidia, this dedicated hardware is built into the official “G-Sync” products for a reason. Lower latency, better performance in low FPS ranges and HDR compatibility are features that are said to be possible only with Nvidia’s dedicated hardware, and products offering them have since been certified as “G-Sync Ultimate”.

I recently bought such a “G-Sync Ultimate” monitor for private use. For a sysadmin and gamer, such a large investment is still unreasonable, but at least justifiable, or so I tell myself. After a long period of deliberation I bought the AOC Agon AG353UCG for 1800 euros to replace my previous Acer XB270HU, a G-Sync monitor from the earliest days.

After switching on the AG353UCG for the first time, I immediately noticed the internal fan, which is not mentioned on the product page but which makes the new monitor a source of noise at my otherwise quiet workplace. My astonishment was quickly followed by the urge to get to the bottom of it all and disassemble the monitor.

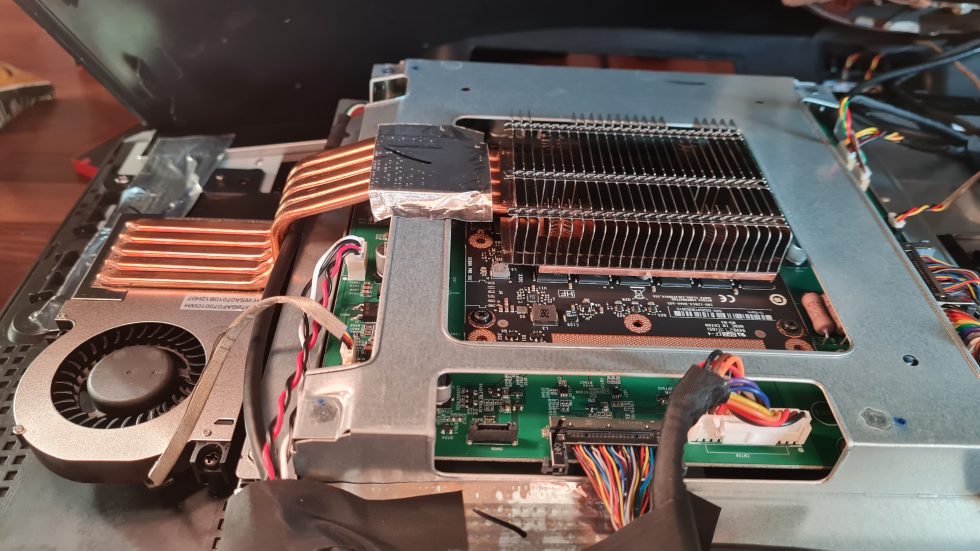

A few screws and dozens of plastic clips later, this sight presented itself. Five heatpipes, a massive copper heatsink and a radial fan, of the kind known from mobile workstation notebooks, actually provide active cooling in this monitor, and plenty of it.

Simply unplugging the fan and relying on passive cooling, AMD X570S style, quickly turned out to be a bad idea. After about 30 minutes of gaming in HDR at the lowest brightness, a “Critical Temperature – Emergency Shutdown” message appears on the display’s OSD and the monitor shuts down. It can only be restarted by disconnecting and reconnecting the power supply. The waste heat must therefore be significantly higher than I initially suspected and, honestly, hoped.

So I disassembled the monitor again and took a look at what was underneath the copper cooling assembly. What I found was, first, very viscous thermal paste between the base plate and the actively cooled chip, and underneath it a black board whose layout and components at first sight resemble a graphics card in MXM format, as known from notebooks.

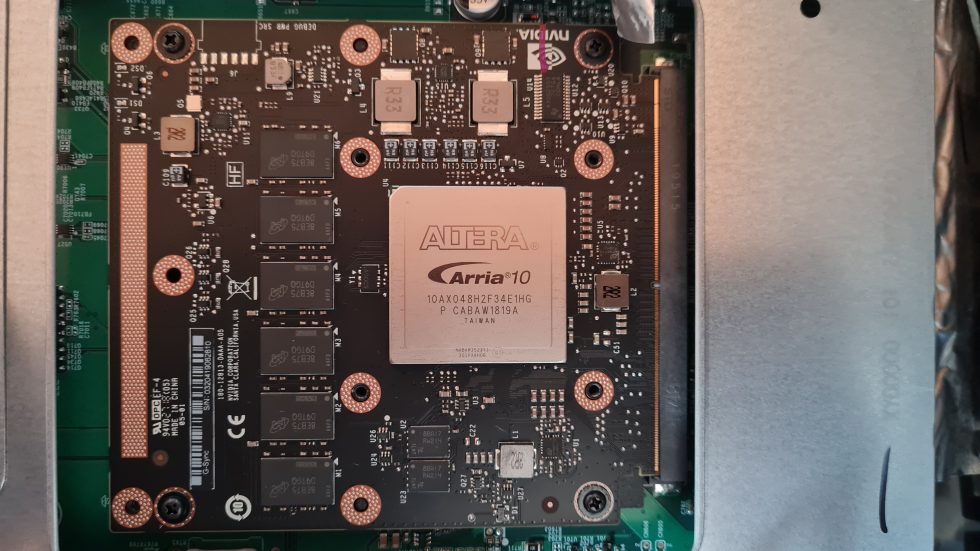

Then, underneath copious amounts of cement-like paste, an “Altera Arria 10” chip emerges, with the model number 10AX048H2F34E1HG. A little research shows it to be a field-programmable gate array, or FPGA for short, which is, simply put, a microchip with programmable functions. Because of their programmability, FPGAs are relatively expensive compared to traditional fixed-function microchips. Their advantage is that no special chip has to be produced and that the functionality can be programmed afterwards via software.

Fortunately, the datasheet for this chip is public, and besides Altera, Intel also shows up as the manufacturer. The product number documentation can then be used to identify the exact variant of the Arria 10 chip installed here.


So this A10X is a variant with 17.4 Gbps transceivers, 480K logic elements and 24 transceivers with a speed grade of “2”, built into an FBGA package with 1152 pins measuring 35 mm x 35 mm. In addition, the chip has an operating temperature range of 0 to 100 °C, an FPGA speed grade of “1”, the power level “High Performance” and a “RoHS6” classification. Voltage and power consumption depend on the respective programming and therefore cannot be determined more precisely without further effort, but judging by the cooling requirements, it should draw more than a normal desktop chipset with, for example, 15 W.
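To make the reading of the product number reproducible, here is a minimal Python sketch that splits 10AX048H2F34E1HG into the fields listed above. The field positions, the names `FIELDS`, `KNOWN_CODES` and `decode_part_number`, and the lookup tables are my own assumptions for illustration. The tables contain only the codes decoded in this article, not the vendor's full ordering-code table.

```python
# Hypothetical decoder for the ordering code printed on the chip.
# Field positions and meanings follow the reading given in this article;
# they are NOT a complete copy of the vendor's ordering-code table.

FIELDS = [
    # (field name, start index, end index)
    ("family", 0, 3),
    ("transceiver_type", 3, 4),
    ("logic_elements", 4, 7),
    ("transceiver_count", 7, 8),
    ("transceiver_speed_grade", 8, 9),
    ("package_type", 9, 10),
    ("package_code", 10, 12),
    ("operating_temperature", 12, 13),
    ("fpga_speed_grade", 13, 14),
    ("power_option", 14, 15),
    ("rohs", 15, 16),
]

# Only the codes that appear in this one part number are known here.
KNOWN_CODES = {
    "family": {"10A": "Arria 10"},
    "transceiver_type": {"X": "17.4 Gbps transceivers"},
    "logic_elements": {"048": "480K logic elements"},
    "transceiver_count": {"H": "24 transceivers"},
    "transceiver_speed_grade": {"2": "2"},
    "package_type": {"F": "FBGA"},
    "package_code": {"34": "1152 pins, 35 mm x 35 mm"},
    "operating_temperature": {"E": "0 to 100 °C"},
    "fpga_speed_grade": {"1": "1"},
    "power_option": {"H": "High Performance"},
    "rohs": {"G": "RoHS6"},
}


def decode_part_number(part_number: str) -> dict:
    """Split a 16-character ordering code into its named fields."""
    if len(part_number) != 16:
        raise ValueError(f"expected 16 characters, got {len(part_number)}")
    decoded = {}
    for name, start, end in FIELDS:
        code = part_number[start:end]
        meaning = KNOWN_CODES[name].get(code)
        if meaning is None:
            # Refuse to guess codes this article did not decode.
            raise ValueError(f"unknown {name} code: {code!r}")
        decoded[name] = meaning
    return decoded


if __name__ == "__main__":
    for name, meaning in decode_part_number("10AX048H2F34E1HG").items():
        print(f"{name}: {meaning}")
```

Any other Arria 10 variant would raise a `ValueError` here on purpose, since guessing the meaning of codes not covered in the article would be worse than failing.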

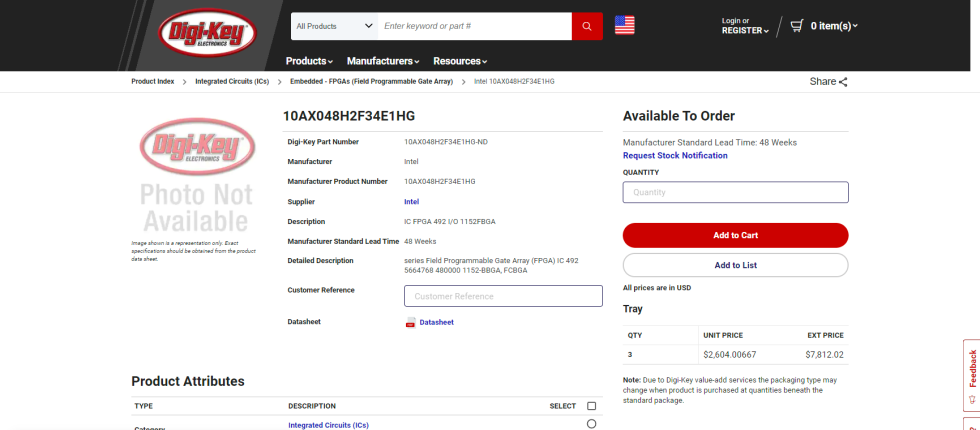

Put the chip number into Google in search of the list price and you will be amazed. Sites like Digi-Key ask over 2000 euros or 2500 USD for it, although a volume discount would of course still have to be deducted for larger quantities. Moreover, Nvidia, as a bulk buyer, will probably not buy from such intermediaries but directly from Intel.

Nevertheless, even if one assumes a hypothetical purchase price of “only” 1000 USD, Nvidia still has to program the FPGA, buy the remaining components, have the module manufactured and then resell the finished G-Sync module to the monitor manufacturers. A cheaper “G-Sync Ultimate” monitor like the LG UltraGear 34GP950G-B is already available for about 1250 euros, and that includes the panel and everything else.

So the question is, how can such an expensive FPGA be installed in a monitor that costs even less than the FPGA’s list price? Do monitor manufacturers bear the cost of the G-Sync module? I don’t think so. Is Nvidia swallowing a lot of the cost of the FPGA and selling the G-Sync modules at a loss in exchange for pushing the G-Sync ecosystem with their GeForce graphics cards? That sounds more plausible.
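The price comparison can be put into rough numbers. The following Python sketch uses only the figures quoted in this article. The exchange rate is an assumption derived from the rounded “2000 Euro or 2500 USD” list price, not a real market rate, and all variable names are made up for illustration.

```python
# Rough price arithmetic using only figures quoted in the article.
LIST_PRICE_EUR = 2000.0          # FPGA list price ("over 2000 Euro")
LIST_PRICE_USD = 2500.0          # same list price in USD
HYPOTHETICAL_FPGA_USD = 1000.0   # the "only" 1000 USD assumption
LG_MONITOR_EUR = 1250.0          # LG UltraGear 34GP950G-B
AOC_MONITOR_EUR = 1800.0         # AOC Agon AG353UCG

# ASSUMPTION: exchange rate implied by the two rounded list prices.
usd_per_eur = LIST_PRICE_USD / LIST_PRICE_EUR

# Hypothetical bulk price of the FPGA converted to euros.
hypothetical_fpga_eur = HYPOTHETICAL_FPGA_USD / usd_per_eur

# Share of the LG retail price taken up by the FPGA alone,
# and the list price relative to both monitors.
fpga_share_of_lg = hypothetical_fpga_eur / LG_MONITOR_EUR
list_vs_lg = LIST_PRICE_EUR / LG_MONITOR_EUR
list_vs_aoc = LIST_PRICE_EUR / AOC_MONITOR_EUR

if __name__ == "__main__":
    print(f"implied rate: {usd_per_eur:.2f} USD per EUR")
    print(f"hypothetical FPGA price: {hypothetical_fpga_eur:.0f} EUR")
    print(f"share of LG monitor price: {fpga_share_of_lg:.0%}")
    print(f"list price / LG price: {list_vs_lg:.2f}")
    print(f"list price / AOC price: {list_vs_aoc:.2f}")
```

Under these assumptions, even the halved hypothetical price would account for roughly two thirds of the LG monitor's retail price, before the panel, the rest of the module and any margins are counted.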

And why does Nvidia use an FPGA at all instead of having custom chips made, which would be more expensive to design but cheaper per unit? Are the G-Sync Ultimate quantities really that low? We don’t have the answers to all of these questions yet, but it certainly makes for interesting discussion material.
