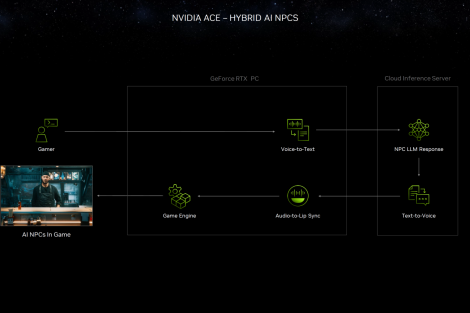

NVIDIA’s ACE (Avatar Cloud Engine) is an impressive technology that has yet to be seen in action. However, with the latest announcement from Team Green, we’re getting closer. The company today unveiled its production microservices for ACE, which let game, tool and app developers integrate AI models into avatars or NPCs and build next-generation gaming and app experiences.

ACE has been covered many times before. In 2023, NVIDIA expanded the technology with the introduction of NeMo SteerLM, which allows developers to experiment with customizable attributes for digital avatars. The recent collaboration with Inworld AI goes further and lets these NPCs act in a context-aware manner.

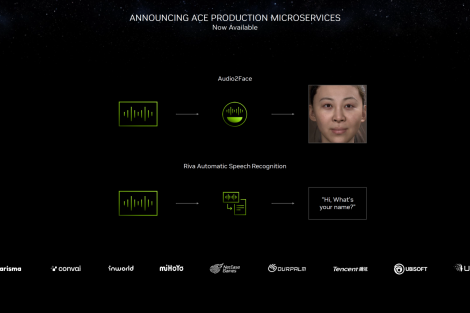

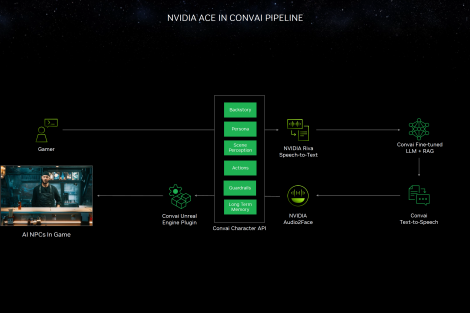

To usher in 2024, NVIDIA has collaborated with several developers through its ACE microservices partnerships to enhance AI-driven NPCs using Audio2Face (A2F) and Riva Automatic Speech Recognition (ASR). The developers involved include Ubisoft, Tencent, UneeQ, Ourpalm, NetEase Games, miHoYo, Convai, Charisma AI and the aforementioned Inworld. A demonstration by NVIDIA will show how Convai’s advanced LLM RAG models for text-to-speech are used to create highly convincing interactions with NPCs in games. The NPCs not only respond to the player based on the tone of their speech, but also interact with other NPCs in their environment based on the text they have just received from the player.

From a gaming perspective, this could create some truly innovative experiences that have not been seen before. We’re excited to see how it is implemented in a high-quality AAA game, and with ACE microservices we are one step closer to that.
