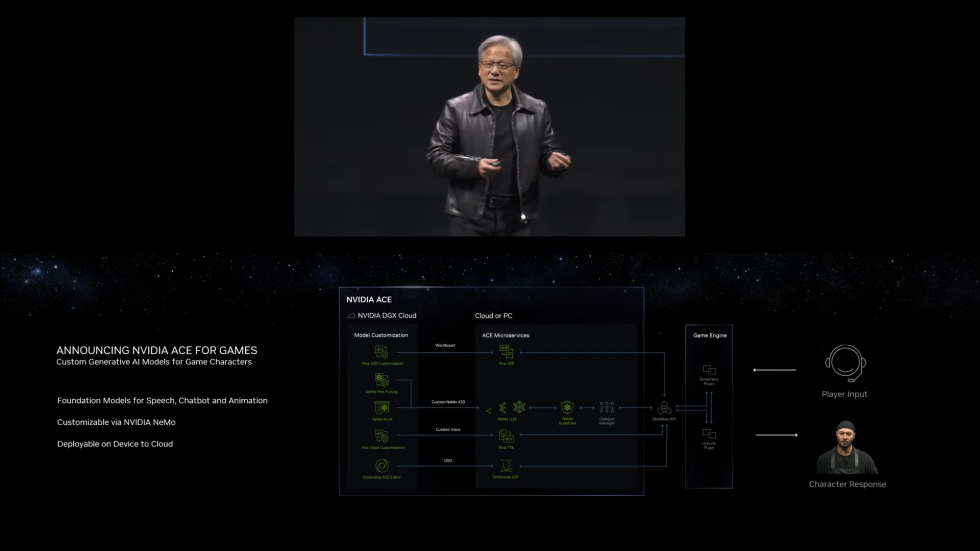

At Computex 2023, NVIDIA presented its ACE (Avatar Cloud Engine) technology for the first time. The goal was to help developers integrate unique AI models for speech, conversation and movement into game characters. Later, the technology was further enhanced with NVIDIA’s NeMo SteerLM tools to add an additional layer of customization for each character’s attributes. The company gave an interesting demonstration, which we reported on in detail.

NVIDIA is now taking ACE a step further, promising more realism and easier access for developers building AI-controlled NPCs, avatars and digital humans. The new version of ACE lets developers use cloud APIs for Automatic Speech Recognition (ASR), Text-to-Speech (TTS), Neural Machine Translation (NMT) and Audio2Face (A2F). All of these tools are already available through the early access program and integrate with popular rendering tools such as Unreal Engine 5, which is considered a powerhouse for developing next-gen AAA games.

Besides developing its own technologies, NVIDIA is also cooperating with start-ups such as Inworld AI, which uses Generative AI to create next-gen game characters. We have reported on Inworld AI's technology before. Together with Xbox, Inworld AI has developed an AI-powered game development toolkit that enables dynamic stories, quests and dialog for future AAA titles. Modders have also integrated the technology into existing games such as GTA V and Skyrim, where it enables entirely new storylines and immersive dialog options.

The upcoming MMO Avalon will also use the technology to let players generate their own content with contextually aware NPCs. In its blog, NVIDIA reveals more details about Inworld's AI "Character Engine", which is set to become open source after Inworld secured significant funding from companies such as Lightspeed Studios, Microsoft and Samsung. The Character Engine consists of three main layers.

Character Brain Layer: orchestrates a character's performance by synchronizing the multiple machine learning models that make up its personality, such as those for text-to-speech, automatic speech recognition, emotions, gestures and animations.

The layer also enables AI-based NPCs to learn and adapt, navigate relationships and perform motivated actions. For example, users can create triggers using the “Goals and Action” feature to program NPCs to behave in a certain way in response to a given player input.
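To make the idea of input-driven triggers more concrete, here is a minimal Python sketch of the general pattern: a player input is checked against a list of conditions, and the first match selects a scripted NPC action. This is not Inworld's API. The `Trigger` and `NPC` classes, the simple keyword matching and the action names are hypothetical and serve only as an illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Trigger:
    """Hypothetical trigger: if `condition` matches the player input, the NPC performs `action`."""
    name: str
    condition: Callable[[str], bool]
    action: str


@dataclass
class NPC:
    name: str
    triggers: List[Trigger] = field(default_factory=list)

    def react(self, player_input: str) -> Optional[str]:
        # Check triggers in registration order; the first matching trigger wins.
        for trigger in self.triggers:
            if trigger.condition(player_input):
                return trigger.action
        # No trigger fired: a real engine would fall back to free-form dialog (not modeled here).
        return None


# Hypothetical example: a guard NPC with two scripted reactions.
guard = NPC("Guard", [
    Trigger("bribe", lambda text: "gold" in text.lower(), "open_gate"),
    Trigger("threat", lambda text: "attack" in text.lower(), "call_reinforcements"),
])

print(guard.react("I have gold for you"))  # open_gate
print(guard.react("Hello there"))          # None
```

A production system would presumably match on intent or context rather than on keywords; the keyword check simply keeps the sketch self-contained.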

Contextual Mesh Layer: allows developers to set parameters for content and safety mechanisms, custom knowledge and narrative controls. Game developers can use the “Relationships” feature to create emergent narratives, such that an ally can turn into an enemy or vice versa based on how players treat an NPC.
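One simple way to picture an ally turning into an enemy is a relationship score that player actions push up or down, with thresholds that determine the NPC's stance. The following Python sketch illustrates that idea only. The `Relationship` class, the score range of -100 to +100 and the threshold values are hypothetical assumptions, not details of Inworld's "Relationships" feature.

```python
from dataclasses import dataclass

# Hypothetical thresholds on a score ranging from -100 (hostile) to +100 (friendly).
ENEMY_THRESHOLD = -30
ALLY_THRESHOLD = 30


@dataclass
class Relationship:
    score: int = 0

    def record(self, delta: int) -> None:
        # Positive deltas for helpful player actions, negative for harmful ones,
        # clamped to the range [-100, 100].
        self.score = max(-100, min(100, self.score + delta))

    @property
    def status(self) -> str:
        if self.score <= ENEMY_THRESHOLD:
            return "enemy"
        if self.score >= ALLY_THRESHOLD:
            return "ally"
        return "neutral"


rel = Relationship(score=40)
print(rel.status)  # ally
rel.record(-50)    # the player betrays the NPC: 40 - 50 = -10
print(rel.status)  # neutral
rel.record(-30)    # further mistreatment: -10 - 30 = -40
print(rel.status)  # enemy
```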

Real-Time AI Layer: ensures optimal performance and scalability for real-time experiences.

Running the Character Engine requires powerful AI hardware. For this purpose, Inworld AI uses NVIDIA's A100 accelerator, based on the Ampere architecture, which offers the company the best balance between cost and performance.

Technologies like NVIDIA ACE and Inworld AI's Character Engine are pushing the limits of what Generative AI can achieve. NVIDIA has many plans for the future of AI, including fully neural rendering approaches, and this is just a glimpse of what's to come. One major AAA game expected to benefit from NVIDIA ACE technology is S.T.A.L.K.E.R. 2, which is due out next year. It will be exciting to see how AI shapes the next generation of NPCs.

Source: YouTube

Read all comments in the igor´sLAB Community →