Game performance

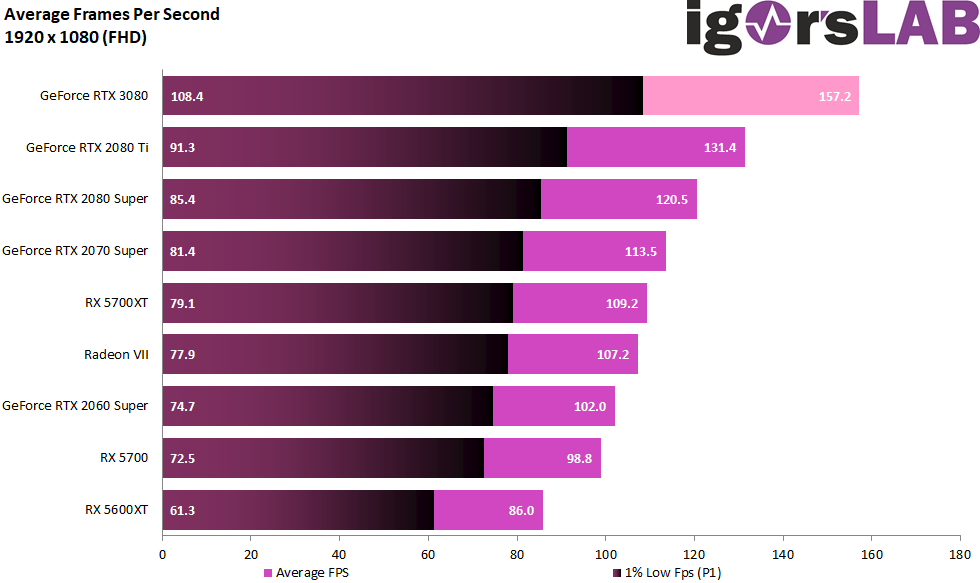

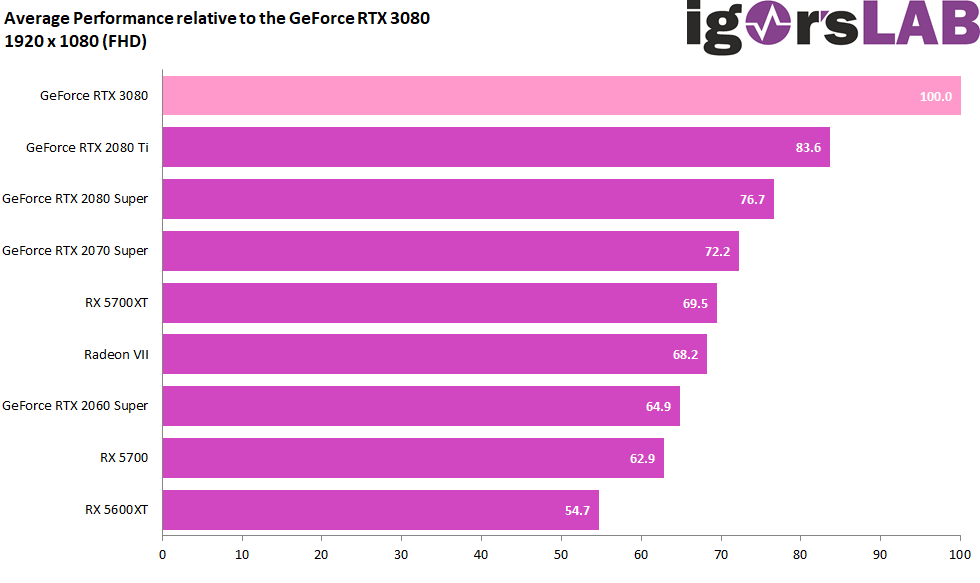

The following result overviews show the average FPS of each card, cumulated over all games and then also converted to percentages for comparison. I tested a total of 10 games in three resolutions, but in the end I left the Full-HD resolution out of all the individual benchmarks. The reason is quickly explained: neither the water-cooled Ryzen 9 3900XT, overclocked to 4.5 GHz, nor the Intel Core i9-10900K, running at 5 GHz and also properly water-cooled, could consistently avoid bottlenecking this graphics card. In some games the Intel CPU was slightly ahead, in others both CPUs failed alike. Since this saves me 240 charts and I still have everything I need, I will at least include these values at the end, on the summary page.
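The cumulated comparison described above can be sketched roughly as follows. This is only an illustration: the use of an arithmetic mean, the function name `relative_performance`, the card labels and all FPS numbers are assumptions, not the values or the exact method behind the charts.

```python
from statistics import mean


def relative_performance(fps_by_card, baseline):
    """Average each card's FPS over all games, then express every
    average as a percentage of the chosen baseline card."""
    averages = {card: mean(fps) for card, fps in fps_by_card.items()}
    base = averages[baseline]
    return {card: round(100.0 * avg / base, 1) for card, avg in averages.items()}


# Hypothetical per-game average FPS (one entry per game), not measured data.
sample = {
    "Card A": [100.0, 80.0, 60.0],
    "Card B": [125.0, 100.0, 75.0],
}

result = relative_performance(sample, baseline="Card A")
print(result)
```

A geometric mean would be an equally plausible choice for the cumulation step; it weights a single very high FPS title less heavily than the arithmetic mean used in this sketch.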

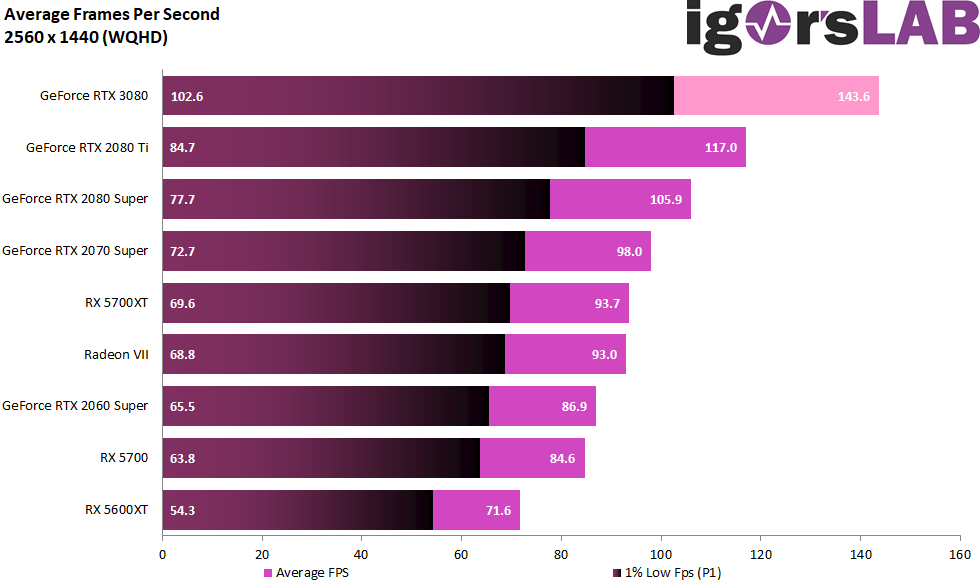

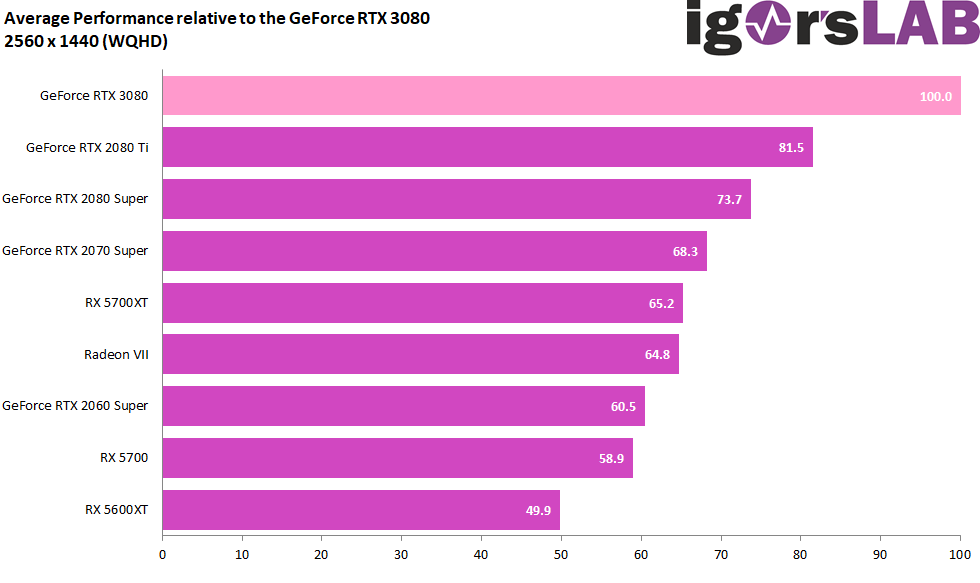

In WQHD, the GeForce RTX 3080 pulls ahead a bit more and its lead increases slightly.

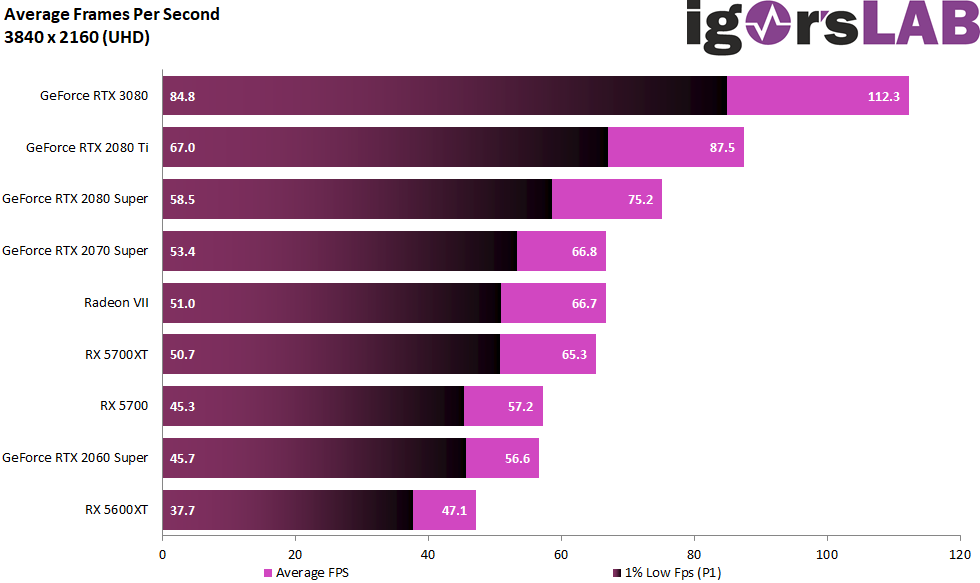

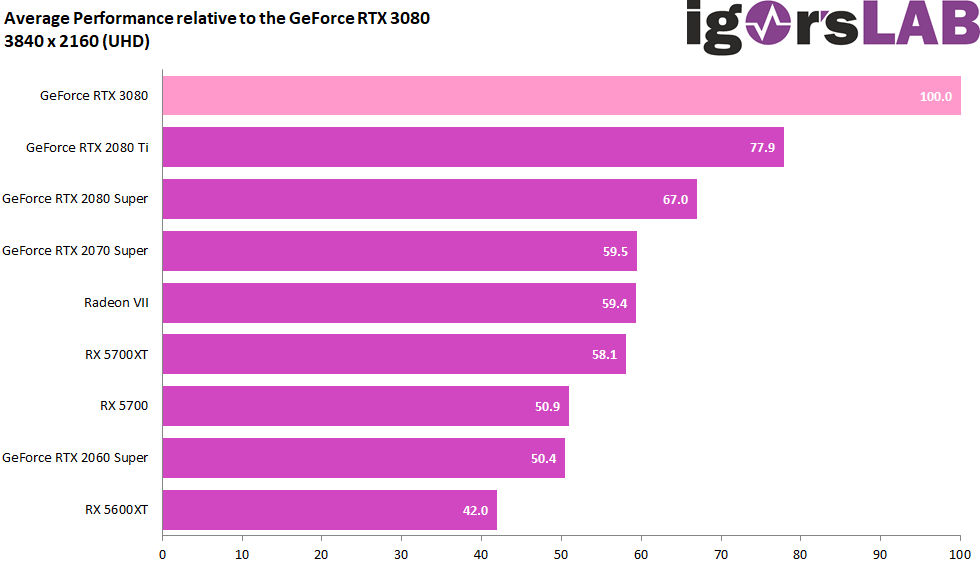

Ultra-HD is where the action really happens. We must never forget that this is not cherry-picking: the selection also includes games that can easily break through the fair-weather front in every situation.

In summary: This is not meant to be a sales pitch, but a test that is as objective and fair as possible, even if the results are solid enough that you have to fight the “want to have” factor a bit when it comes to gaming. Especially at higher resolutions, this card is a real powerhouse, because even if the lead over the GeForce RTX 2080 Super doesn’t always reach the high double digits, it’s always enough to effectively reach the next level of playability, with many quality sliders pushed all the way to the right. Particularly when a game suits the GeForce RTX 3080 and the new architecture, gains of up to 80% over the RTX 2080 Super are possible, and an RTX 2080 Ti is beaten by almost 40%. This too must be noted if one wants to be fair. But it is only the beginning and, unfortunately, cannot be generalized across all game engines.

It is also exactly the kind of increase that has always been demanded for gaming in Ultra-HD, for example. Here you go, here’s an offer for it. The fact that the memory, at 10 GB, could occasionally become scarce, in Ultra-HD at the latest, is down to NVIDIA’s design and also to many game developers, who fill up with data whatever can be filled. That is of course no blanket excuse, and it remains my only point of criticism. The memory should have been doubled by now, price point or no price point.

DLSS 2.0 will definitely help here, because what NVIDIA has presented with DLSS is almost a kind of wonder weapon, as long as it is implemented. Of course, the game developers are called upon here as well, so NVIDIA should not simply rely on them. This also applies to the inflationary use of demanding ray tracing features. Less is more, and with a sensible implementation no card needs to gasp for air. In combination with DLSS and Brain 2.0, the whole package is certainly forward-looking, if you are into such things. Dying more beautifully can be fun, especially when it no longer happens in slow motion.

And for the quick clickers there’s NVIDIA Reflex, provided you have an Ampere card, a suitable G-Sync monitor and a game that integrates the feature. In that case, system latency can be minimized. I recently published a longer article about this. The Reflex Low Latency mode in games like Valorant or Apex Legends is certainly an attractive offer, but it still has to prove itself. And then there are those nasty latencies on the Internet, which NVIDIA can’t do anything about but which can still spoil your success. But the sum always gets smaller if you at least clear away the pile in front of your own door. That can often be useful. See article.

But the discerning customer always has something to complain about anyway (which is good, by the way, and keeps the suppliers on their toes), and in return NVIDIA keeps all options open to later top a possible Navi2x card with 16 GB of memory by offering 20 GB. And who remembers the mysterious SKU20 between the GeForce RTX 3080 and RTX 3090? If AMD doesn’t screw it up again this time, this SKU20 will surely become the tie-break in pixel tennis. We’ll see.

Application Performance and Studio

A total of 17 benchmarks and many hours later, you can almost feel even more enthusiasm in this area than a pure gamer can perhaps understand. Because whenever FP32 computations are involved and, for example, every final render makes you feel a little closer to retirement, the RTX 3080 is a spoilsport in the positive sense. In some cases even a Quadro RTX 6000 is outclassed so clearly that you have to ask yourself what movie you are in. Speaking of movies, NVENC has been making life easier since Turing, but once all the post-processing filters are properly accelerated, the forced pause at the editing desk is no longer even long enough for a decent coffee break.

And since we were just talking about moving images: the new Ampere cards support AV1 natively in the decoder (Intel’s Xe will probably do the same). But why AV1? The codec is up to 50% more efficient than H.264, which means you need only half the Internet bandwidth to transmit the same quality, including HDR and even 10-bit encoding. This could go up to 8K, theoretically. Unfortunately, the reality in Germany looks rather meagre, even among cable providers. But a start has been made, and the will counts for something.
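As a back-of-the-envelope sketch of what “up to 50% more efficient” means for bandwidth: the function name `av1_bitrate_mbps` and the 16 Mbit/s example stream are purely hypothetical, and the 50% figure is the best case quoted above, not a guaranteed saving.

```python
def av1_bitrate_mbps(h264_bitrate_mbps, savings=0.50):
    """Estimate the AV1 bitrate needed for the same quality as an H.264
    stream, given a fractional bitrate saving (0.50 = the quoted best case)."""
    if not 0.0 <= savings < 1.0:
        raise ValueError("savings must be in the range [0, 1)")
    return h264_bitrate_mbps * (1.0 - savings)


# Hypothetical example: an H.264 stream that needs 16 Mbit/s.
needed = av1_bitrate_mbps(16.0)
print(f"AV1 estimate: {needed:.1f} Mbit/s")
```

With a smaller real-world saving, say `savings=0.30`, the same call shows how quickly the advantage shrinks, which is why “up to” matters here.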

In summary: yes, you can work really well with the GeForce RTX 3080, and you can even find it really pretty, even if you can’t see it because it sits inside the case. The 3D community will probably pounce on it and hyperventilate with relish, because whether it is Octane, Arnold or Blender, this is where things really get wild. With OptiX, the GeForce RTX 3080 mutates into a cannibal that is in places almost twice as fast as the RTX 2080 Ti, Quadro punishment included. The same of course applies to video editing and all those workstation or CAD applications that do not need certified and optimized drivers to sprint.

GeForce RTX 3080 Founders Edition

This time, the in-house Founders Edition does many things right and much better than its predecessors, even if the strenuous efforts of the dual-slot design are not quite enough to put the card on the throne thermally and acoustically. Visually and haptically, the card is absolutely top-notch, and relatively space-saving on top of that. The business with the 12-pin connector is a running gag by now, but really tolerable. It is, it must be admitted without envy, the maximum you can squeeze out of such a compact cooler design at 320 watts and more.

That’s why it will find its fans and buyers, I’m sure. Without wanting to be a spoilsport: more cooling surface would certainly have allowed for more. But the implementation of the FE is smart, and the circuit board, at the latest, will certainly make it a coveted collector’s item. The greatness of the card lies in its size; the rest you can get over. Buy it? Absolutely. Convert it to water cooling? You can. So in that sense, it fits. The rest is a matter of testing.

Conclusion and final remark

The buy recommendation goes to the chip, which can make the supposed disadvantages of Samsung’s 8 nm node forgotten with a clever INT32 move. Yes, the power consumption has increased once again, but so has the performance. While the gaming efficiency in WQHD still lies between the GeForce RTX 2080 Super and RTX 2080 Ti, Ultra-HD at the latest shows that NVIDIA probably didn’t just crank up the power draw after all. When the CPU is completely eliminated as a limiting factor and pure computing power is required, the concept pays off.
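One common way to put a number on gaming efficiency is frames per second per watt. The sketch below assumes that definition (the reciprocal, watts per FPS, is equally valid); the function name `fps_per_watt`, the card labels and all figures are hypothetical and not taken from the measurements in this review.

```python
def fps_per_watt(avg_fps, avg_power_w):
    """Gaming efficiency as average FPS divided by average board power in watts."""
    if avg_power_w <= 0:
        raise ValueError("average power must be positive")
    return avg_fps / avg_power_w


# Hypothetical (FPS, watts) pairs for illustration only.
cards = {
    "Card A": (90.0, 250.0),
    "Card B": (120.0, 300.0),
}

efficiency = {name: fps_per_watt(fps, watts) for name, (fps, watts) in cards.items()}

# Rank the cards from most to least efficient.
ranking = sorted(efficiency, key=efficiency.get, reverse=True)
print(ranking)
```

In this made-up example the faster card draws more power and still ends up more efficient, which is the pattern the paragraph above describes for Ultra-HD.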

This can be seen above all in the productive area, whether workstation or content creation. Studio is the new GeForce wonderland, and the Quadros may slowly retreat into the professional corner of certified specialty programs. What AMD started back then with the Vega Frontier Edition and unfortunately didn’t continue (why not?), NVIDIA has long since taken up and consistently perfected. The market has changed and Studio is no longer an exotic term. Does anyone remember the early 1990s, when you had to run to a graphic designer for every little printout? Back then it was DTP, today it’s Studio. More and more self-published multimedia content and the shift of many tasks from expensive render farms back to the PC have created exactly the gap that such cards can now fill.

So let’s wait for the board partner cards and for what each manufacturer’s interpretation has to offer. Of course, all of this will be tested as well, step by step. Bored? Not likely. And then comes AMD…

- 1 - Introduction, Unboxing and Test System

- 2 - Teardown, PCB analysis and Cooler

- 3 - Gaming Performance: WQHD and Full-HD with RTX On

- 4 - Gaming Performance: Ultra-HD with and without DLSS

- 5 - FPS, Percentiles, Frame Time & Variances

- 6 - Frame Times vs. Power Consumption

- 7 - Workstation: CAD

- 8 - Studio: Rendering

- 9 - Studio: Video & Picture Editing

- 10 - Power Consumption: GPU and CPU in all Games

- 11 - Power Consumption: Efficiency in Detail

- 12 - Power Consumption: Summary, Transient Analysis and PSU Recommendation

- 13 - Temperatures and Thermal Imaging

- 14 - Noise and Sound Analysis

- 15 - NVIDIA Broadcast - more than a Gimmick?

- 16 - Summary, Conclusion and Verdict
