Design for the future: Tensor cores and DLSS

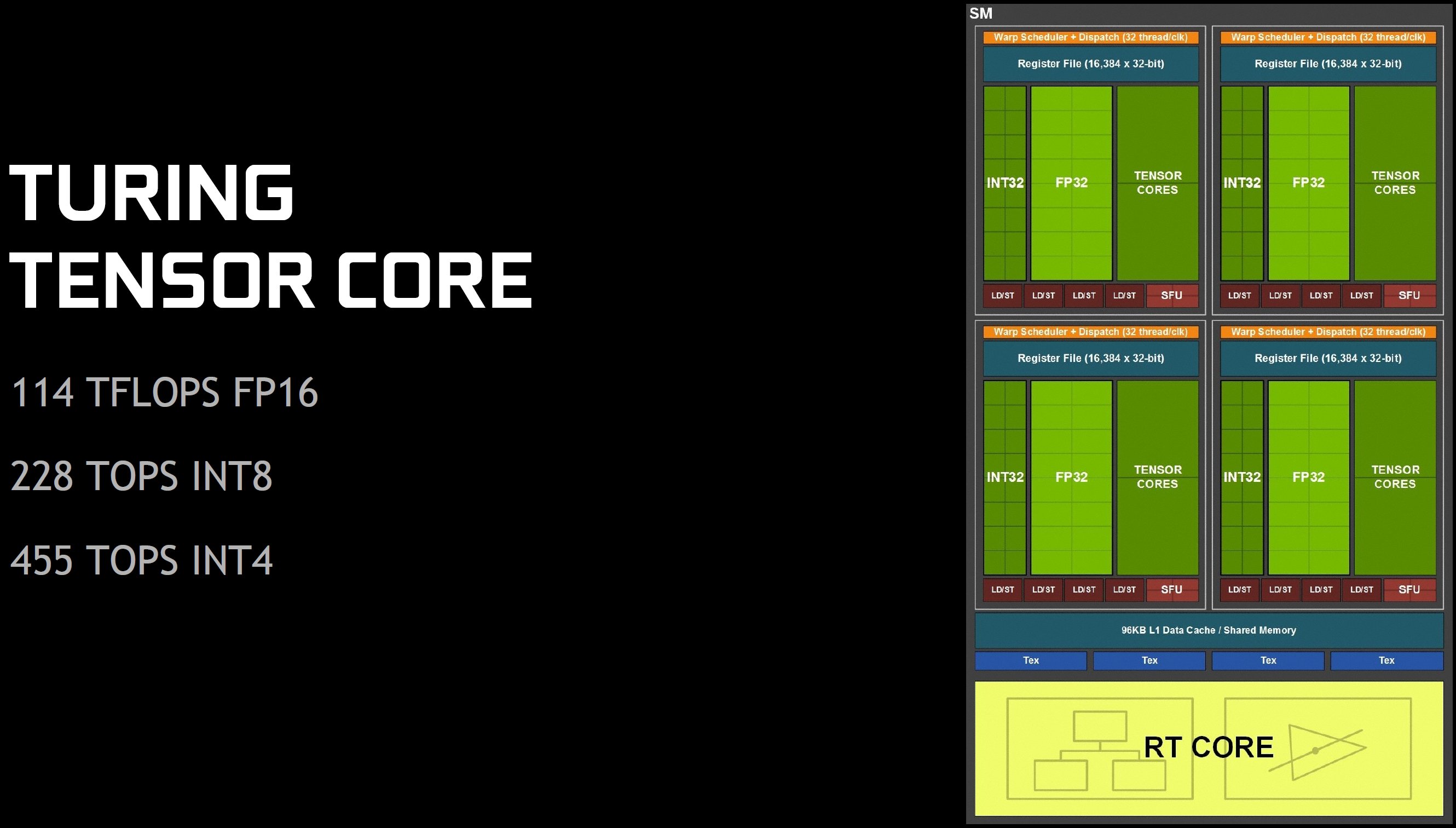

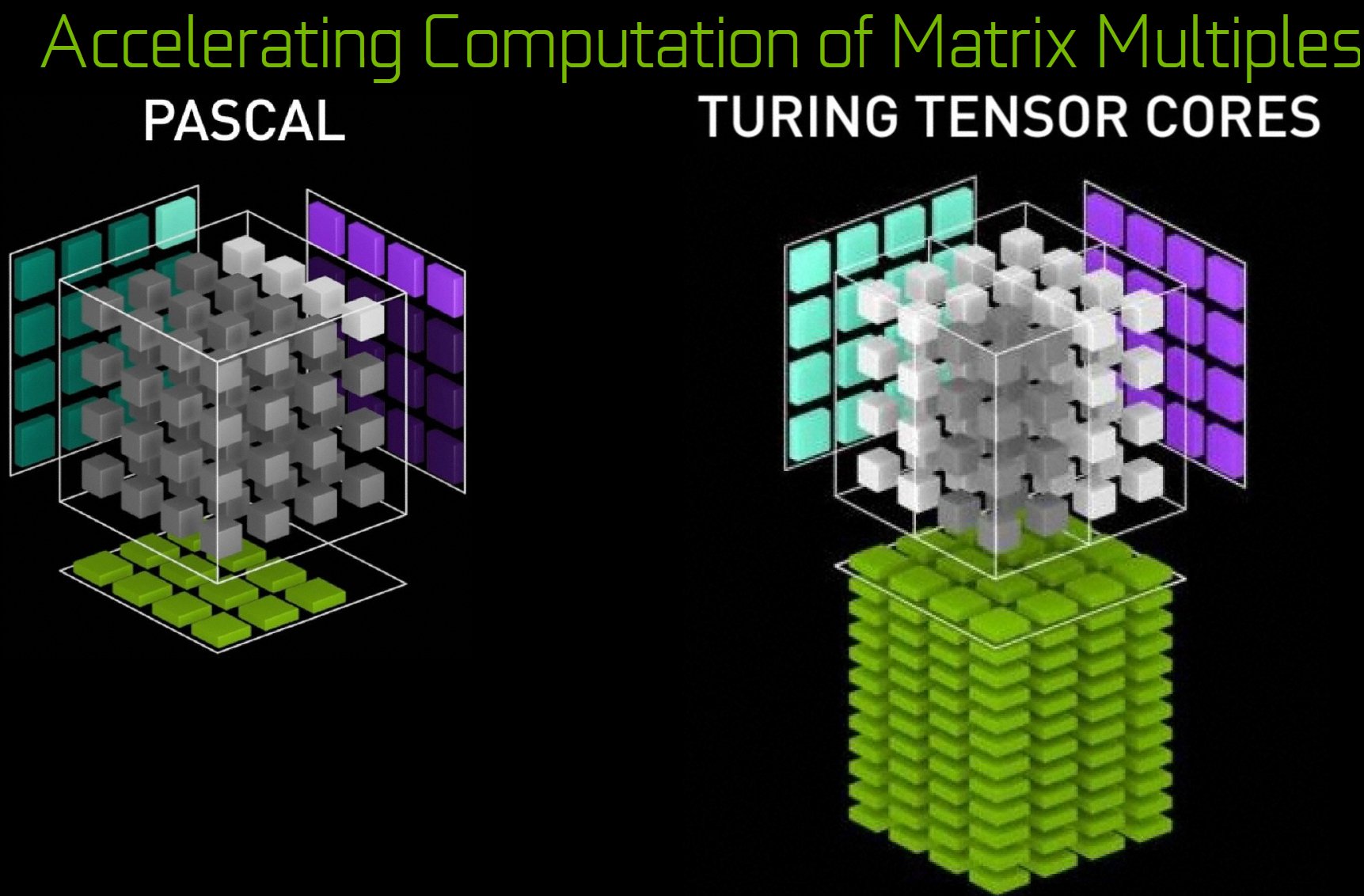

Although the Volta architecture brought many other significant changes compared to Pascal, the addition of tensor cores was the clearest indication of the GV100's actual purpose: accelerating 4×4 matrix operations with FP16 inputs, which form the basis for training neural networks and for inference (drawing explicit conclusions from implicit assumptions). Like the Volta SM, Turing has two tensor cores per quad (processing block), or eight per streaming multiprocessor. The TU102 has fewer SMs than the GV100 (72 vs. 84), and the GeForce RTX 2080 Ti has fewer SMs enabled than the Titan V (68 vs. 80).

As a result, the GeForce RTX 2080 Ti has only 544 tensor cores, while the Titan V has 640. The TU102's tensor cores are also somewhat slower: they support the FP32 accumulation used for deep learning training, but only at half the rate of FP16 accumulation. This makes sense, because the GV100 is designed for training neural networks, while the TU102 is a gaming chip meant to run already-trained networks for inference.
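Why the accumulator precision matters can be shown with a minimal pure-Python emulation of the tensor-core operation D = A×B + C on 4×4 matrices. This is a sketch under stated assumptions, not Nvidia's hardware algorithm: inputs are rounded to FP16 using the standard library's `struct` half-precision format (`'e'`), and the running sum is rounded after each addition, in a fixed left-to-right order, to either FP32 or FP16. The function names `to_fp16`, `to_fp32` and `mma_4x4` are hypothetical.

```python
import struct


def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision (FP16) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round x to the nearest IEEE 754 single-precision (FP32) value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def mma_4x4(a, b, c, fp32_accumulate=True):
    """Emulate D = A x B + C on 4x4 matrices with FP16 inputs.

    A and B are rounded to FP16. The product of two FP16 values is exact in a
    Python float (double precision); the running sum is rounded after every
    addition to the accumulator precision (FP32 or FP16). The summation order
    is a simplification, not documented hardware behavior.
    """
    round_acc = to_fp32 if fp32_accumulate else to_fp16
    d = []
    for i in range(4):
        row = []
        for j in range(4):
            acc = round_acc(c[i][j])
            for k in range(4):
                acc = round_acc(acc + to_fp16(a[i][k]) * to_fp16(b[k][j]))
            row.append(acc)
        d.append(row)
    return d


# Adding four small products onto a large accumulator value.
a = [[0.75] * 4 for _ in range(4)]
b = [[1.0] * 4 for _ in range(4)]
c = [[2048.0] * 4 for _ in range(4)]
print(mma_4x4(a, b, c, fp32_accumulate=True)[0][0])   # 2051.0
print(mma_4x4(a, b, c, fp32_accumulate=False)[0][0])  # 2048.0
```

At a magnitude of 2048, adjacent FP16 values are 2 apart, so each 0.75 increment is rounded away and the FP16 accumulator never moves, while the FP32 accumulator reaches the exact result of 2051. Errors like this add up over the long sums in training, which is one reason training workloads typically use FP32 accumulation.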

Focusing on FP16, INT8 and INT4 operations requires fewer transistors than building wide data paths for high precision. Performance also benefits from this shift: Nvidia claims that the TU102's tensor cores deliver up to 114 TFLOPS for FP16, 228 TOPS for INT8 and 455 TOPS for INT4.
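Nvidia's figures can be reproduced with simple arithmetic. The sketch below assumes two values not stated in this article: 64 FP16 fused multiply-adds (FMAs) per tensor core per clock, as given in Nvidia's Turing whitepaper, and the GeForce RTX 2080 Ti Founders Edition boost clock of 1,635 MHz. Each FMA counts as two operations, and INT8 and INT4 are modeled as two and four times the FP16 rate. The function name `peak_tensor_throughput` is hypothetical.

```python
# Assumption: 64 FP16 FMAs per tensor core per clock (Turing whitepaper).
FP16_FMA_PER_TENSOR_CORE_PER_CLOCK = 64
OPS_PER_FMA = 2  # one multiply plus one add
RATE_MULTIPLIER = {"FP16": 1, "INT8": 2, "INT4": 4}


def peak_tensor_throughput(tensor_cores: int, boost_clock_mhz: float,
                           precision: str = "FP16") -> float:
    """Peak tensor-core throughput in tera-operations per second."""
    ops_per_clock = (tensor_cores
                     * FP16_FMA_PER_TENSOR_CORE_PER_CLOCK
                     * OPS_PER_FMA
                     * RATE_MULTIPLIER[precision])
    return ops_per_clock * boost_clock_mhz * 1e6 / 1e12


# GeForce RTX 2080 Ti: 68 SMs x 8 tensor cores = 544; 1,635 MHz is an assumption.
for p in ("FP16", "INT8", "INT4"):
    print(f"{p}: {peak_tensor_throughput(544, 1635, p):.1f} T(FL)OPS")
```

This prints 113.8, 227.7 and 455.4; rounded, these match the 114, 228 and 455 figures Nvidia quotes.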

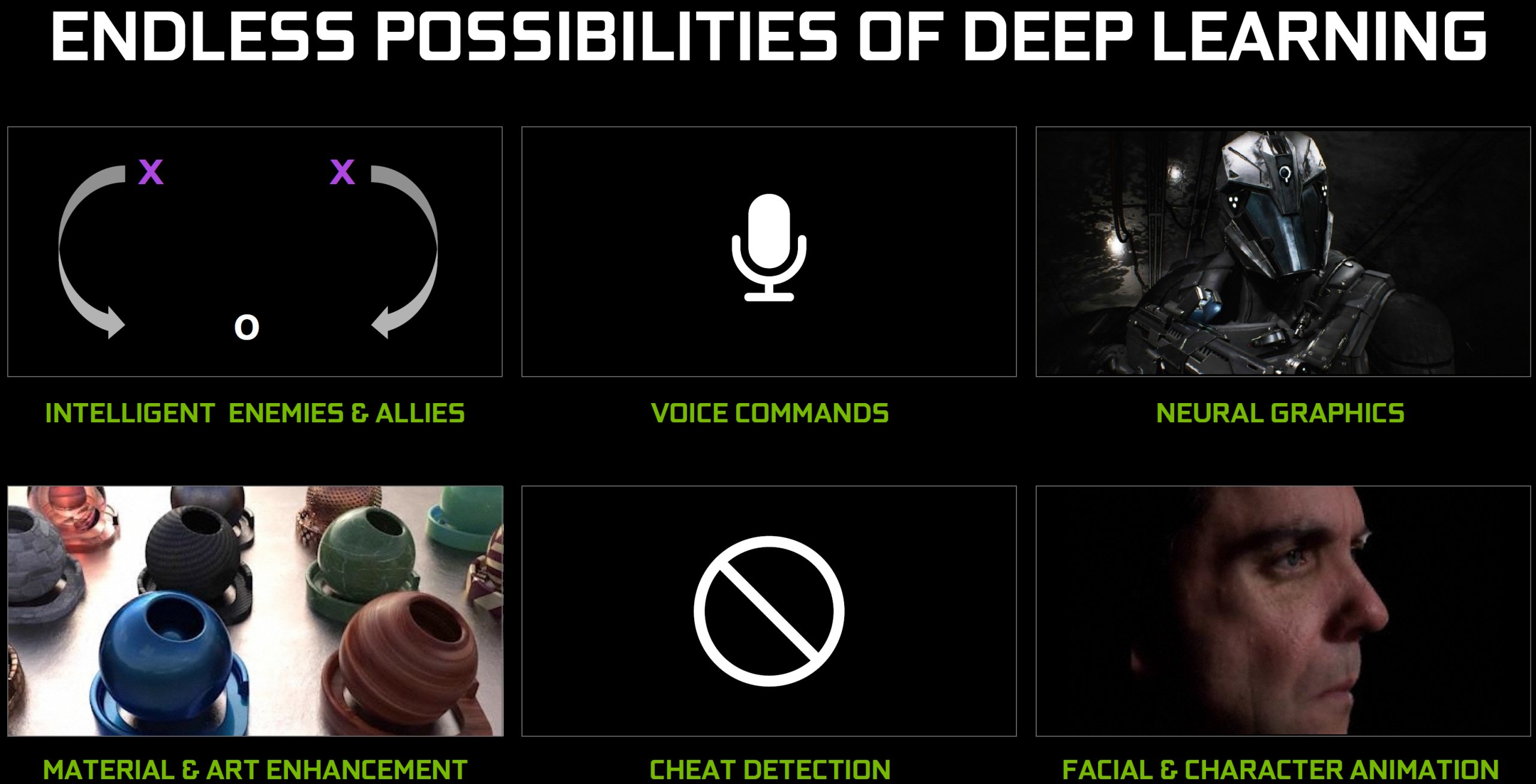

Most of Nvidia's current plans for the tensor cores concern neural graphics. But the company is also planning other deep learning applications for desktop graphics cards. Smart enemies, for example, could completely change the way players approach final boss battles. Speech synthesis, speech recognition, material enhancement, cheat detection and character animation are all areas where AI is already in use or where Nvidia sees at least decent potential for it.

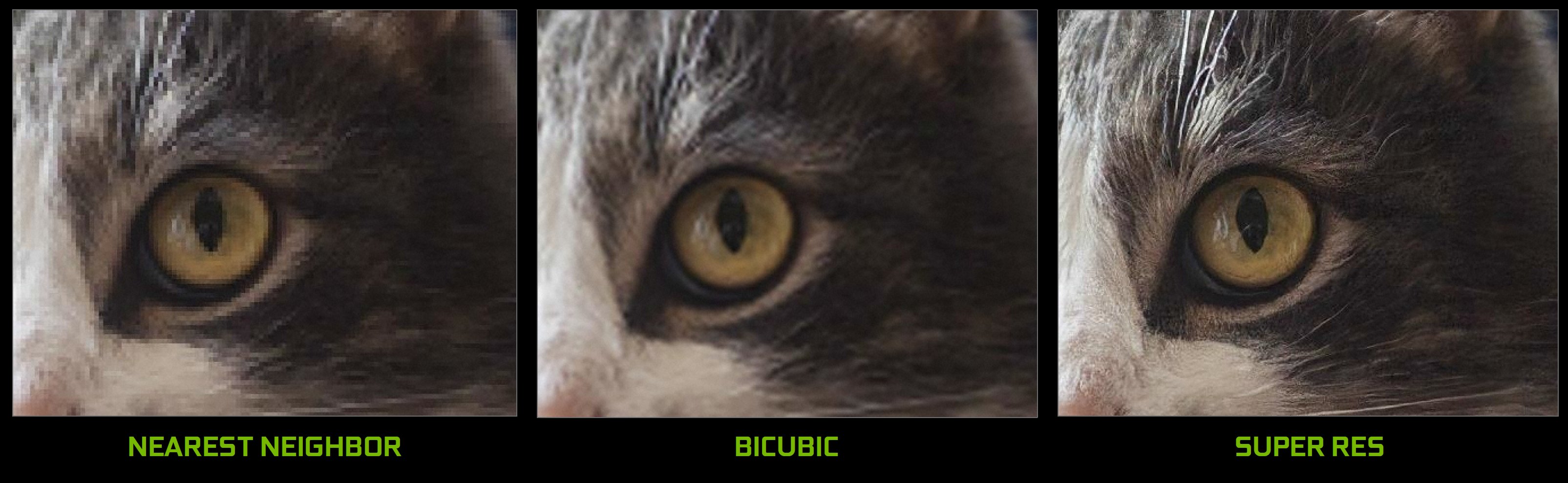

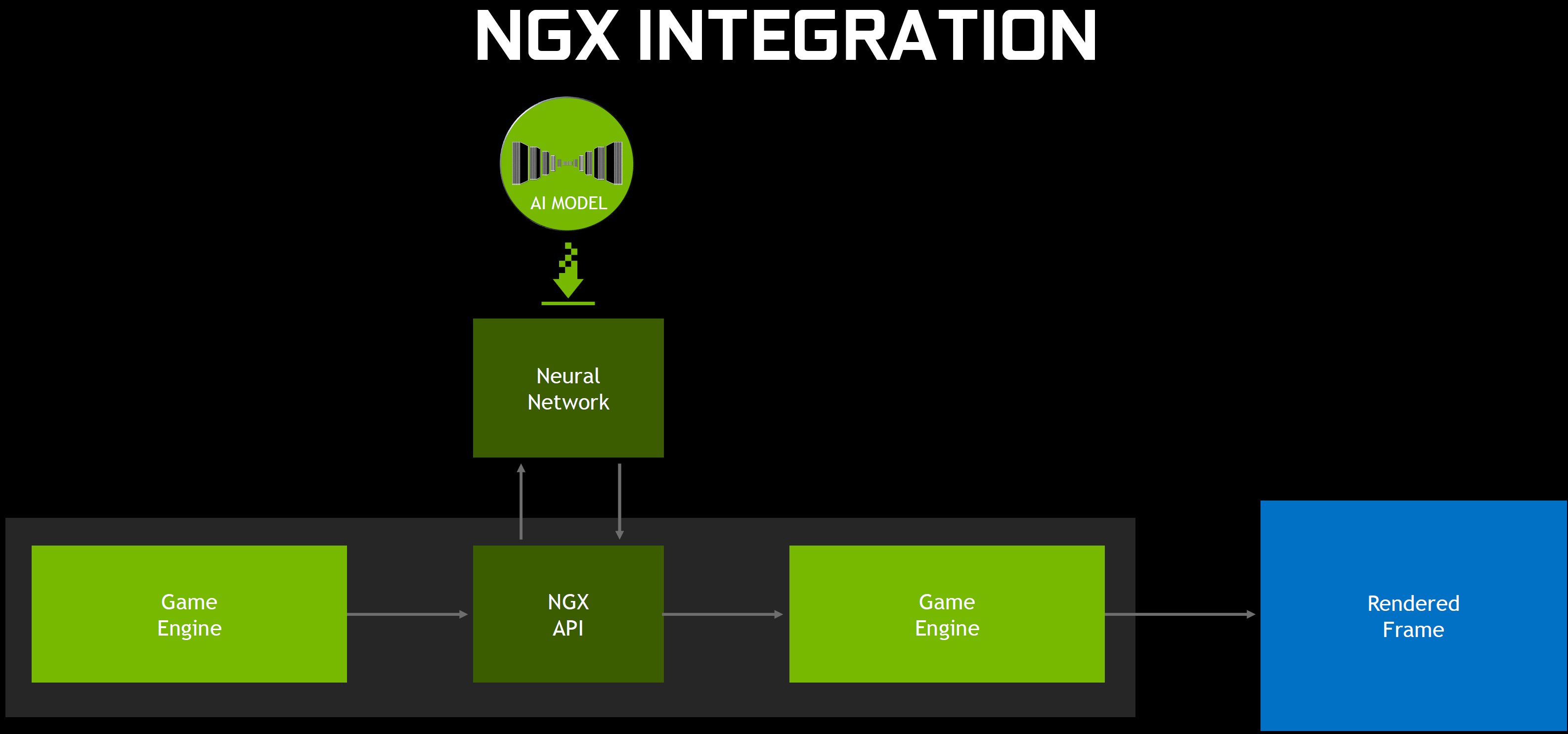

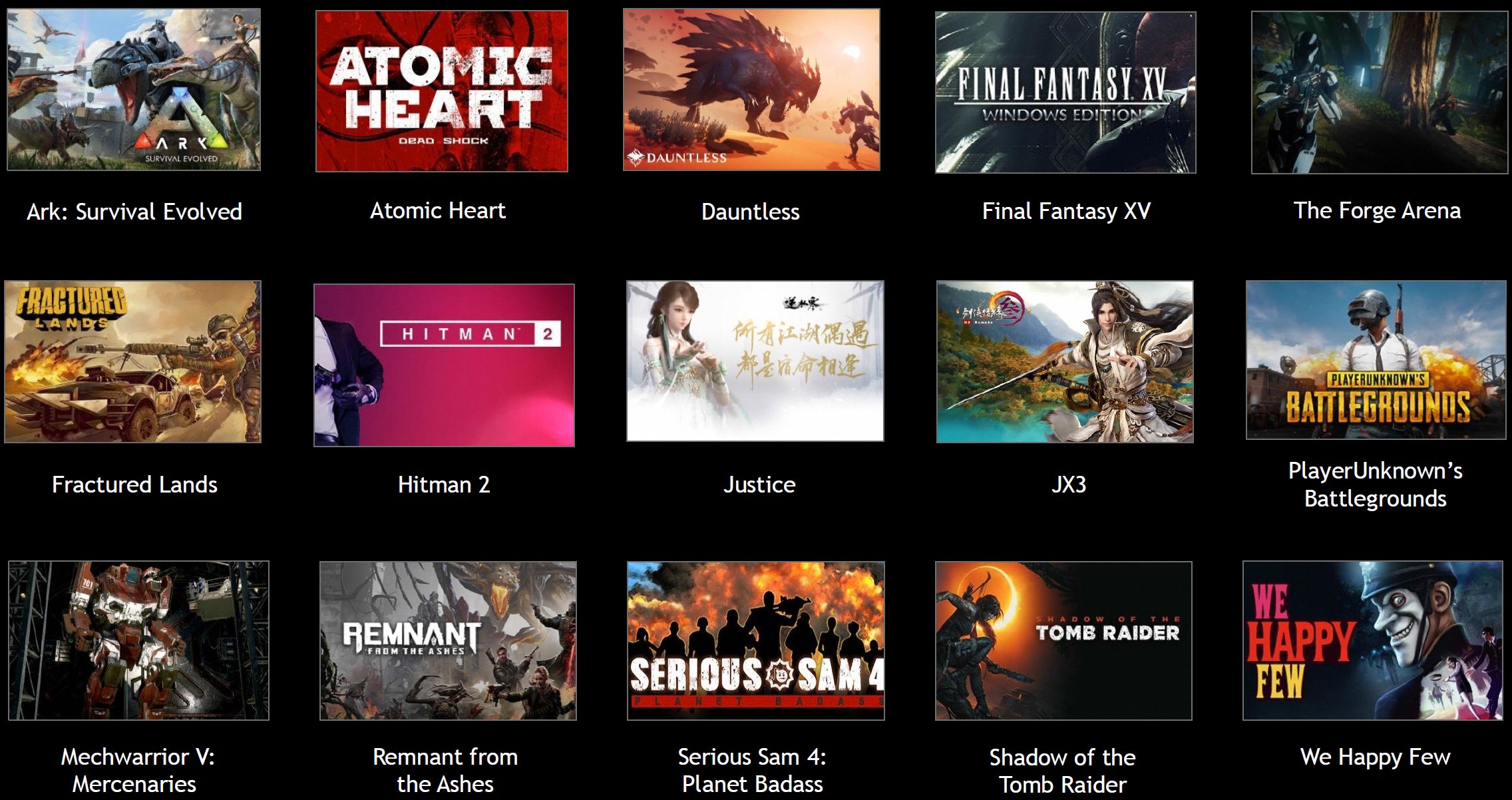

The real focus of GeForce RTX, of course, is Deep Learning Super Sampling (DLSS). Implementing DLSS requires developers to support Nvidia's NGX API. Nvidia promises that integration is fairly simple, and it has released a list of games with planned support to demonstrate developers' enthusiasm for what DLSS can do for image quality.

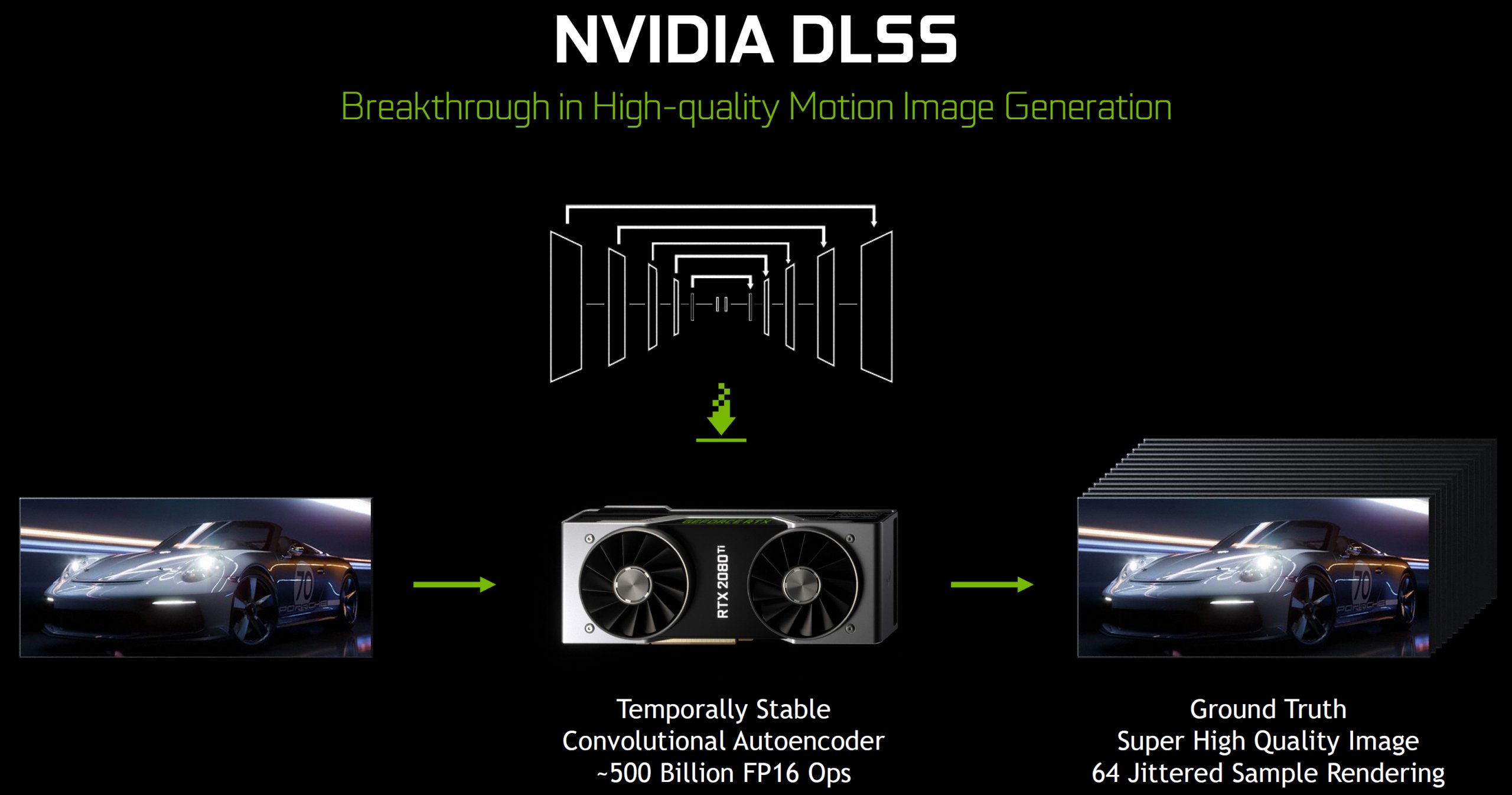

Presumably this is because Nvidia itself handles the most demanding part of the technique. The company generates the ground-truth images, which represent the highest achievable image quality, obtained through extremely high resolution, many samples per frame or many averaged frames. It then trains an AI model on its SaturnV supercomputer, built from 660 DGX-1 nodes, to bring low-quality images as close as possible to that reference.
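Nvidia's ground-truth pipeline is not public, so the following is only a sketch of the underlying idea, under assumptions chosen for illustration: a hypothetical hard-edged scene is rendered once with one sample per pixel and once with 64 stratified samples per pixel (an 8×8 grid), and the gap between the two is measured with mean squared error, the kind of loss a model would be trained to minimize. The scene, resolution, loss and all function names are hypothetical.

```python
def scene(x: float, y: float) -> float:
    """Hypothetical test scene: 1.0 below a sloped edge, 0.0 above it."""
    return 1.0 if y < 0.5 * x + 0.25 else 0.0


def render(width: int, height: int, samples_per_axis: int):
    """Render the unit square, averaging samples_per_axis**2 stratified samples per pixel."""
    n = samples_per_axis
    image = []
    for row in range(height):
        line = []
        for col in range(width):
            total = 0.0
            for sy in range(n):
                for sx in range(n):
                    # Sample at the center of each sub-cell of the pixel.
                    x = (col + (sx + 0.5) / n) / width
                    y = (row + (sy + 0.5) / n) / height
                    total += scene(x, y)
            line.append(total / (n * n))
        image.append(line)
    return image


def mse(image, reference) -> float:
    """Mean squared error between two images of equal size."""
    diffs = [(p - q) ** 2
             for r1, r2 in zip(image, reference)
             for p, q in zip(r1, r2)]
    return sum(diffs) / len(diffs)


ground_truth = render(16, 16, 8)  # 8 x 8 = 64 samples per pixel
aliased = render(16, 16, 1)       # 1 sample per pixel, at the pixel center
print(f"MSE of the 1-sample image vs. ground truth: {mse(aliased, ground_truth):.4f}")
```

The single-sample image can only produce hard 0/1 steps along the edge, while the 64-sample reference records fractional pixel coverage. A trained model's job, in this simplified picture, is to predict something close to the second image from the first.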

These models are delivered through the Nvidia driver and executed on the tensor cores of each GeForce RTX graphics card. Nvidia expects each AI model to be only a few megabytes in size, which makes it relatively easy to download such content (once) when needed. We just hope that DLSS will not be tied to GeForce Experience, which would impose a registration and installation requirement.

But is the immense effort behind DLSS really worth it? At this early stage, that is difficult to assess. We saw DLSS in Epic's Infiltrator demo, and it looked great. But it is unclear whether Nvidia can achieve equally ideal results in every game, regardless of genre, pace, level of environmental detail and so on. What we do know is that DLSS is a real-time convolutional autoencoder trained on images supersampled at 64x.
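What "convolutional autoencoder" means structurally can be shown with a toy, single-channel forward pass in pure Python. This is not Nvidia's network, whose architecture and weights are not public: the 3×3 box-filter weights, the single encoder and decoder layer, and all function names are hypothetical stand-ins. The encoder convolves with stride 2 to compress the frame into a half-resolution latent representation; the decoder upsamples it and convolves again to reconstruct a full-resolution frame. The sketch assumes even frame dimensions.

```python
def conv3x3(img, kernel, stride=1):
    """3x3 convolution (CNN-style cross-correlation) with zero padding."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(0, h, stride):
        row = []
        for x in range(0, w, stride):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += kernel[dy + 1][dx + 1] * img[yy][xx]
            row.append(acc)
        out.append(row)
    return out


def relu(img):
    """Elementwise max(0, v) nonlinearity."""
    return [[max(0.0, v) for v in row] for row in img]


def upsample2x(img):
    """Nearest-neighbor upsampling: each value becomes a 2x2 block."""
    out = []
    for row in img:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


# Hypothetical, untrained weights: a plain 3x3 box filter.
BOX = [[1 / 9] * 3 for _ in range(3)]


def autoencode(frame):
    """Encoder (stride-2 conv + ReLU) followed by decoder (upsample + conv)."""
    latent = relu(conv3x3(frame, BOX, stride=2))  # half resolution
    output = conv3x3(upsample2x(latent), BOX)     # back to full resolution
    return output, latent


frame = [[float((x + y) % 2) for x in range(8)] for y in range(8)]  # checkerboard
output, latent = autoencode(frame)
print(len(latent), len(latent[0]), len(output), len(output[0]))  # 4 4 8 8
```

A trained network would learn its kernels from data and use many channels and layers; the point here is only the data flow from a compressed latent representation back to a full-resolution frame.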

It receives a frame at normal resolution via the NGX API and returns a higher-quality version of that frame. According to Nvidia, 2x DLSS can reach the equivalent of 64x supersampling while completely avoiding the transparency artifacts and blurring that temporal anti-aliasing sometimes produces.

Shortly after the announcement at Gamescom, Nvidia began publishing performance comparisons between the GeForce RTX 2080 with DLSS and the GeForce GTX 1080. These results made it look as if DLSS would deliver higher frame rates. In reality, we believe the company is trying to suggest that a GeForce RTX 2080 with DLSS and without anti-aliasing achieves the same image quality as the previous generation with anti-aliasing and without DLSS, and that it is therefore fair to compare them this way. Well, perhaps.

Nvidia has announced that 15 games with DLSS support are already in the pipeline, including existing titles such as Ark: Survival Evolved, Final Fantasy XV and PlayerUnknown's Battlegrounds, as well as several that have not yet been released.
